Written by Maram Ayari, DevOps Engineer

What is Object Detection? How is it Different from Object Localization and Classification?

Object detection is a key task in computer vision, essential for enabling machines to analyze and understand visual data. In various applications, from autonomous driving to facial recognition, the ability to locate and classify objects within digital images is crucial. This process relies on artificial intelligence (AI), allowing computers to interpret visual information in a way similar to human perception. By recognizing and categorizing objects based on semantic categories, object detection plays a foundational role in many AI-powered systems.

At the heart of object detection are two techniques: object localization and object classification. Object localization involves identifying the precise location of objects within an image, typically using bounding boxes. Object classification, on the other hand, assigns each detected object to a specific category. When these two techniques are combined, object detection systems can simultaneously determine both the position and type of objects within images.

The following sections will delve into the process of setting up a custom object detection system, including how to preprocess a dataset, train the YOLOv8 model, and deploy a SageMaker endpoint for detecting custom objects through inference.

What is the YOLO model?

YOLO (You Only Look Once) is a real-time object detection algorithm introduced in 2015 by Joseph Redmon, Santosh Divvala, Ross Girshick, and Ali Farhadi. It is a single-stage object detector that uses a convolutional neural network (CNN, a type of deep learning model designed to process grid-structured data such as images) to predict the bounding boxes and class probabilities of objects in an input image. YOLO was initially implemented using the Darknet framework (an open-source neural network framework written in C and CUDA, optimized for performance).

The YOLO algorithm divides the input image into a grid of cells, with each cell predicting the probability of an object’s presence, the bounding box coordinates, and the object’s class. Unlike two-stage object detectors such as R-CNN and its variants, which first generate region proposals and then classify those proposals into object categories, YOLO processes the entire image in a single pass, making it faster and more efficient.
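The grid idea can be illustrated with a minimal sketch. It assumes box centers are given as normalized coordinates in [0, 1]; the function name `responsible_cell` and the default `grid_size=7` are illustrative choices, not part of any YOLO library.

```python
def responsible_cell(x_center, y_center, grid_size=7):
    """Return the (row, col) of the grid cell that contains a box center.

    x_center and y_center are normalized to [0, 1] relative to the image.
    grid_size is a hypothetical number of cells per side, for illustration.
    """
    if not (0.0 <= x_center <= 1.0 and 0.0 <= y_center <= 1.0):
        raise ValueError("center coordinates must be normalized to [0, 1]")
    # Scale to grid units and truncate; clamp so a center at exactly 1.0
    # falls in the last cell instead of one past the edge.
    col = min(int(x_center * grid_size), grid_size - 1)
    row = min(int(y_center * grid_size), grid_size - 1)
    return row, col
```

For example, an object centered in the middle of the image, `responsible_cell(0.5, 0.5)`, maps to cell `(3, 3)` of a 7x7 grid.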

Why YOLOv8?

YOLOv8 offers several improvements over previous versions of YOLO:

| Improvement | Description |
| --- | --- |
| Accuracy | YOLOv8 achieves state-of-the-art results on a variety of object detection benchmarks |
| Speed | YOLOv8 is faster than previous versions of YOLO, despite being more accurate |
| Developer experience | YOLOv8 includes several features that make it easier to use and customize compared to previous versions of YOLO |

Difference Between Variants of YOLOv8

The primary difference between the variants of YOLOv8 lies in the size and complexity of the model. Larger and more complex models offer greater accuracy but operate at a slower speed. In contrast, smaller and less complex models are faster but tend to be less accurate.

The following table compares the YOLOv8 variants by input image size, accuracy (measured by mAP 50-95), inference speed on CPU (ONNX) and on an A100 GPU (TensorRT), number of parameters, and floating-point operations (FLOPs).

| Model | Size (pixels) | mAP 50-95 | Speed CPU ONNX (ms) | Speed A100 TensorRT (ms) | Params (M) | FLOPs (B) |
| --- | --- | --- | --- | --- | --- | --- |
| YOLOv8n | 640 | 37.3 | 80.4 | 0.99 | 3.2 | 8.7 |
| YOLOv8s | 640 | 44.9 | 128.4 | 1.20 | 11.2 | 28.6 |
| YOLOv8m | 640 | 50.2 | 234.7 | 1.83 | 25.9 | 78.9 |
| YOLOv8l | 640 | 52.9 | 375.2 | 2.39 | 43.7 | 165.2 |
| YOLOv8x | 640 | 53.9 | 479.1 | 3.53 | 68.2 | 257.8 |
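One way to use these numbers is to pick the most accurate variant that fits a latency budget. The sketch below hard-codes the mAP and CPU ONNX figures from the table; the helper `pick_variant` and the idea of a single CPU latency budget are assumptions made for illustration, and real latency depends on the target hardware.

```python
# mAP 50-95 and CPU ONNX latency (ms), copied from the table above.
YOLOV8_VARIANTS = {
    "yolov8n": {"map": 37.3, "cpu_ms": 80.4},
    "yolov8s": {"map": 44.9, "cpu_ms": 128.4},
    "yolov8m": {"map": 50.2, "cpu_ms": 234.7},
    "yolov8l": {"map": 52.9, "cpu_ms": 375.2},
    "yolov8x": {"map": 53.9, "cpu_ms": 479.1},
}


def pick_variant(max_cpu_ms):
    """Return the most accurate variant within the latency budget, or None."""
    candidates = [
        (stats["map"], name)
        for name, stats in YOLOV8_VARIANTS.items()
        if stats["cpu_ms"] <= max_cpu_ms
    ]
    # max() compares by mAP first because it is the first tuple element.
    return max(candidates)[1] if candidates else None
```

With a 250 ms budget, `pick_variant(250)` selects `"yolov8m"`; a 50 ms budget returns `None` because no variant in the table is fast enough on CPU.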

Preparing a Custom Dataset

Building a custom dataset is crucial for enhancing the algorithm’s performance in scenarios where off-the-shelf pre-trained models may not suffice. This process involves the following steps:

- Gathering Background and Object Images: Collecting a diverse set of background images and images of the objects of interest.

- Overlaying Objects onto Backgrounds: Using automated image scripts to overlay the object images onto the background images, creating realistic scenarios that the algorithm needs to learn.

- Applying Data Augmentation: Utilizing various augmentation techniques, such as rotation, scaling, flipping, and color adjustments, to increase the variability and robustness of the dataset.

- Annotating Data: Ensuring each image has a YOLO-format annotation that gives the class and location (usually a bounding box) of each object. Annotation accuracy directly impacts model performance.

- Converting Annotation Format: Preparing annotations in the specific format required by YOLO. Each image is accompanied by a .txt file listing all objects with their class and bounding box information. Bounding boxes are formatted as:

<object-class> <x_center> <y_center> <width> <height>

The coordinates are normalized relative to image dimensions, and <object-class> represents the class index.

- Splitting the Dataset: Dividing the dataset into training, validation, and optionally, test sets. This step is crucial for avoiding overfitting and evaluating model performance. A typical split is 70% training, 15% validation, and 15% test. In this case, an 80% training and 20% validation split is used.

```python
import os
import random
import shutil


def split_dataset(base_path, train_ratio=0.8, val_ratio=0.2, background_dir='./backgrounds'):
    # Note: background_dir is not used by this function.
    # Source folders holding all images and their label files
    images_path = os.path.join(base_path, 'images')
    labels_path = os.path.join(base_path, 'labels')

    # Create train and val directories
    for set_type in ['train', 'val']:
        for content_type in ['images', 'labels']:
            os.makedirs(os.path.join(base_path, set_type, content_type), exist_ok=True)

    # Get all image filenames and shuffle them
    all_files = [f for f in os.listdir(images_path) if os.path.isfile(os.path.join(images_path, f))]
    random.shuffle(all_files)

    # Calculate split indices (int() truncates, so a few files may be left unassigned)
    total_files = len(all_files)
    train_end = int(train_ratio * total_files)
    val_end = train_end + int(val_ratio * total_files)

    # Split files
    train_files = all_files[:train_end]
    val_files = all_files[train_end:val_end]

    # Function to copy files
    def copy_files(files, set_type):
        for file in files:
            # Copy image
            shutil.copy(os.path.join(images_path, file), os.path.join(base_path, set_type, 'images'))
            # Copy corresponding label if it exists and is not empty
            label_file = file.rsplit('.', 1)[0] + '.txt'
            label_src = os.path.join(labels_path, label_file)
            if os.path.isfile(label_src) and os.path.getsize(label_src) > 0:
                shutil.copy(label_src, os.path.join(base_path, set_type, 'labels'))

    # Copy files to respective directories
    copy_files(train_files, 'train')
    copy_files(val_files, 'val')
```
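The annotation format described in the list above can be made concrete with a short sketch that converts a pixel-space bounding box into a YOLO label line. The function name `to_yolo_line` and the corner-based input convention (`x_min`, `y_min`, `x_max`, `y_max`) are assumptions for illustration; annotation tools may export boxes in other layouts.

```python
def to_yolo_line(class_id, x_min, y_min, x_max, y_max, img_w, img_h):
    """Convert a pixel-space box to '<class> <x_center> <y_center> <width> <height>'.

    All four box values are normalized by the image dimensions, so they
    fall in [0, 1] for any box that lies inside the image.
    """
    x_center = (x_min + x_max) / 2 / img_w
    y_center = (y_min + y_max) / 2 / img_h
    width = (x_max - x_min) / img_w
    height = (y_max - y_min) / img_h
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"
```

For a 640x480 image, `to_yolo_line(0, 100, 50, 300, 250, 640, 480)` produces `0 0.312500 0.312500 0.312500 0.416667`.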

Class distribution

The following code plots a bar chart of the class distribution in a dataset, computed from a list of label files (label_paths). Each label file contains one object annotation per line: a class ID followed by the coordinates of the object in the image.

First, a Counter object named class_counts is created to track the number of instances for each class ID. The code then iterates over each label file, reads the lines, extracts the class ID from each line, and updates the class_counts object with the count of each class ID.

Next, the class IDs are replaced with class names using a list called class_names, and a new dictionary, class_counts_names, is created with the class names as keys and their corresponding counts from class_counts as values.

Finally, a pandas DataFrame is created from the class_counts_names dictionary, and a bar chart is plotted using matplotlib. The x-axis represents the class names, the y-axis represents the number of instances for each class, and the plot is titled “Class Distribution.”

```python
from collections import Counter

import matplotlib.pyplot as plt
import pandas as pd

# label_paths (list of label file paths) and class_names (list indexed by
# class ID) are assumed to be defined earlier.
class_counts = Counter()
for label_file in label_paths:
    with open(label_file, "r") as file:
        lines = file.readlines()
    # The first token of each non-empty line is the class ID
    class_counts.update(int(line.split()[0]) for line in lines if line.strip())

# Replace class IDs with class names
class_counts_names = {class_names[class_id]: count for class_id, count in class_counts.items()}

# Create a pandas DataFrame and plot the bar chart
df = pd.DataFrame.from_dict(class_counts_names, orient="index", columns=["count"])
ax = df.plot(kind="bar")
plt.xlabel("Classes")
plt.ylabel("Number of Instances")
plt.title("Class Distribution")
plt.show()
```

Training YOLOv8

How to Prevent Overfitting?

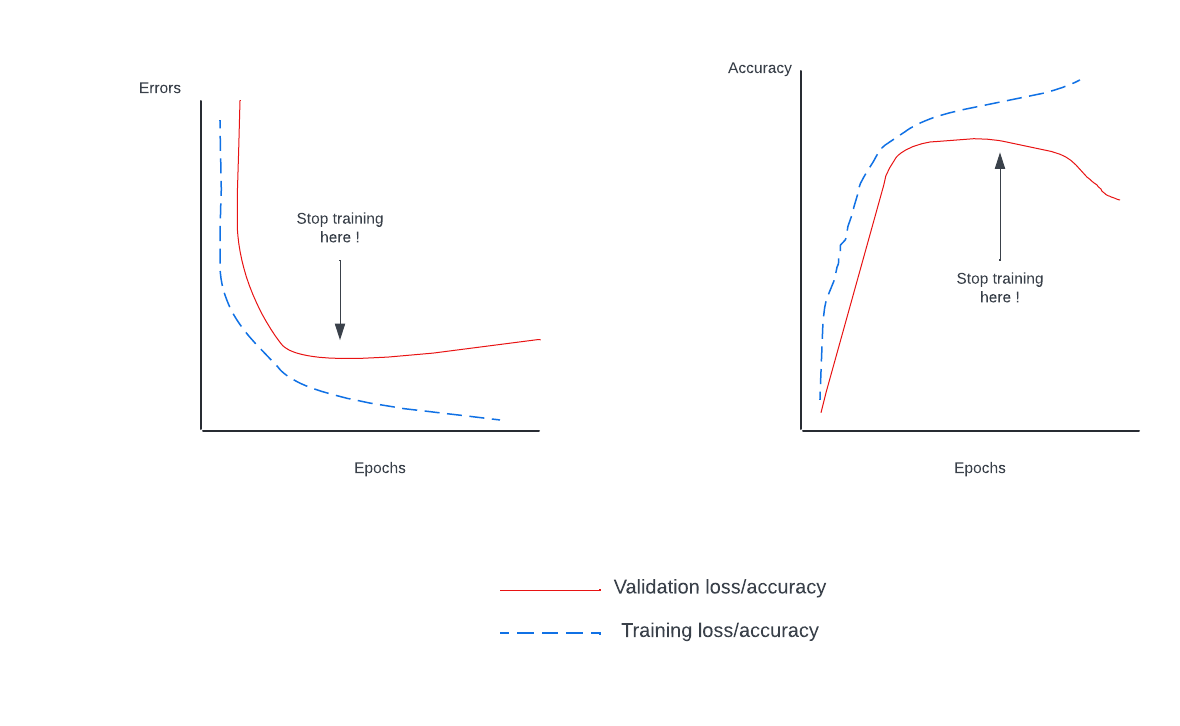

Overfitting is a scenario where a model performs well on training data but poorly on data not seen during training. This occurs when the model has memorized the training data rather than learning the underlying relationships between features and labels.

Overfitting is indicated when the training set shows high precision, but the validation set does not. This suggests that the model can recognize the images it was trained on but struggles to make accurate predictions when presented with new data.
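As a rough illustration of this check, the sketch below flags a run when training precision exceeds validation precision by more than a chosen margin. The function name `looks_overfit` and the default `max_gap=0.15` are arbitrary assumptions; a sensible threshold depends on the dataset and the metric.

```python
def looks_overfit(train_precision, val_precision, max_gap=0.15):
    """Return True when training precision exceeds validation precision
    by more than max_gap (a hypothetical, dataset-dependent threshold)."""
    return (train_precision - val_precision) > max_gap
```

For example, `looks_overfit(0.98, 0.70)` is `True`, while `looks_overfit(0.90, 0.88)` is `False`.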

Early Stopping

Early stopping is a valuable technique for optimizing model training by monitoring validation performance and halting training once the model stops improving. This approach conserves computational resources and helps prevent overfitting.

The process involves setting a patience parameter that determines how many epochs to wait for an improvement in validation metrics before stopping training. If the model’s performance does not improve within these epochs, training is stopped to avoid unnecessary time and resource expenditure.

For YOLOv8, early stopping can be enabled by setting the patience parameter in the training configuration. For example, patience=5 means training will stop if there is no improvement in validation metrics for 5 consecutive epochs. This keeps training efficient by not spending compute on epochs that no longer improve validation performance.
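The patience logic itself can be sketched in plain Python. This is a simplified illustration of the idea, not the YOLOv8 implementation: the function name `early_stopping_epoch` is hypothetical, and it assumes a single validation score per epoch where higher is better.

```python
def early_stopping_epoch(val_scores, patience=5):
    """Return the 0-based epoch at which training would stop, or None.

    Training stops once `patience` consecutive epochs have passed without
    the validation score beating the best value seen so far.
    """
    best_score = float("-inf")
    best_epoch = -1
    for epoch, score in enumerate(val_scores):
        if score > best_score:
            # New best: remember it and reset the waiting period.
            best_score, best_epoch = score, epoch
        elif epoch - best_epoch >= patience:
            return epoch
    return None  # never ran out of patience
```

With scores `[0.1, 0.2, 0.3, 0.3, 0.29, 0.28, 0.3, 0.29]` and `patience=5`, the best score is reached at epoch 2 and training stops at epoch 7, after five epochs without improvement.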

Learning Rate

This hyperparameter controls the extent to which the model's parameters are updated in each training step. A lower learning rate generally requires more epochs for convergence, while a higher rate can speed up training but increases the risk of unstable updates that overshoot good solutions or fail to converge.
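The effect of the step size can be seen on a toy problem. The sketch below runs plain gradient descent on f(x) = x², which is far simpler than training a detector; the function name `steps_to_converge` and all default values are assumptions chosen for illustration.

```python
def steps_to_converge(lr, start=1.0, tol=1e-3, max_steps=1000):
    """Minimize f(x) = x**2 with gradient descent and count the steps
    needed to reach |x| < tol. Returns None if that never happens."""
    x = start
    for step in range(1, max_steps + 1):
        x -= lr * 2 * x  # the gradient of x**2 is 2x
        if abs(x) < tol:
            return step
    return None
```

Here a small rate such as `0.01` converges but needs hundreds of steps, a moderate rate such as `0.4` converges in 5 steps, and a rate of `1.1` makes each update overshoot so far that the iterate grows instead of shrinking and the function returns `None`.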

Creating a Data Configuration File

Create a YAML file (e.g., dataset.yaml, the file name the training script below expects) with the following content:

```yaml
path: ./project_path
train: train/images
val: val/images
nc: <number_of_classes>
names:
  - 'class1'
  - 'class2'
  - 'class3'
  - …
```
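A mismatch between nc and the number of entries under names is an easy mistake to make in a file like this. The sketch below checks a configuration that has already been loaded into a Python dictionary; the helper `check_data_config` is hypothetical, and loading the YAML file itself (for example with a YAML parser) is left out.

```python
def check_data_config(cfg):
    """Return a list of problems found in a data configuration dict.

    The keys checked mirror the example file above; an empty list
    means no problem was detected.
    """
    problems = []
    for key in ("path", "train", "val", "nc", "names"):
        if key not in cfg:
            problems.append(f"missing key: {key}")
    if "nc" in cfg and "names" in cfg and cfg["nc"] != len(cfg["names"]):
        problems.append(
            f"nc is {cfg['nc']} but {len(cfg['names'])} class names are listed"
        )
    return problems
```

Running such a check before training can surface a forgotten class name early, rather than partway through a training run.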

Creating a Script to Train the Model

```python
import argparse
import os

import torch
from ultralytics import YOLO


def fine_tune(epochs):
    file_path = './dataset.yaml'
    if not os.path.isfile(file_path):
        print(f"\nThe file '{file_path}' does not exist. Run main.py to generate it.")
        return

    # Use the first GPU when one is available, otherwise fall back to the CPU
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    if device.type == "cuda":
        torch.cuda.set_device(0)
    print(f'Using device: {device}')

    # Load a pretrained YOLO model
    model = YOLO("yolov8n.pt", task='detect')

    # Train the model
    results = model.train(data=file_path, epochs=epochs)


if __name__ == "__main__":
    parser = argparse.ArgumentParser()
    parser.add_argument("-e", "--epochs", help="Number of epochs", type=int, default=100)
    args = parser.parse_args()
    fine_tune(args.epochs)
```

Closing Thoughts

This article has provided a comprehensive guide to setting up a custom object detection system using YOLOv8. It covered the essential steps, including preparing a custom dataset, training the model, and preventing overfitting, while also highlighting the differences between YOLOv8 variants. The process of fine-tuning the model and configuring the training environment was also discussed, ensuring that users have a clear understanding of how to implement and optimize their own object detection models.

For most applications, it is recommended to start with the smaller YOLOv8 models (e.g., YOLOv8n or YOLOv8s) to gauge initial performance and adjust complexity as needed. Additionally, leveraging data augmentation and monitoring validation metrics closely are key to improving accuracy while avoiding overfitting, especially when working with custom datasets.

About Maram Ayari

DevOps Engineer at TrackIt with almost a year of experience, Maram holds a master’s degree in software engineering and has deep expertise in a diverse range of key AWS services such as Amazon EKS, OpenSearch, and SageMaker.

Maram also has a background in both front-end and back-end development. Through her articles on medium.com, Maram shares insights, experiences, and advice about AWS services. She is also a beginner violinist.