If you need to host a website that only contains static content, S3 might be an excellent choice for its simplicity: you upload your files to your bucket and don’t need to worry about anything else.

However, today’s companies are looking for automation, and automation often means Jenkins (or any other CI tool, but we are going to use Jenkins in this example).

In the first part of this article, we are going to discuss how to automate the build of a Ruby website (the approach works for any language) and upload it to S3 with Docker and Jenkins. In the second part, we are going to discuss how to password-protect it with Nginx and Kubernetes.

Automated build with Jenkins and Docker

Create Dockerfile

In this part, I’m assuming that your project is hosted in a Git repository and that this repository is accessible by Jenkins (if not, look at the Jenkins Git plugin).

To keep your Jenkins installation clean and avoid installing a lot of packages and dependencies, we are going to use a temporary Docker container to build your website.

Our website is written in Ruby, so we are going to use the following Dockerfile. Feel free to adapt it if you’re using a different language.

FROM ruby:2.2.7
RUN mkdir -p /app
ADD . /app
WORKDIR /app
RUN apt-get update
RUN gem install --no-ri --no-rdoc bundler
RUN bundle install

/app is where our project will be built.

This Dockerfile needs to be placed at the root of your project dir.

Note that the Dockerfile only prepares the build environment; it does not build the website itself. The site build command will be executed by Jenkins inside the container.

Let’s try to build it locally:

docker build -t my-static-website .
mkdir website && docker run -v `pwd`/website:/app/build my-static-website bundle exec middleman build --clean

If everything went well, the generated site should be in the website/ directory on your machine. If so, we can proceed to the next step.

Creating S3 bucket

We need to create our S3 bucket, give it the proper permissions and create an IAM user so Jenkins is allowed to upload to it.

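Let’s call it static-website-test and put it in the region you prefer (bucket names are globally unique, so you will likely need your own name; substitute it wherever static-website-test appears). Leave everything as default and confirm creation.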

In your bucket settings, under the Properties tab, enable static website hosting. Under Permissions, paste the following policy so the website will be publicly accessible:

{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Sid": "PublicReadGetObject",
            "Effect": "Allow",
            "Principal": "*",
            "Action": "s3:GetObject",
            "Resource": "arn:aws:s3:::static-website-test/*"
        }
    ]
}
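If you host several sites this way, the policy above can be generated instead of edited by hand. The following is a minimal Python sketch under that assumption; the helper name `public_read_policy` is hypothetical, and the code only builds the JSON document without calling AWS.

```python
import json


def public_read_policy(bucket: str) -> dict:
    """Build a bucket policy that lets anyone read objects in `bucket`."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "PublicReadGetObject",
                "Effect": "Allow",
                "Principal": "*",
                "Action": "s3:GetObject",
                # The trailing /* targets the objects, not the bucket itself.
                "Resource": f"arn:aws:s3:::{bucket}/*",
            }
        ],
    }


if __name__ == "__main__":
    # Print the policy for the bucket name used in this article.
    print(json.dumps(public_read_policy("static-website-test"), indent=4))
```

The printed JSON can be pasted into the bucket policy editor as described above.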

Creating IAM user for Jenkins

Access the IAM section of your AWS console, create a new user, and give it the following permissions (so the user is only allowed to access the static website bucket):

{
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": [
                "s3:ListBucket"
            ],
            "Resource": [
                "arn:aws:s3:::static-website-test"
            ]
        },
        {
            "Effect": "Allow",
            "Action": [
                "s3:PutObject",
                "s3:GetObject",
                "s3:DeleteObject"
            ],
            "Resource": [
                "arn:aws:s3:::static-website-test/*"
            ]
        }
    ]
}
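The policy has two statements because s3:ListBucket applies to the bucket itself, while the object actions apply to the keys inside it (the ARN ending in /*). Mixing the two up is a common reason for access-denied errors during upload. Here is a small Python sketch of a sanity check for that split; the helper name `check_resources` is hypothetical, and it assumes "Action" and "Resource" are written as lists, as in the policy above.

```python
# Hypothetical sanity check: bucket-level actions must target the bucket ARN,
# object-level actions must target the objects inside it ("<arn>/*").
BUCKET_ACTIONS = {"s3:ListBucket"}
OBJECT_ACTIONS = {"s3:PutObject", "s3:GetObject", "s3:DeleteObject"}


def check_resources(policy: dict, bucket: str) -> list:
    """Return a list of human-readable problems found in `policy`."""
    bucket_arn = f"arn:aws:s3:::{bucket}"
    problems = []
    for statement in policy["Statement"]:
        for action in statement["Action"]:
            if action in BUCKET_ACTIONS:
                expected = bucket_arn
            elif action in OBJECT_ACTIONS:
                expected = bucket_arn + "/*"
            else:
                problems.append(f"unexpected action {action}")
                continue
            if expected not in statement["Resource"]:
                problems.append(f"{action} should target {expected}")
    return problems


# The Jenkins user policy from this article, written as a Python dict.
jenkins_policy = {
    "Version": "2012-10-17",
    "Statement": [
        {"Effect": "Allow", "Action": ["s3:ListBucket"],
         "Resource": ["arn:aws:s3:::static-website-test"]},
        {"Effect": "Allow",
         "Action": ["s3:PutObject", "s3:GetObject", "s3:DeleteObject"],
         "Resource": ["arn:aws:s3:::static-website-test/*"]},
    ],
}

# An empty list means every action points at the right kind of resource.
print(check_resources(jenkins_policy, "static-website-test"))
```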

Create Jenkinsfile

Now that everything is ready on the AWS side, we need to write a Jenkinsfile. A Jenkinsfile is a text file that contains the definition of a Jenkins Pipeline and is checked into source control.

For this part, I assume that Docker is configured in Jenkins and that the AWS plugins providing the withAWS and s3Upload pipeline steps are installed.

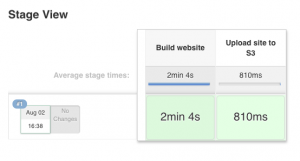

Our pipeline is going to have two stages: building the website and uploading it to S3.

#!/usr/bin/env groovy

// Used as the Docker image name, so it must be a valid repository name (no "/*" suffix).
def appName = "static-website-test"

node {
    stage('Build website') {
        docker.withServer(env.DOCKER_BUILD_HOST) {
            deleteDir()
            checkout scm
            // Tag the image with the short commit hash.
            sh "git rev-parse --short HEAD > .git/commit-id"
            commitId = readFile('.git/commit-id').trim()
            imageId = "${appName}:${commitId}".trim()
            appEnv = docker.image(imageId)
            sh "docker build --tag ${imageId} --label BUILD_URL=${env.BUILD_URL} ."
            // Build the site inside the container; the output lands in ./website.
            sh "mkdir website && docker run -v `pwd`/website:/app/build ${imageId} bundle exec middleman build --clean"
        }
    }

    stage('Upload site to S3') {
        withAWS(credentials: 'AWS_STATIC_S3_SITES') {
            s3Upload(file:'website', bucket:'static-website-test', path: '')
        }
    }
}

Commit to repository

Push your Dockerfile and Jenkinsfile to your repository. Depending on your Jenkins configuration, the build may or may not start automatically. If it doesn’t, go to your project and click Build Now.

Make sure that all stages have finished successfully, then visit your website URL (shown in the static website hosting settings of your S3 bucket), and you should see your website. Congratulations!

Password-protect the website with Kubernetes and Nginx

Now let’s imagine that you need to password-protect your website (to host internal documentation, for example). To do that, we need a reverse proxy in front of the website, because S3 doesn’t support that kind of customization (it’s page hosting at its simplest).

Detailing the installation of a simple nginx reverse proxy would be boring, so let’s assume instead that you’re hosting your own Kubernetes cluster in your AWS VPC. Kubernetes is a powerful tool that can manage AWS resources for you. For example, if you deploy multiple websites on your cluster, Kubernetes manages the ports and Elastic Load Balancers for you. With a single command, you can have a running website with an ELB created and associated.

Sounds amazing? Look how simple it is:

Prepare nginx configuration

Connect to your Kubernetes cluster.

Create your password db:

echo -n 'user:' >> htpasswd
openssl passwd -apr1 >> htpasswd

Configuration that will be used by the nginx container (my-static-website.conf):

upstream awses {
    server static-website-test.s3-website-us-east-1.amazonaws.com fail_timeout=0;
}

server {
    listen 80;
    server_name _;
    keepalive_timeout 120;
    access_log /var/log/nginx/static-website.access.log;

    location / {
        auth_basic "Restricted Content";
        # The secret is mounted at /etc/nginx/conf.d, so the password file lives there.
        auth_basic_user_file /etc/nginx/conf.d/.htpasswd;

        proxy_pass http://awses;
        # The Host header must match the bucket's website endpoint.
        proxy_set_header Host static-website-test.s3-website-us-east-1.amazonaws.com;
        # Do not forward the basic-auth credentials to S3.
        proxy_set_header Authorization "";
        proxy_http_version 1.1;
        proxy_set_header Connection "";

        proxy_hide_header x-amz-id-2;
        proxy_hide_header x-amz-request-id;
        proxy_hide_header x-amz-meta-server-side-encryption;
        proxy_hide_header x-amz-server-side-encryption;
        proxy_hide_header Set-Cookie;
        proxy_ignore_headers Set-Cookie;
        proxy_intercept_errors on;

        add_header Cache-Control max-age=31536000;
    }
}

To pass them to Kubernetes, we are going to encode both files in base64 and store them as a secret.

cat htpasswd | base64
cat my-static-website.conf | base64

Copy and paste the outputs into your secret file (nginx-auth-secret.yml):

apiVersion: v1
kind: Secret
metadata:
  name: nginx-auth-config
  namespace: default
type: Opaque
data:
  .htpasswd: BASE64_OUTPUT
  my-static-website.conf: BASE64_OUTPUT
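Copying base64 output by hand is error-prone, especially when the encoder wraps long lines. As an alternative, here is a minimal Python sketch that encodes both files and fills in the manifest in one go. The helper names `encode_file` and `render_secret` are hypothetical; the manifest fields are the ones shown above.

```python
import base64
from pathlib import Path

# Same manifest as above, with placeholders for the two encoded files.
SECRET_TEMPLATE = """\
apiVersion: v1
kind: Secret
metadata:
  name: nginx-auth-config
  namespace: default
type: Opaque
data:
  .htpasswd: {htpasswd}
  my-static-website.conf: {conf}
"""


def encode_file(path) -> str:
    """Return the file's content as a single-line base64 string."""
    return base64.b64encode(Path(path).read_bytes()).decode("ascii")


def render_secret(htpasswd_path, conf_path) -> str:
    """Fill the Secret manifest with the two encoded files."""
    return SECRET_TEMPLATE.format(
        htpasswd=encode_file(htpasswd_path),
        conf=encode_file(conf_path),
    )
```

Run from the directory that holds both files, `Path("nginx-auth-secret.yml").write_text(render_secret("htpasswd", "my-static-website.conf"))` writes the finished manifest.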

Now let’s write our deployment file (nginx-auth-deployment.yml):

# extensions/v1beta1 Deployments were removed in Kubernetes 1.16;
# apps/v1 requires an explicit selector matching the pod labels.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-auth
  labels:
    name: nginx-auth
spec:
  replicas: 1
  selector:
    matchLabels:
      name: nginx-auth
  template:
    metadata:
      labels:
        name: nginx-auth
    spec:
      imagePullSecrets:
        - name: docker-registry
      volumes:
        - name: "configs"
          secret:
            secretName: nginx-auth-config
      containers:
        - name: nginx-auth
          image: nginx:latest
          imagePullPolicy: Always
          command:
            - "/bin/sh"
            - "-c"
            - "sleep 5 && nginx -g \"daemon off;\""
          volumeMounts:
            - name: "configs"
              mountPath: "/etc/nginx/conf.d"
              readOnly: true
          ports:
            - containerPort: 80
              name: http

Then do the same for the service (nginx-auth-svc.yml):

apiVersion: v1
kind: Service
metadata:
  labels:
    name: nginx-proxy-svc
  name: nginx-proxy-svc
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-backend-protocol: http
spec:
  ports:
    - port: 80
      targetPort: 80
      name: http
  selector:
    name: nginx-auth
  type: LoadBalancer

It’s this last file that interests us. While the others deal with the nginx and deployment configuration, this one links your deployment to AWS: the LoadBalancer service type is what makes Kubernetes create an Elastic Load Balancer for the service. The file is customizable through annotations, such as the backend protocol annotation used here.

Now create your deployment and service:

kubectl apply -f nginx-auth-secret.yml
kubectl create -f nginx-auth-deployment.yml
kubectl create -f nginx-auth-svc.yml

You can follow the progress with the following two commands:

kubectl describe deployment nginx-auth
kubectl describe service nginx-proxy-svc

Once everything is completed, the description of your service should give you the URL of your ELB. Visit this URL and congratulations, your website is available and password-protected!
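To check the protection without a browser, you can compare the HTTP status with and without credentials. The following Python sketch uses only the standard library; the function names `basic_auth_header` and `status_code` are hypothetical, and the URL and password are yours to supply.

```python
import base64
import urllib.error
import urllib.request
from typing import Optional


def basic_auth_header(user: str, password: str) -> str:
    """Build the value of an HTTP Basic `Authorization` header."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


def status_code(url: str, user: Optional[str] = None,
                password: Optional[str] = None) -> int:
    """Request `url`, optionally with credentials, and return the HTTP status."""
    request = urllib.request.Request(url)
    if user is not None:
        request.add_header("Authorization", basic_auth_header(user, password or ""))
    try:
        with urllib.request.urlopen(request, timeout=10) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # urllib raises on 4xx/5xx responses; the status code is what we want.
        return error.code
```

With your ELB URL in place, `status_code(url)` should return 401 if nginx is enforcing authentication, and `status_code(url, "user", password)` should return 200 with the password you stored in htpasswd.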

About TrackIt

TrackIt is an international AWS cloud consulting, systems integration, and software development firm headquartered in Marina del Rey, CA.

We have built our reputation on helping media companies architect and implement cost-effective, reliable, and scalable Media & Entertainment workflows in the cloud. These include streaming and on-demand video solutions, media asset management, and archiving, incorporating the latest AI technology to build bespoke media solutions tailored to customer requirements.

Cloud-native software development is at the foundation of what we do. We specialize in Application Modernization, Containerization, Infrastructure as Code and event-driven serverless architectures by leveraging the latest AWS services. Along with our Managed Services offerings which provide 24/7 cloud infrastructure maintenance and support, we are able to provide complete solutions for the media industry.