At TrackIt, we recently helped Fuse Media, a media and entertainment company, implement an AI/ML pipeline that significantly streamlines and accelerates its post-production editing process.


A recurring challenge for companies that handle large volumes of content every day is the need to consistently edit out inappropriate content to meet distribution guidelines and requirements. This often translates into large amounts of human labor dedicated to manually identifying and editing instances of questionable content. Fuse Media, for instance, was dedicating around 6–10 hours of human editing time per piece of content to the curation process.

This article describes how TrackIt implemented an AI/ML pipeline that automates and streamlines the identification of questionable content, which can provide significant time savings to companies that regularly curate content as part of their post-production editing.


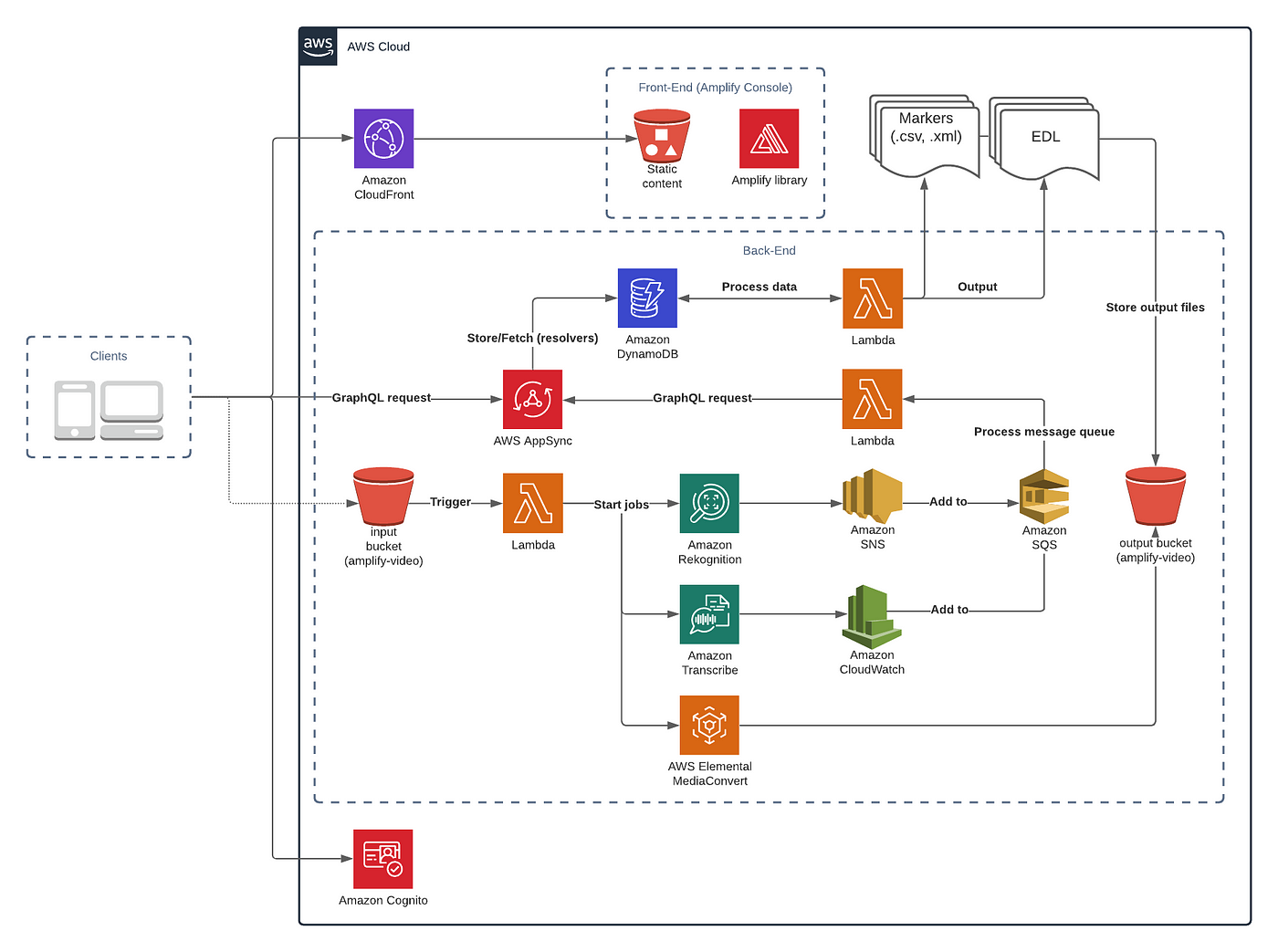

Solution Architecture

AWS Services Used

The following AWS services were used in the AI/ML pipeline:

- Amazon Rekognition: Used to analyze video from the ingested content for segment detection (to detect technical cues and shots) and content moderation (to detect graphic or questionable content).

- Amazon Transcribe: Used to analyze the audio of ingested content. It generates a transcript file from the audio, which is used to filter out unwanted words.

- AWS Amplify Video: Used to provide end-users with video playback in the web UI. Amplify Video is used to create an HLS playlist.

- Amazon CloudFront: AWS’s content delivery network (CDN) used to deliver website content and data.

- Amazon Cognito: Used for user authentication.

- Amazon DynamoDB: Used to store the results of AI/ML jobs (Rekognition, Transcribe).

- AWS Lambda: Used to host code that processes metadata coming from API requests, CloudWatch Events, or SNS topics.

- Amazon CloudWatch: Used to store logs.

- Amazon SQS: Message queuing service that receives job status notifications from Rekognition and Transcribe when a job starts, completes, or fails. Amazon SNS and Amazon CloudWatch Events send their messages to the SQS queue, and AWS Lambda processes them.

- Amazon SNS: Used to send email notifications to end-users and to forward job results from Rekognition, MediaConvert, and Transcribe to the SQS queue.

- AWS AppSync: Serves as the GraphQL API that handles user and internal requests to save, fetch, and delete metadata, as well as to generate specific assets.

- Amazon S3: Used to ingest video content and store generated assets (edit decision lists (EDLs), transcripts, marker files, HLS playlists).
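
To illustrate how the Rekognition and Transcribe outputs can be turned into a list of questionable moments, here is a minimal sketch, not the pipeline's actual code. It assumes the standard Transcribe output JSON (`results.items`, where spoken words carry `start_time`/`end_time` in seconds as strings) and the `ModerationLabels` list returned by Rekognition's `GetContentModeration` (each detection with a `Timestamp` in milliseconds). The `Flag` type, the function names, the blocklist, the 80% confidence threshold, and the fixed one-second window per visual detection are all hypothetical choices.

```python
import json
from typing import NamedTuple


class Flag(NamedTuple):
    """A questionable moment in the video, with times in milliseconds."""
    start_ms: int
    end_ms: int
    reason: str


def flag_words(transcribe_json: str, blocklist: set[str]) -> list[Flag]:
    """Flag blocklisted words in an Amazon Transcribe output document."""
    items = json.loads(transcribe_json)["results"]["items"]
    flags = []
    for item in items:
        # Punctuation items have no timestamps; only spoken words are checked.
        if item["type"] != "pronunciation":
            continue
        word = item["alternatives"][0]["content"].lower()
        if word in blocklist:
            flags.append(Flag(
                start_ms=round(float(item["start_time"]) * 1000),
                end_ms=round(float(item["end_time"]) * 1000),
                reason=f"word:{word}",
            ))
    return flags


def flag_moderation(labels: list[dict], min_confidence: float = 80.0,
                    span_ms: int = 1000) -> list[Flag]:
    """Flag Rekognition content-moderation detections above a confidence threshold.

    Each detection is reported at a single Timestamp (ms); this sketch assumes
    it covers a fixed `span_ms` window starting at that point.
    """
    flags = []
    for detection in labels:
        label = detection["ModerationLabel"]
        if label["Confidence"] >= min_confidence:
            start = detection["Timestamp"]
            flags.append(Flag(start, start + span_ms, f"visual:{label['Name']}"))
    return flags
```

Keeping both detectors on the same millisecond-based `Flag` shape means audio and visual findings can later be sorted and merged together before any editing assets are produced.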

How the Pipeline Works

An asset (video) is uploaded to the input S3 bucket. If no record of the video exists in the content database yet, AI/ML jobs using Amazon Rekognition and Amazon Transcribe are triggered, along with a MediaConvert job that creates an HLS playlist that end-users later use to curate the content in the front-end UI.
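
The deduplication check at upload time can be sketched as follows. This is a minimal illustration under stated assumptions: the function receives a standard S3 event notification, an in-memory set (`known_assets`) stands in for the content database, assets are identified by a hypothetical "bucket/key" string, and the entries in `JOBS` are hypothetical placeholders for the actual Rekognition, Transcribe, and MediaConvert start calls.

```python
from urllib.parse import unquote_plus

# Hypothetical job identifiers standing in for the real StartSegmentDetection,
# StartContentModeration, StartTranscriptionJob, and MediaConvert CreateJob calls.
JOBS = ("segment_detection", "content_moderation", "transcription", "hls_packaging")


def plan_jobs(s3_event: dict, known_assets: set[str]) -> dict[str, list[str]]:
    """Return the jobs to start for each new video in an S3 event notification.

    `known_assets` stands in for the content database: a video whose
    "bucket/key" is already recorded is skipped so it is not processed twice.
    """
    plan: dict[str, list[str]] = {}
    for record in s3_event["Records"]:
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications (spaces as '+').
        key = unquote_plus(record["s3"]["object"]["key"])
        asset_id = f"{bucket}/{key}"
        if asset_id not in known_assets:
            plan[asset_id] = list(JOBS)
    return plan
```

For example, an event containing `shows/episode+01.mp4` and an already-recorded `shows/old.mp4` would yield a plan only for `input-bucket/shows/episode 01.mp4` (with `input-bucket` as a hypothetical bucket name). Returning a plan instead of starting jobs directly keeps the decision logic testable without AWS credentials.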

The Rekognition, Transcribe, and MediaConvert jobs are asynchronous: each one runs independently and finishes on its own schedule, so they can complete in any order. When a job completes, a notification is sent through SNS or CloudWatch Events, and both notification services are configured to forward their notifications to an SQS queue.
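
Because notifications from different services arrive in the same queue with different shapes, the consumer has to recognize each one. The sketch below, which is an assumption rather than Fuse Media's code, maps three formats to one common record: an SNS envelope wrapping a Rekognition Video completion message (assuming raw message delivery is not enabled), and CloudWatch Events (EventBridge) events from Transcribe and MediaConvert. The function name, the output keys, and the `completed`/`failed`/`in_progress` states are hypothetical.

```python
import json

# Each service reports terminal states with its own status strings.
COMPLETED = {"SUCCEEDED", "COMPLETED", "COMPLETE"}
FAILED = {"FAILED", "ERROR", "CANCELED"}


def normalize_notification(sqs_body: str) -> dict:
    """Map a job-status message taken from the SQS queue to one common shape."""
    message = json.loads(sqs_body)
    # Unless raw message delivery is enabled, SNS wraps the payload in an
    # envelope whose "Message" field is itself a JSON string.
    if message.get("Type") == "Notification" and "Message" in message:
        message = json.loads(message["Message"])

    source = message.get("source")
    if source == "aws.transcribe":  # CloudWatch Events / EventBridge event
        service = "transcribe"
        job_id = message["detail"]["TranscriptionJobName"]
        status = message["detail"]["TranscriptionJobStatus"]
    elif source == "aws.mediaconvert":  # CloudWatch Events / EventBridge event
        service = "mediaconvert"
        job_id = message["detail"]["jobId"]
        status = message["detail"]["status"]
    elif "JobId" in message and "API" in message:
        # Rekognition Video completion message, e.g. API = "StartContentModeration".
        service = "rekognition:" + message["API"]
        job_id = message["JobId"]
        status = message["Status"]
    else:
        raise ValueError("unrecognized notification format")

    if status in COMPLETED:
        state = "completed"
    elif status in FAILED:
        state = "failed"
    else:
        state = "in_progress"
    return {"service": service, "job_id": job_id, "state": state}
```

Normalizing early means the rest of the pipeline never needs to know which service, or which notification channel, produced a given status update.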

Each time a new message arrives in the SQS queue, a Lambda function is triggered to process it. The resulting records are saved in Amazon DynamoDB through AppSync. AppSync is used with Amplify in this solution because Amplify provides GraphQL directives that generate CRUD operations, which shortens parts of the development process.
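
A queue consumer of this kind can be sketched as follows. This is a hypothetical illustration: the `createJobResult` mutation and `CreateJobResultInput` type assume a schema type named `JobResult` with Amplify's `@model` directive, message bodies are assumed to already be JSON objects matching that input type, and `send` is an injected callable standing in for a signed HTTP request to the AppSync endpoint. Returning `batchItemFailures` lets SQS redeliver only the failed messages, provided `ReportBatchItemFailures` is enabled on the Lambda event source mapping.

```python
import json
from typing import Callable

# Hypothetical mutation: if the schema declared `type JobResult @model`,
# Amplify would generate a `createJobResult` mutation and its input type.
CREATE_JOB_RESULT = """
mutation CreateJobResult($input: CreateJobResultInput!) {
  createJobResult(input: $input) { id }
}
"""


def build_request(result: dict) -> dict:
    """Build the GraphQL request body that would be POSTed to the AppSync endpoint."""
    return {"query": CREATE_JOB_RESULT, "variables": {"input": result}}


def handle_sqs_batch(event: dict, send: Callable[[dict], None]) -> dict:
    """Process one SQS-triggered Lambda batch, reporting failures per message.

    `send` is injected so the logic can run without AWS; a failed message is
    reported by its messageId and the rest of the batch still succeeds.
    """
    failures = []
    for record in event["Records"]:
        try:
            result = json.loads(record["body"])
            send(build_request(result))
        except Exception:  # malformed body or failed API call: retry this message only
            failures.append({"itemIdentifier": record["messageId"]})
    return {"batchItemFailures": failures}
```

A real Lambda entry point would wrap this, for example `def lambda_handler(event, context): return handle_sqs_batch(event, send_to_appsync)`, where `send_to_appsync` is a hypothetical helper that signs and sends the request. Reporting failures per message avoids reprocessing an entire batch because of a single bad notification.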

Once records are saved, assets such as EDL files and marker files (.xml, .csv) can be generated on demand through the API. Cognito handles user authentication, and the Amplify Console uses Amazon CloudFront, AWS’s CDN, to distribute content. All processed assets are stored in an output S3 bucket.
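
On-demand EDL generation can be sketched as follows. This is a minimal illustration, not the production generator: it assumes flagged spans arrive as millisecond intervals, a constant integer frame rate (30 fps, non-drop frame), and a simplified CMX 3600-style layout with one cut event per merged span. Real editing systems may need drop-frame timecode for 29.97 fps material and stricter column formatting. The names `Segment`, `merge`, `timecode`, and `to_edl` are hypothetical.

```python
from typing import NamedTuple


class Segment(NamedTuple):
    """A flagged span of the video, with times in milliseconds."""
    start_ms: int
    end_ms: int
    reason: str


def merge(segments: list[Segment]) -> list[Segment]:
    """Merge overlapping or touching segments so each becomes one EDL event."""
    merged: list[Segment] = []
    for seg in sorted(segments):
        if merged and seg.start_ms <= merged[-1].end_ms:
            last = merged[-1]
            reasons = last.reason.split("; ")
            if seg.reason not in reasons:
                reasons.append(seg.reason)
            merged[-1] = Segment(last.start_ms, max(last.end_ms, seg.end_ms), "; ".join(reasons))
        else:
            merged.append(seg)
    return merged


def timecode(ms: int, fps: int = 30) -> str:
    """Convert milliseconds to a non-drop-frame timecode (HH:MM:SS:FF)."""
    total_frames = ms * fps // 1000
    seconds, frames = divmod(total_frames, fps)
    return f"{seconds // 3600:02d}:{seconds // 60 % 60:02d}:{seconds % 60:02d}:{frames:02d}"


def to_edl(title: str, segments: list[Segment], fps: int = 30) -> str:
    """Render flagged segments as a simplified CMX 3600-style EDL."""
    lines = [f"TITLE: {title}", "FCM: NON-DROP FRAME", ""]
    for number, seg in enumerate(merge(segments), start=1):
        start, end = timecode(seg.start_ms, fps), timecode(seg.end_ms, fps)
        # Event number, reel name, track (V = video), transition (C = cut),
        # then source in/out and record in/out (identical in this sketch).
        lines.append(f"{number:03d}  AX       V     C        {start} {end} {start} {end}")
        # Lines beginning with '*' are comments; this one records why the span was flagged.
        lines.append(f"* {seg.reason}")
        lines.append("")
    return "\n".join(lines)
```

Merging before rendering keeps an editor from seeing several back-to-back events for what is really one questionable passage, while the comment line preserves every reason the span was flagged.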

Building a Custom Web-Based Video Player for Content Curation and Review

Fuse Media asked for the following keyboard command shortcuts in their video player:

Services used for the front-end:

- Amazon CloudFront: Used to deliver the front end. CloudFront is connected to a pipeline that deploys the code whenever a commit is pushed. For example, if a commit is pushed to the ‘dev’ branch, the CloudFront page connected to that branch is updated, and the client can see the changes in about 30 minutes.

- Amazon Cognito: Used for user authentication.

The first step in implementing the front-end video player was to connect CloudFront and Cognito to the pipeline. Developers then had to choose a technology for the front-end; the TrackIt team used React for Fuse Media’s video player.

The implementation of the front-end involved the following steps:

1. Understanding the flow, i.e., the required navigation sequence

2. Identifying the different pages and shortcuts that are needed for the video player

3. Creating a custom design that fulfills these requirements

Once implemented, the web-based video player gives all teams within an organization a single, easy-to-use web UI where they can log in and complete their work in a few clicks, without having to involve other personnel.

Conclusion: A Solution For Any Company That Needs to Review Content on a Regular Basis

The AI/ML content curation pipeline can help companies save time in their post-production editing processes, and it also improves productivity and collaboration between teams by giving them a single, centralized platform to work on. Companies of all sizes that dedicate excessive human resources to editing content can use the pipeline discussed in this article to automate and streamline their post-production editing efforts.

About TrackIt

TrackIt is an international AWS cloud consulting, systems integration, and software development firm headquartered in Marina del Rey, CA.

We have built our reputation on helping media companies architect and implement cost-effective, reliable, and scalable Media & Entertainment workflows in the cloud. These include streaming and on-demand video solutions, media asset management, and archiving, incorporating the latest AI technology to build bespoke media solutions tailored to customer requirements.

Cloud-native software development is at the foundation of what we do. We specialize in Application Modernization, Containerization, Infrastructure as Code, and event-driven serverless architectures by leveraging the latest AWS services. Along with our Managed Services offerings, which provide 24/7 cloud infrastructure maintenance and support, we are able to provide complete solutions for the media industry.