Written by Thibaut Cornolti, Engineering Manager at TrackIt

What is Social Listening?

Understanding audience behavior on social media has become crucial for brands looking to stay relevant and competitive. Social listening refers to the process of monitoring, analyzing, and extracting insights from online conversations. Traditionally, this has been applied to text-based platforms like Twitter and Facebook, where tracking keywords and sentiment is relatively straightforward.

TikTok, however, presents new challenges due to its video-first nature, requiring more advanced analysis techniques. The sections below explore how social listening on TikTok can help brands uncover valuable insights and make data-driven decisions.

Challenges with Analyzing TikTok Content

Analyzing TikTok content is more complex than traditional social media monitoring due to the platform’s reliance on short-form videos. Unlike text-based posts, TikTok videos include a mix of audio, visuals, captions, and user interactions, all of which contribute to meaning.

Extracting useful insights requires AI-driven tools capable of processing these different elements simultaneously. The goal is to categorize and analyze trends, sentiment, and engagement patterns with accuracy. A structured approach allows brands to transform raw TikTok data into actionable marketing strategies.

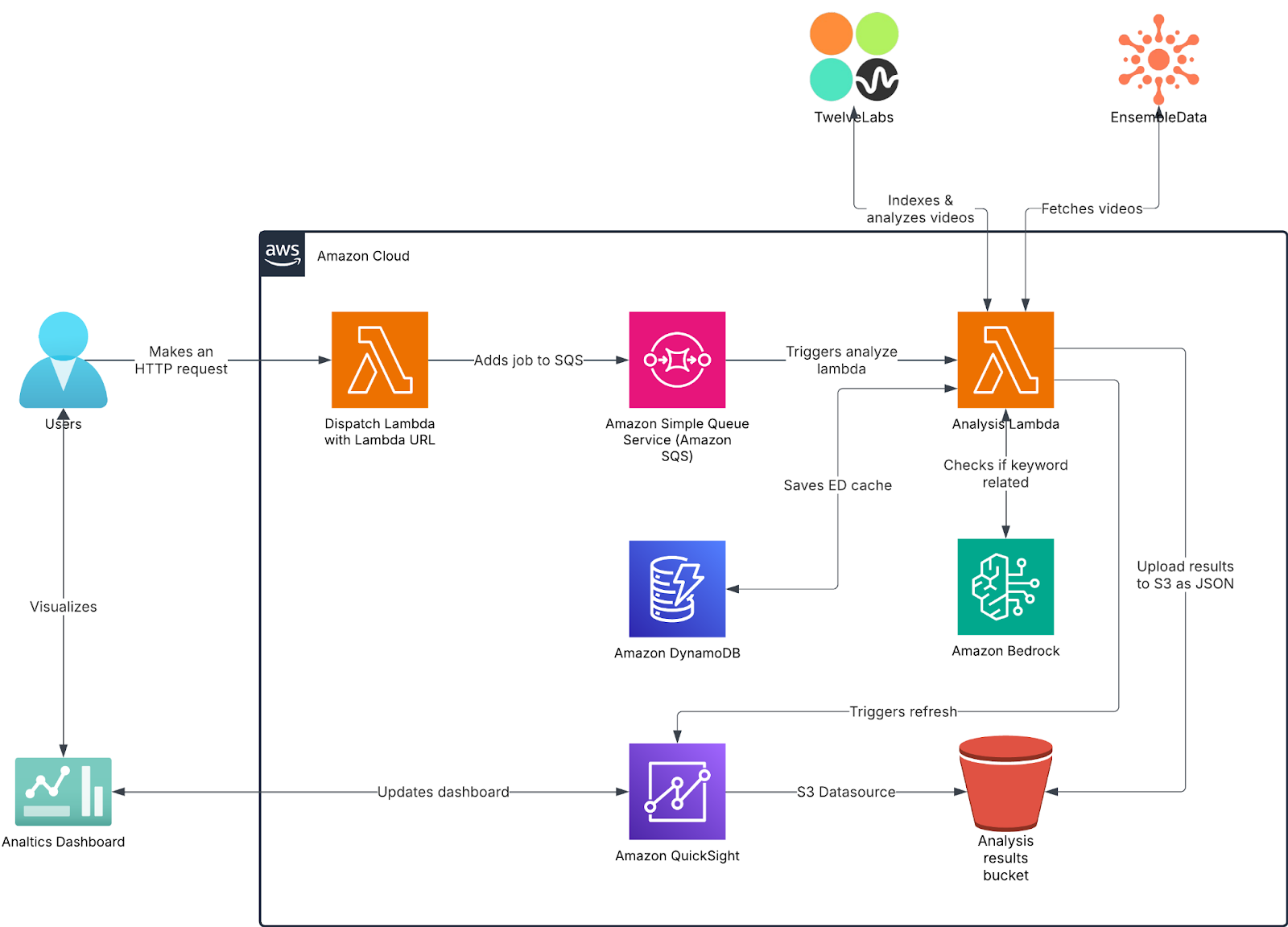

Social Listening Solution Built by TrackIt

To address these challenges, TrackIt developed a modular, serverless pipeline that automates the analysis of TikTok content at scale. The solution ingests user-generated video data, evaluates its relevance to specific keywords, detects emotional tones, and aggregates the findings into a visual dashboard for strategic review. Described below is the architecture and workflow of this TikTok social listening system.

Solution Architecture

Services Used

The following services were used to build the solution:

- AWS Lambda: Hosts two functions: a dispatch function that receives incoming HTTP requests (via a Lambda function URL) and an analysis function that processes data and orchestrates external API calls.

- Amazon SQS (Simple Queue Service): Buffers requests between the dispatch and analysis Lambda functions to handle concurrency and manage API rate limits. It is used to ensure that only one analysis Lambda function runs at a time.

- EnsembleData: Third-party API used to scrape (programmatically extract) social media posts from specified users, including metadata such as video URLs and descriptions.

- Amazon DynamoDB: Serves as a cache to avoid redundant EnsembleData requests.

- Amazon Bedrock: Analyzes post descriptions to determine relevance based on a target keyword.

- TwelveLabs: The main API used in this workflow. It indexes video content and returns structured data identifying the emotions present in each clip, along with associated confidence scores.

- Amazon S3: Stores the analysis output in structured JSON format.

- Amazon QuickSight: Visualizes the aggregated results using updated data from S3.
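The caching role described for DynamoDB above can be illustrated with a minimal cache-aside sketch. This is not TrackIt's implementation: the function name `get_posts_cached`, the one-hour TTL, and the record layout are assumptions made for illustration, and a plain dictionary stands in for the DynamoDB table so the example runs locally.

```python
import time
from typing import Callable, MutableMapping


def get_posts_cached(
    user: str,
    cache: MutableMapping[str, dict],
    fetch_posts: Callable[[str], list],
    ttl_seconds: float = 3600,  # assumed freshness window, not from the article
    now: Callable[[], float] = time.time,
) -> list:
    """Return a user's posts, calling the scraping API only on a cache miss."""
    entry = cache.get(user)
    if entry is not None and now() - entry["fetched_at"] < ttl_seconds:
        # Cache hit: skip the (rate-limited, billable) external request.
        return entry["posts"]
    # Cache miss or stale entry: fetch and store the fresh result.
    posts = fetch_posts(user)
    cache[user] = {"posts": posts, "fetched_at": now()}
    return posts
```

In a deployed version, `cache` would be replaced by reads and writes against the DynamoDB table, and `fetch_posts` by the EnsembleData client call.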

TwelveLabs – How It Works

TwelveLabs is a multimodal video understanding platform designed to extract high-level insights such as sentiment, emotions, objects, and actions from video content. It uses a combination of vision-language models and temporal analysis to process and interpret videos beyond simple metadata. Once a video is indexed, the platform allows for querying via natural language or structured prompts to retrieve nuanced information. Here’s a simplified breakdown of how it works:

- Video Ingestion: Raw video files are uploaded or streamed to TwelveLabs, where they are pre-processed and stored securely.

- Multimodal Embedding: The video is segmented and encoded using a model that captures visual frames, audio signals, textual elements (e.g., on-screen text), and temporal dynamics.

- Indexing: These embeddings are indexed in a vector database, allowing for fast similarity searches and semantic queries.

- Prompting: Users or systems can prompt the model with structured queries (e.g., “What is the mood of the speaker?”) to receive a structured JSON or XML response.

- Confidence Scoring: The output includes key-value pairs with labels (e.g., emotions, actions) and associated confidence scores.

- Scalability: The platform is designed to handle thousands of videos concurrently with asynchronous processing and API-first architecture.
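To make the prompting and confidence-scoring steps above more concrete, the sketch below shows one way a caller might turn the model's text output into a clean emotion-to-score mapping. It does not call the TwelveLabs API. The function name `parse_emotion_scores`, the assumption that scores fall between 0 and 1, and the 0.5 default threshold are illustrative choices, not documented behavior.

```python
import json


def parse_emotion_scores(raw: str, min_confidence: float = 0.5) -> dict[str, float]:
    """Extract {emotion: confidence} from model text that contains one JSON object."""
    # Model output may include extra prose around the JSON, so isolate the object.
    start, end = raw.find("{"), raw.rfind("}")
    if start == -1 or end < start:
        raise ValueError("no JSON object found in model output")
    data = json.loads(raw[start:end + 1])

    scores: dict[str, float] = {}
    for label, value in data.items():
        # Skip anything that is not a plain number (bool is a subclass of int).
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            continue
        # Assumption: confidence scores are expressed on a 0-1 scale.
        if 0.0 <= value <= 1.0 and value >= min_confidence:
            scores[label.lower()] = float(value)
    # Highest-confidence emotions first.
    return dict(sorted(scores.items(), key=lambda kv: kv[1], reverse=True))
```

Filtering on a threshold before aggregation keeps low-confidence labels from cluttering the dashboard; the right cutoff depends on the workload and would need to be tuned.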

Prompt Engineering for Claude and Nova

Claude and Nova are two large language models (LLMs) available through Amazon Bedrock. Claude, developed by Anthropic, is known for its strong reasoning and safety alignment. Nova is Amazon’s proprietary family of foundation models, designed to handle a wide range of enterprise use cases efficiently. Both are accessible via Bedrock, which also hosts models like Cohere’s Command R+ and AI21’s Jurassic.

Choosing the Right Prompt Builder

When working with LLMs, selecting the appropriate tooling is key to building effective prompts. Claude and Amazon Nova are trained differently and respond best to model-specific strategies. It is advisable to use their respective prompt-building tools (the Workbench in the Anthropic Console for Claude and Prompt Management in Amazon Bedrock for Nova) to structure, test, and refine prompts. These tools provide a controlled environment for iteration and evaluation.

Best Practices for Prompt Design

Claude performs well with XML-formatted prompts, especially when metadata is embedded and an output schema is defined. Including examples, using prefilling, and specifying expected output formats can improve consistency. For complex tasks, prompting the model to think step by step or listing clear instructions enhances reasoning. Nova is generally more JSON-friendly but still benefits from structured prompts. For more guidance, refer to the Claude documentation and Nova documentation.
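As an illustration of these formatting preferences, the sketch below builds the same keyword-relevance request twice: once with XML tags, in the style suggested for Claude, and once as a JSON document, in the style suggested for Nova. The tag names, JSON keys, and instruction wording are hypothetical and are not the prompts used in the TrackIt pipeline.

```python
import json
from xml.sax.saxutils import escape

TASK = (
    "Decide whether each post relates to the keyword, "
    "even if the keyword is not mentioned explicitly."
)


def build_claude_prompt(keyword: str, descriptions: list[str]) -> str:
    """XML-tagged prompt with an explicit output format (tag names are illustrative)."""
    posts = "\n".join(
        f'  <post id="{i}">{escape(text)}</post>'  # escape &, <, > in user text
        for i, text in enumerate(descriptions)
    )
    return (
        f"<task>{TASK}</task>\n"
        f"<keyword>{escape(keyword)}</keyword>\n"
        f"<posts>\n{posts}\n</posts>\n"
        '<output_format>Return only a JSON array such as '
        '[{"id": 0, "relevant": true}]</output_format>'
    )


def build_nova_prompt(keyword: str, descriptions: list[str]) -> str:
    """JSON-structured prompt carrying the same information (keys are illustrative)."""
    request = {
        "task": TASK,
        "keyword": keyword,
        "posts": [{"id": i, "description": text} for i, text in enumerate(descriptions)],
        "output_format": [{"id": 0, "relevant": True}],
    }
    return json.dumps(request, ensure_ascii=False, indent=2)
```

Either string would then be passed as the user message in a Bedrock request. Asking for the same output shape from both models keeps the downstream parsing code identical.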

Workflow Steps

Step 1: An HTTP request is made to the dispatch Lambda via its Lambda function URL. The request includes a payload containing the target keyword and a list of user handles.

Step 2: The dispatch Lambda places the HTTP payload as a message into an Amazon SQS queue and returns an HTTP 200 response to confirm successful receipt.

Step 3: The analysis Lambda consumes the new message from the SQS queue.

Step 4: The analysis Lambda parses the payload and extracts the list of users. It then retrieves the latest posts (including raw video URLs and descriptions) from these users using the EnsembleData API.

Step 5: The list of post descriptions is sent to Amazon Bedrock for keyword relevance analysis using the Nova language model. Nova evaluates each description to determine whether it relates to the target keyword initially provided in the payload. This involves identifying semantic matches, even if the keyword is not explicitly mentioned. Based on Nova’s output, the Lambda function filters and retains only the videos whose descriptions are deemed relevant to the keyword.

Step 6: The Lambda indexes all relevant videos in TwelveLabs. It prompts the model to return a JSON object containing a key-value mapping of emotions and confidence scores for each video.

Step 7: All the results are combined into a single JSON file and uploaded to S3.

Step 8: The Lambda refreshes the QuickSight dataset to update the results dashboard.
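The control flow of the steps above can be sketched in a few lines of Python. This is a simplified outline under stated assumptions rather than the deployed code: the payload field names (`keyword`, `users`), the post fields (`description`, `video_url`), and the 400 response for malformed input are hypothetical, and the external services (SQS, EnsembleData, Bedrock, TwelveLabs) are passed in as plain callables so the logic can be run and tested without AWS credentials.

```python
import json
from typing import Callable


def dispatch_handler(event: dict, send_message: Callable[[str], None]) -> dict:
    """Steps 1-2: accept the HTTP payload, enqueue it, and acknowledge receipt."""
    # Assumption: the function URL event carries the JSON payload as plain text in "body".
    payload = json.loads(event.get("body") or "{}")
    if not payload.get("keyword") or not payload.get("users"):
        # Input validation is an addition for illustration; the article does not describe it.
        return {"statusCode": 400, "body": json.dumps({"error": "keyword and users are required"})}
    send_message(json.dumps(payload))  # in AWS: a thin wrapper around the SQS client
    return {"statusCode": 200, "body": json.dumps({"status": "queued"})}


def analyze_message(
    message_body: str,
    fetch_posts: Callable[[str], list],          # stand-in for the EnsembleData call
    is_relevant: Callable[[str, str], bool],     # stand-in for the Nova relevance check
    detect_emotions: Callable[[str], dict],      # stand-in for TwelveLabs indexing + prompt
) -> list:
    """Steps 3-7: fetch posts, keep the relevant ones, and collect emotion scores."""
    payload = json.loads(message_body)
    keyword = payload["keyword"]
    results = []
    for user in payload["users"]:
        for post in fetch_posts(user):
            if not is_relevant(post["description"], keyword):
                continue  # Step 5: drop posts unrelated to the keyword
            results.append({
                "user": user,
                "video_url": post["video_url"],
                "emotions": detect_emotions(post["video_url"]),  # Step 6
            })
    return results  # Step 7: serialize with json.dumps and upload as one file
```

In a deployed version, `send_message` would wrap the boto3 SQS client's `send_message` call, the list returned by `analyze_message` would be written to S3 with `put_object`, and the dataset refresh in Step 8 would be triggered through the QuickSight `create_ingestion` API.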

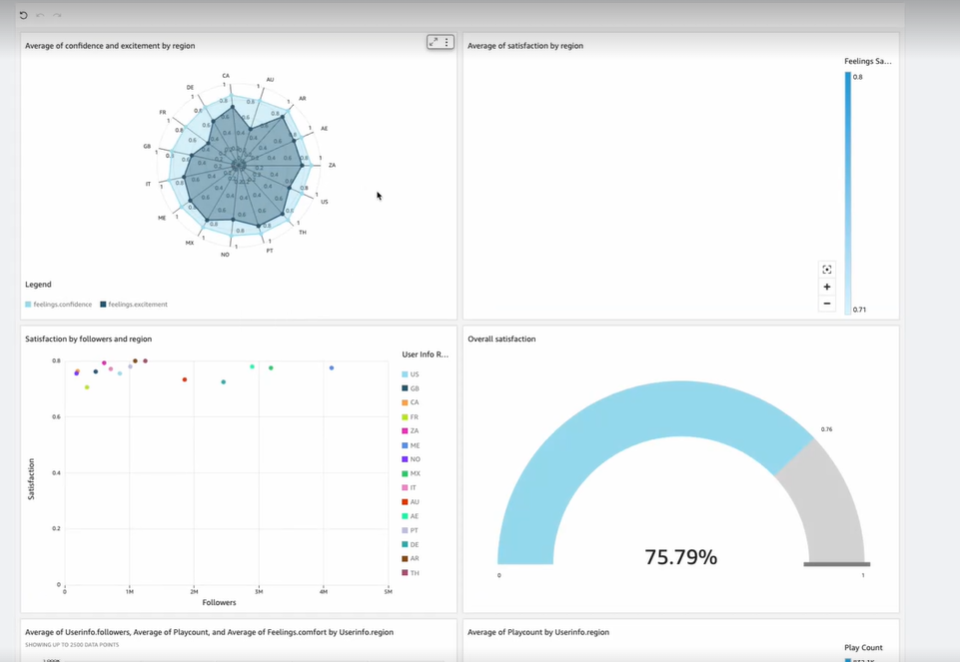

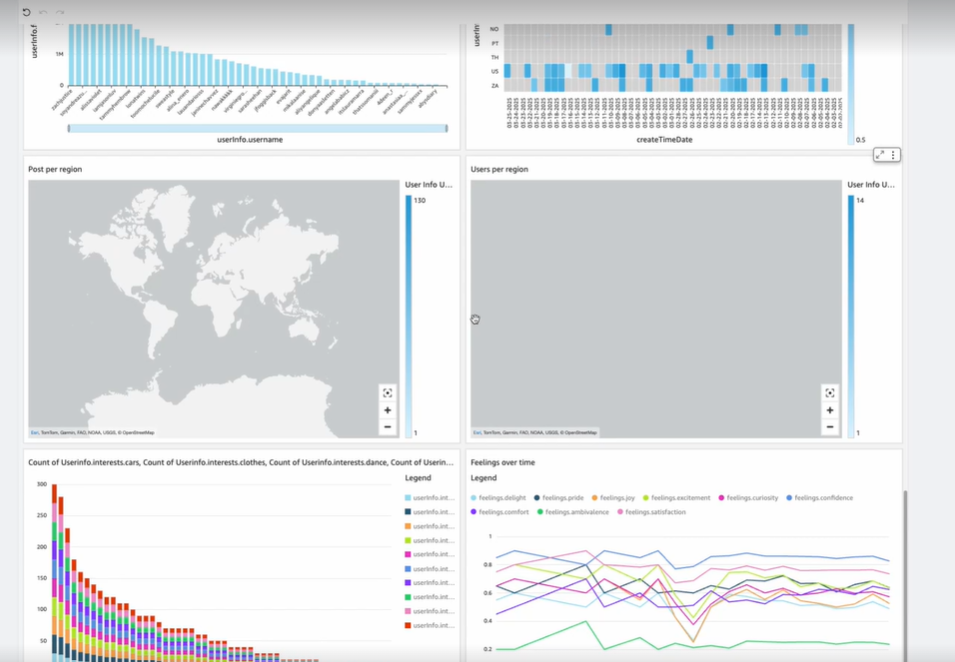

QuickSight Dashboard

Total Cost of Ownership

The cost model for this pipeline is designed to be both scalable and predictable:

- Flat Fees: $200/month. This includes fixed infrastructure components such as the database.

- Pay-As-You-Go: $0.10 per minute of processed video. This covers the cost of video analysis via TwelveLabs.

Example Calculation

For a workload of 1,000 videos per month, with each video averaging 60 seconds:

- Flat Fee: $200

- Variable Cost: 1,000 videos × 1 minute × $0.10 = $100

- Total Estimated Monthly Cost: $300

This pricing structure allows for flexibility as volume scales, while keeping base operational costs predictable.
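The calculation above generalizes to other volumes. The short sketch below encodes the two figures quoted in this section (the $200 flat fee and the $0.10 per-minute rate); the function name `estimate_monthly_cost` is illustrative, and `Decimal` is used only to avoid floating-point rounding in currency values.

```python
from decimal import Decimal

FLAT_FEE_USD = Decimal("200")          # fixed monthly infrastructure cost
RATE_PER_MINUTE_USD = Decimal("0.10")  # per minute of processed video


def estimate_monthly_cost(videos: int, avg_seconds: int) -> Decimal:
    """Flat fee plus pay-as-you-go cost for the processed video minutes."""
    minutes = Decimal(videos) * Decimal(avg_seconds) / Decimal(60)
    return FLAT_FEE_USD + minutes * RATE_PER_MINUTE_USD


if __name__ == "__main__":
    # 1,000 videos averaging 60 seconds each, as in the example above.
    print(estimate_monthly_cost(1000, 60))
```

For the workload in the example, the function returns a total of $300, matching the figure above.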

Conclusion

This pipeline demonstrates how generative AI can be applied to extract actionable insights from video-first platforms like TikTok—something that traditional text-based social listening tools are not equipped to handle. By combining event-driven architecture with targeted prompt engineering for models like Claude and Nova, the solution efficiently processes multimedia content at scale. It highlights the potential of GenAI not just for content analysis, but as a foundation for building more adaptive, intelligent marketing and monitoring tools across industries.

About TrackIt

TrackIt is an international AWS cloud consulting, systems integration, and software development firm headquartered in Marina del Rey, CA.

We have built our reputation on helping media companies architect and implement cost-effective, reliable, and scalable Media & Entertainment workflows in the cloud. These include streaming and on-demand video solutions, media asset management, and archiving, incorporating the latest AI technology to build bespoke media solutions tailored to customer requirements.

Cloud-native software development is at the foundation of what we do. We specialize in Application Modernization, Containerization, Infrastructure as Code, and event-driven serverless architectures by leveraging the latest AWS services. Along with our Managed Services offerings, which provide 24/7 cloud infrastructure maintenance and support, we are able to provide complete solutions for the media industry.