Written by Alexandre Sauner, Software Engineer at TrackIt

Quality assurance (QA) is a critical component of the software development lifecycle, ensuring that applications are reliable, secure, and meet stakeholder expectations. A well-structured QA strategy integrates testing and validation throughout the development process, minimizing defects and enhancing software quality. Outlined below are QA best practices that help establish a robust and scalable approach to software testing.

Integrating QA Early in Development

A proactive QA strategy begins at the earliest stages of development. By incorporating QA during requirements analysis and design, teams can identify potential issues before they become costly to fix. This approach fosters collaboration between developers, testers, and stakeholders, ensuring that quality is built into the product from the outset.

Before development begins, define a clear QA strategy that specifies which types of tests will be written, when they will be implemented, and how they will be executed. During feature development, tests can even be written before the code itself, a practice known as test-driven development (TDD). Because the expected output is known in advance, the tests can be created first; they fail initially, and developers then write the corresponding code until all tests pass, so functionality is validated from the start.
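As a rough illustration of the test-first flow, the sketch below places the tests above the code they exercise. The function `apply_discount` and its rounding rule are hypothetical, chosen only for this example, and the `test_` naming assumes a runner such as pytest (the functions can also be called directly).

```python
# Step 1: the tests are written first. Until apply_discount exists and
# behaves correctly, each of them fails.
def test_applies_percentage():
    assert apply_discount(2000, 25) == 1500


def test_zero_percent_keeps_price():
    assert apply_discount(999, 0) == 999


def test_rejects_out_of_range_percent():
    try:
        apply_discount(1000, 120)
    except ValueError:
        return
    raise AssertionError("expected ValueError for percent > 100")


# Step 2: the implementation is written until every test above passes.
def apply_discount(price_cents: int, percent: int) -> int:
    """Return the price after a percentage discount, rounded down to whole cents."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price_cents * (100 - percent) // 100
```

Working in integer cents avoids floating-point rounding surprises, which keeps the expected values in the tests exact.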

With a solid QA foundation established early, the next step is ensuring rigorous testing at different levels of the system, starting with backend validation.

Backend Testing

Backend testing involves validating business logic, data processing, and integrations. It typically includes:

Unit Testing

Unit tests are easiest to write when the codebase follows a structured architecture such as hexagonal architecture (also known as ports and adapters), which allows each component to be tested in isolation, using mock repositories where necessary. Unit tests can be categorized by the side of the architecture they exercise: the Driving Side and the Driven Side.

- Driving Side Testing: This focuses on how the system handles external inputs through its adapters, such as API adapters. For example, in an HTTP API, tests verify that requests are correctly parsed, responses are appropriately formatted, and the proper HTTP status codes are returned for different errors (e.g., 401 for missing or invalid authentication, 403 for forbidden access, and 400 for malformed requests). A key aspect of driving side testing is verifying that the domain logic is called with the expected arguments.

- Driven Side Testing: This ensures that the system correctly interacts with external dependencies like databases and third-party services. Each repository is tested to confirm that it performs the intended function, whether it retrieves, processes, or stores data. For cases where repositories interact with databases, testing is conducted using local database instances within an isolated environment (e.g., DynamoDB, OpenSearch, MongoDB). This prevents conflicts between test cases and ensures the reliability of database interactions.
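The two kinds of test described above might look roughly like the following sketch. Everything named here (`get_order_handler`, `SqliteOrderRepository`, the request shape, the `orders` table) is hypothetical. An in-memory SQLite database stands in for the local DynamoDB, OpenSearch, or MongoDB instance only so that the example runs without external services.

```python
import json
import sqlite3
from unittest.mock import Mock


def get_order_handler(request: dict, service) -> dict:
    """Hypothetical driving adapter: maps an HTTP-like request to a domain call."""
    if "user" not in request:
        return {"status": 401, "body": json.dumps({"error": "unauthenticated"})}
    order_id = str(request.get("params", {}).get("id", ""))
    if not order_id.isdigit():
        return {"status": 400, "body": json.dumps({"error": "invalid id"})}
    try:
        order = service.get_order(int(order_id), user=request["user"])
    except PermissionError:
        return {"status": 403, "body": json.dumps({"error": "forbidden"})}
    return {"status": 200, "body": json.dumps(order)}


class SqliteOrderRepository:
    """Hypothetical driven adapter: stores orders in a SQL table."""

    def __init__(self, conn: sqlite3.Connection):
        self.conn = conn
        conn.execute(
            "CREATE TABLE IF NOT EXISTS orders "
            "(id INTEGER PRIMARY KEY, total_cents INTEGER NOT NULL)"
        )

    def save(self, order_id: int, total_cents: int) -> None:
        self.conn.execute(
            "INSERT OR REPLACE INTO orders (id, total_cents) VALUES (?, ?)",
            (order_id, total_cents),
        )

    def find(self, order_id: int):
        row = self.conn.execute(
            "SELECT id, total_cents FROM orders WHERE id = ?", (order_id,)
        ).fetchone()
        return None if row is None else {"id": row[0], "total_cents": row[1]}


# Driving side: the domain service is mocked, so only the adapter is under test.
def test_handler_calls_domain_with_parsed_arguments():
    service = Mock()
    service.get_order.return_value = {"id": 7, "total_cents": 1500}
    response = get_order_handler({"user": "alice", "params": {"id": "7"}}, service)
    assert response["status"] == 200
    assert json.loads(response["body"]) == {"id": 7, "total_cents": 1500}
    service.get_order.assert_called_once_with(7, user="alice")


def test_handler_maps_errors_to_http_codes():
    service = Mock()
    assert get_order_handler({"params": {"id": "7"}}, service)["status"] == 401
    bad = {"user": "alice", "params": {"id": "abc"}}
    assert get_order_handler(bad, service)["status"] == 400
    service.get_order.assert_not_called()  # rejected before reaching the domain
    service.get_order.side_effect = PermissionError()
    ok = {"user": "alice", "params": {"id": "7"}}
    assert get_order_handler(ok, service)["status"] == 403


# Driven side: each test gets a fresh database, so test cases cannot conflict.
def test_repository_round_trip():
    repo = SqliteOrderRepository(sqlite3.connect(":memory:"))
    repo.save(7, 1500)
    assert repo.find(7) == {"id": 7, "total_cents": 1500}
    assert repo.find(8) is None
```

Creating a new connection inside each driven-side test mirrors the isolation the text calls for: no state leaks from one test case to the next.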

End-to-End (E2E) Testing

End-to-end tests are used to ensure that the integration with the infrastructure is functioning correctly. Ideally, every user action or automation should have at least one test to prevent regressions.

Writing end-to-end tests involves verifying that all components are properly connected. This is particularly important in event-driven and serverless environments.

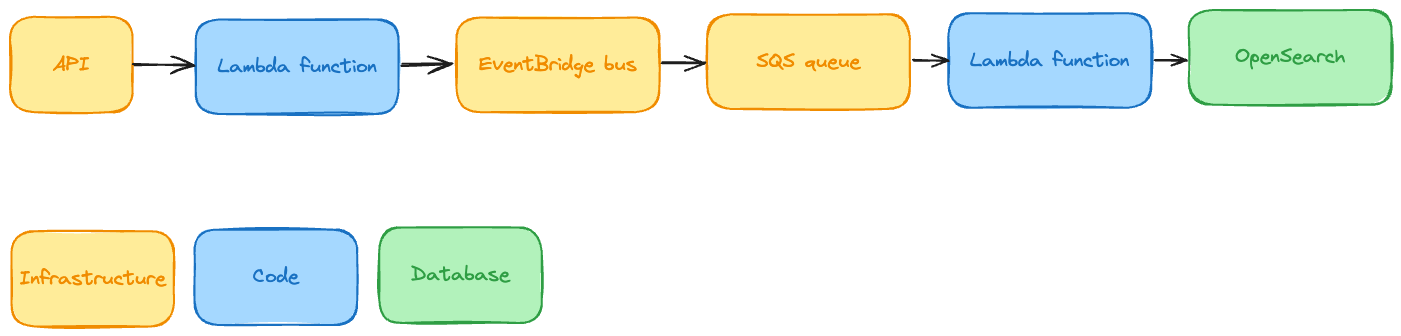

For example, in this architecture:

- The API must be correctly linked to the Lambda function, which could be a Lambda data source or resolver connected to an AppSync GraphQL API.

- The Lambda function must publish the correct message on the event bus and have the necessary permissions to do so.

- An EventBridge rule must forward the correct messages to an SQS queue, which then triggers a Lambda function with the appropriate permissions and network configuration to write to an OpenSearch cluster in a private subnet.
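Because a pipeline like this one is asynchronous, an end-to-end test usually cannot assert on the final state immediately after calling the API; it has to wait for the message to travel through the bus, the queue, and the consumer. One common way to handle this is a small polling helper, sketched below. The helper name `eventually`, its parameters, and the stand-in query are assumptions for illustration; in a real test the `fetch` callable would query the deployed search cluster.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def eventually(
    fetch: Callable[[], Optional[T]],
    timeout_s: float = 30.0,
    interval_s: float = 1.0,
) -> T:
    """Call fetch repeatedly until it returns a non-None value or time runs out."""
    deadline = time.monotonic() + timeout_s
    while True:
        result = fetch()
        if result is not None:
            return result
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout_s} seconds")
        time.sleep(interval_s)


def test_document_is_eventually_indexed():
    # Stand-in for a search query against the deployed stack: it returns None
    # twice (message still in flight), then the indexed document.
    responses = iter([None, None, {"id": "order-7", "status": "indexed"}])
    document = eventually(lambda: next(responses), timeout_s=5.0, interval_s=0.01)
    assert document["status"] == "indexed"
```

A timeout that raises, rather than returning a default, makes a broken link in the chain (a missing permission, a misconfigured rule) show up as a clear test failure instead of a silent pass.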

Frontend Testing

A robust frontend testing strategy ensures that user interfaces behave as expected. Key approaches include:

- Unit Testing: Stores and business logic are tested independently of UI components to ensure accurate state management and proper application behavior.

- Component Testing: Using headless browsers, individual UI components are tested in isolation, ensuring they correctly render data and respond to user interactions.

- End-to-End Testing: Validates complete user workflows with a live backend to catch regressions and ensure system-wide consistency.

Manual Review Processes

While automation is essential, manual reviews provide additional oversight and catch issues that automated tests might miss.

Design Document Reviews

Before implementing new features, a design document outlining technical goals, business rules, and proposed architecture should be created. Peer review ensures alignment with project goals and identifies potential design flaws before development begins.

Code Reviews

Code reviews play a vital role in maintaining high-quality software. This process helps:

- Ensure adherence to coding standards and best practices.

- Identify bugs, security vulnerabilities, and inefficiencies.

- Promote knowledge sharing and maintain consistency across the codebase.

Ticket Validation

Before release, manual testing verifies that new features and bug fixes meet functional requirements and perform well under real-world conditions. This final layer of validation helps ensure a stable and reliable product.

Continuous Improvement

A successful QA strategy evolves with the needs of the project. Regularly reviewing test coverage, analyzing defect trends, and refining testing methodologies contribute to continuous improvement. By fostering a culture of quality and collaboration, teams can build resilient software that meets high standards of reliability and performance.

By integrating QA throughout the development lifecycle and employing a combination of automated testing, manual reviews, and continuous improvement practices, teams can enhance software quality and reduce the risk of defects reaching production.

About TrackIt

TrackIt is an international AWS cloud consulting, systems integration, and software development firm headquartered in Marina del Rey, CA.

We have built our reputation on helping media companies architect and implement cost-effective, reliable, and scalable Media & Entertainment workflows in the cloud. These include streaming and on-demand video solutions, media asset management, and archiving, incorporating the latest AI technology to build bespoke media solutions tailored to customer requirements.

Cloud-native software development is at the foundation of what we do. We specialize in Application Modernization, Containerization, Infrastructure as Code and event-driven serverless architectures by leveraging the latest AWS services. Along with our Managed Services offerings which provide 24/7 cloud infrastructure maintenance and support, we are able to provide complete solutions for the media industry.