Log management means dealing with large volumes of computer-generated files: how to collect them, centralize them, aggregate them and analyze them. Logs are produced everywhere, by many different types of systems. Collecting them is easy, but processing this large amount of data to obtain reliable information is a real challenge. Today we have tools to help us, and this article describes an approach that goes from log collection to log analysis using Sensu and the ELK stack.

Sensu is monitoring software for servers, applications and services. Basically, you have a Sensu server with a web interface, and a Sensu client on each server where you run your services. The client performs the checks you define, collects metrics and sends them to the server. With the web interface, you can see in real time what is happening to your services, whether something goes wrong or everything runs smoothly.

The ELK stack stands for ElasticSearch, Logstash and Kibana. It is a combination of three powerful tools used for log processing and analytics: Logstash receives and processes logs, ElasticSearch is a search engine, and Kibana is a data analytics front end. Used together, they let you analyze huge amounts of data and extract useful information about your system.

In this article, we will explain how to combine these tools into a complete log analytics solution, using Sensu for gathering, Logstash for processing, ElasticSearch for indexing, and Kibana for analyzing. Usually, Filebeat, a tool provided by Elastic, is used to gather logs. Why are we using Sensu instead of Filebeat, you may ask? In our case, we already monitor our infrastructure with Sensu, so each of our servers already runs a Sensu client that checks the server status and returns it to the Sensu server. In other words, we already have a service running on each server that sends information to a master server, and Sensu’s plugin system allows us to customize these checks. Why bother installing another service that does exactly the same job, gathering information on clients and sending it to a master server? Let’s create our own Sensu plugin that gathers the logs on the clients and sends them to the Sensu server, which forwards them to Logstash.

We will use two servers running Ubuntu 16.04 LTS to deploy our solution: one running the Sensu server and the ELK stack, the other running the Sensu client. As we will discuss, this is just an example and other designs are possible, especially if you want a clustered setup.

Setting up Sensu server

First, we need to install the Sensu dependencies: RabbitMQ and Redis. RabbitMQ is a message broker that the Sensu services use to communicate with each other.

$ wget -O- http://www.rabbitmq.com/rabbitmq-signing-key-public.asc | sudo apt-key add -

OK

$ echo "deb http://www.rabbitmq.com/debian/ testing main" | sudo tee /etc/apt/sources.list.d/rabbitmq.list

deb http://www.rabbitmq.com/debian/ testing main

$ sudo apt-get update && sudo apt-get -y install rabbitmq-server

[...]

The RabbitMQ broker is now installed and has already started.

Next, we need to add a Sensu vhost, a user and its permissions so that RabbitMQ can carry messages between the server and the client.

$ sudo rabbitmqctl add_vhost /sensu

Creating vhost "/sensu" ...

$ sudo rabbitmqctl add_user sensu password

Creating user "sensu" ...

$ sudo rabbitmqctl set_permissions -p /sensu sensu ".*" ".*" ".*"

Setting permissions for user "sensu" in vhost "/sensu" ...

We could use RabbitMQ as it is, but the communication would not be encrypted. Let’s create some SSL certificates (they will be valid for 5 years).

$ sudo apt-get install openssl

$ openssl version

OpenSSL 1.0.2g 1 Mar 2016

$ cd $(mktemp -d) && wget http://sensuapp.org/docs/0.25/files/sensu_ssl_tool.tar

[...]

$ tar -xvf sensu_ssl_tool.tar

sensu_ssl_tool/

sensu_ssl_tool/sensu_ca/

sensu_ssl_tool/sensu_ca/openssl.cnf

sensu_ssl_tool/ssl_certs.sh

$ cd sensu_ssl_tool && ./ssl_certs.sh generate

[...]

$ mkdir /etc/rabbitmq/ssl && cp sensu_ca/cacert.pem server/cert.pem server/key.pem /etc/rabbitmq/ssl

Finally, let’s update our RabbitMQ configuration to use these certificates when communicating with Sensu.

Open `/etc/rabbitmq/rabbitmq.config` and paste this content:

[

{rabbit, [

{ssl_listeners, [5671]},

{ssl_options, [{cacertfile,"/etc/rabbitmq/ssl/cacert.pem"},

{certfile,"/etc/rabbitmq/ssl/cert.pem"},

{keyfile,"/etc/rabbitmq/ssl/key.pem"},

{versions, ['tlsv1.2']},

{ciphers, [{rsa,aes_256_cbc,sha256}]},

{verify,verify_none},

{fail_if_no_peer_cert,false}]}

]}

].

RabbitMQ is now ready to be used by Sensu. Let’s set up Redis, a key-value database used for storing data.

$ sudo apt-get -y install redis-server

[...]

$ redis-cli ping

PONG

The Sensu server itself can now be installed.

$ wget -q https://sensu.global.ssl.fastly.net/apt/pubkey.gpg -O- | sudo apt-key add -

OK

$ echo "deb https://sensu.global.ssl.fastly.net/apt sensu main" | sudo tee /etc/apt/sources.list.d/sensu.list

$ sudo apt-get update && sudo apt-get install sensu uchiwa

[...]

The /etc/sensu/config.json file contains the connection information for RabbitMQ and Redis.

{

"rabbitmq": {

"host": "127.0.0.1",

"ssl": {

"cert_chain_file": "/etc/sensu/ssl/cert.pem",

"private_key_file": "/etc/sensu/ssl/key.pem"

},

"port": 5671,

"user": "sensu",

"password": "password",

"vhost": "/sensu"

},

"redis": {

"host": "127.0.0.1",

"port": 6379,

"reconnect_on_error": true,

"auto_reconnect": true

},

"api": {

"host": "127.0.0.1",

"port": 4567

}

}

Let’s copy our SSL certificate.

$ mkdir /etc/sensu/ssl && cp /etc/rabbitmq/ssl/cert.pem /etc/rabbitmq/ssl/key.pem /etc/sensu/ssl/

Sensu is now ready. We can start it and make it start automatically on reboot:

$ sudo update-rc.d sensu-server defaults

$ sudo update-rc.d sensu-api defaults

$ sudo update-rc.d uchiwa defaults

$ sudo /etc/init.d/sensu-server start

* Starting sensu-server [ OK ]

$ sudo /etc/init.d/sensu-api start

* Starting sensu-api [ OK ]

$ sudo /etc/init.d/uchiwa start

uchiwa started.

The server is now up and running. Let’s set up the client on the second server; we will come back to the Sensu server later to configure the checks we want to perform.

Setting up Sensu client

On your second server, you perform the same installation as for the Sensu server: the Sensu client is part of Sensu core, but it is not activated by default.

$ wget -q https://sensu.global.ssl.fastly.net/apt/pubkey.gpg -O- | sudo apt-key add -

OK

$ echo "deb https://sensu.global.ssl.fastly.net/apt sensu main" | sudo tee /etc/apt/sources.list.d/sensu.list

deb https://sensu.global.ssl.fastly.net/apt sensu main

$ sudo apt-get update && sudo apt-get -y install sensu

[...]

Since the Sensu client runs on a remote host, we need to configure it to send information to the server through RabbitMQ.

First, get the SSL certificate we created previously and copy it to the /etc/sensu/ssl folder.

$ mkdir /etc/sensu/ssl && cp cert.pem key.pem /etc/sensu/ssl

We need to create the /etc/sensu/conf.d/rabbitmq.json file on the client.

{

"rabbitmq": {

"host": "rabbitmq-server_ip",

"port": 5671,

"ssl": {

"cert_chain_file": "/etc/sensu/ssl/cert.pem",

"private_key_file": "/etc/sensu/ssl/key.pem"

},

"vhost": "/sensu",

"user": "sensu",

"password": "password"

}

}

Just replace rabbitmq-server_ip with the IP address or hostname of the RabbitMQ server.

Then, create the client definition file, /etc/sensu/conf.d/client.json. This file is very important because it is where you describe your client and enable some checks.

{

"client": {

"name": "client",

"address": "127.0.0.1",

"subscriptions": [ "port_open" ]

}

}

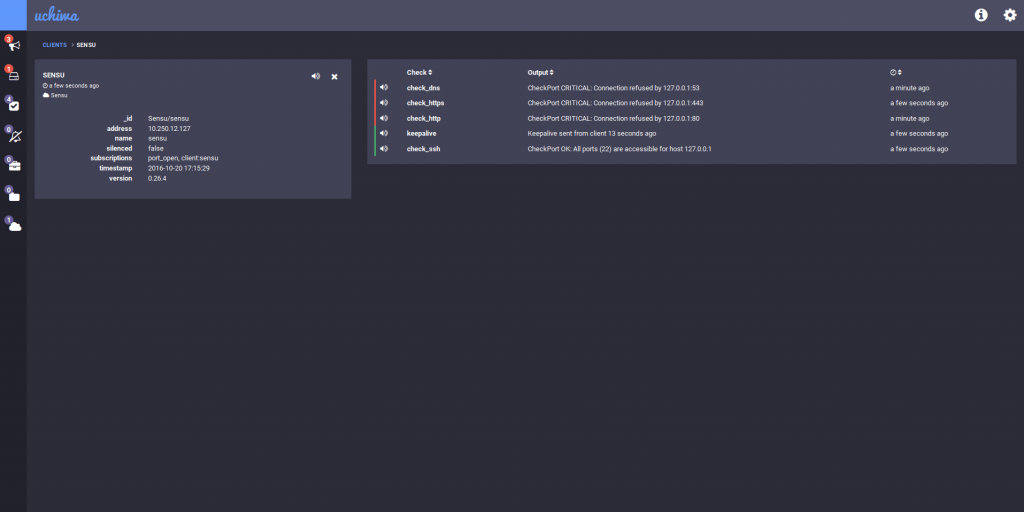

The name and address describe your client. They will be shown in the Uchiwa interface and will help you recognize the client’s metrics in the Elastic Stack.

I will explain subscriptions later, when we configure Sensu to forward metrics. This is enough for now, so start the client.

$ update-rc.d sensu-client defaults

$ /etc/init.d/sensu-client start

* Starting sensu-client [ OK ]

The Sensu installation is finished: you now have a Sensu client and a Sensu server running on two different servers and communicating with each other. If you open http://SENSU_SERVER:3000 in your browser, where SENSU_SERVER is the address of your Sensu server, you should see the client on the web interface. Next, we will configure Sensu to collect metrics from the client and send them to the Elastic Stack. To do that, we first need to install and configure the Elastic Stack on the server.

Setting up Logstash

Before installing the Elastic Stack, we need to install Oracle JDK 8, because the stack is not compatible with Java 9 yet.

$ sudo apt-get install software-properties-common

[...]

$ sudo add-apt-repository ppa:webupd8team/java

[...]

$ sudo apt-get update

[...]

$ sudo apt-get install oracle-java8-installer

[...]

$ java -version

java version "1.8.0_101"

Java(TM) SE Runtime Environment (build 1.8.0_101-b13)

Java HotSpot(TM) 64-Bit Server VM (build 25.101-b13, mixed mode)

Let’s install Logstash.

$ wget -qO - https://packages.elastic.co/GPG-KEY-elasticsearch | sudo apt-key add -

OK

$ echo "deb https://packages.elastic.co/logstash/2.4/debian stable main" | sudo tee -a /etc/apt/sources.list

deb https://packages.elastic.co/logstash/2.4/debian stable main

$ sudo apt-get update && sudo apt-get install logstash

[...]

$ sudo update-rc.d logstash defaults

$ sudo service logstash start

We will configure it later. For now, let’s set up ElasticSearch.

Setting up ElasticSearch

Next, we will install ElasticSearch on the server from its Debian package. Java 1.8 is already installed from the previous step, so we only need to download and install ElasticSearch 2.4.1 and set it up as a service.

$ curl -L -O https://download.elastic.co/elasticsearch/release/org/elasticsearch/distribution/deb/elasticsearch/2.4.1/elasticsearch-2.4.1.deb

$ sudo dpkg -i elasticsearch-2.4.1.deb

$ sudo update-rc.d elasticsearch defaults

$ sudo /etc/init.d/elasticsearch start

[ ok ] Starting elasticsearch (via systemctl): elasticsearch.service.

$ curl 'localhost:9200'

{

"name" : "Angel Salvadore",

"cluster_name" : "elasticsearch",

"cluster_uuid" : "TYVW3XWRTOywkyF6rAa-VQ",

"version" : {

"number" : "2.4.1",

"build_hash" : "c67dc32e24162035d18d6fe1e952c4cbcbe79d16",

"build_timestamp" : "2016-09-27T18:57:55Z",

"build_snapshot" : false,

"lucene_version" : "5.5.2"

},

"tagline" : "You Know, for Search"

}
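The same health check can be scripted instead of run by hand. The following is a minimal sketch, assuming ElasticSearch answers on http://localhost:9200 as in the curl call above; the function names `parse_version` and `fetch_version` are illustrative and not part of any Elastic tooling.

```python
import json
import urllib.request


def parse_version(body: str) -> str:
    """Extract version.number from the JSON returned by the root endpoint."""
    return json.loads(body)["version"]["number"]


def fetch_version(url: str = "http://localhost:9200") -> str:
    """Query a running ElasticSearch node (assumed to be reachable at `url`)."""
    with urllib.request.urlopen(url, timeout=5) as response:
        return parse_version(response.read().decode("utf-8"))


if __name__ == "__main__":
    # Trimmed copy of the response shown above, so the parsing can be tried
    # without a running node.
    sample = '{"cluster_name": "elasticsearch", "version": {"number": "2.4.1"}}'
    print(parse_version(sample))
```

Calling `fetch_version()` on the server should return the same version string as the curl output, provided the service has finished starting.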

ElasticSearch is now ready to index our logs, let’s set up Kibana.

Setting up Kibana

$ curl -L -O https://download.elastic.co/kibana/kibana/kibana-4.6.1-amd64.deb

[...]

$ sudo dpkg -i kibana-4.6.1-amd64.deb

[...]

$ sudo update-rc.d kibana defaults

$ sudo /etc/init.d/kibana start

kibana started

Then open http://localhost:5601 to check that Kibana is working.

The ELK stack is now ready to be used! The installation is complete, so we can move on to the most interesting part of this tutorial: how to set up Sensu to forward metrics to Logstash.

Back in Sensu, we need to configure some checks to collect the metrics that we will forward to the Elastic Stack.

Configure Sensu to forward logs to Logstash

First, get the Sensu plugins that collect the metrics you want. For this article, I will use the netstat plugins. You can find a lot of different check plugins on the Sensu Community GitHub page. Here, we will get some stats about our server with the metrics-sockstat.rb script and check open ports with the check-ports.rb script. Go to your client and run these commands.

$ sudo apt-get install ruby-dev build-essential

[...]

$ sudo gem install sensu-plugins-network-checks

[...]

$ metrics-sockstat.rb

network.sockets.total_used 121 1476689348

network.sockets.TCP.inuse 17 1476689348

network.sockets.TCP.orphan 0 1476689348

network.sockets.TCP.tw 43 1476689348

network.sockets.TCP.alloc 25 1476689348

network.sockets.TCP.mem 2 1476689348

network.sockets.UDP.inuse 2 1476689348

network.sockets.UDP.mem 1 1476689348

network.sockets.UDPLITE.inuse 0 1476689348

network.sockets.RAW.inuse 0 1476689348

network.sockets.FRAG.inuse 0 1476689348

network.sockets.FRAG.memory 0 1476689348
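Each line printed by metrics-sockstat.rb has three whitespace-separated fields: a dotted metric name, a value and a Unix timestamp. To make that layout concrete, here is a small sketch that parses such output into records. The `Metric` type and the `parse_metrics` function are illustrative names, not part of Sensu.

```python
from typing import List, NamedTuple


class Metric(NamedTuple):
    name: str       # dotted metric path, e.g. network.sockets.TCP.inuse
    value: float    # measured value
    timestamp: int  # Unix timestamp in seconds


def parse_metrics(output: str) -> List[Metric]:
    """Parse 'name value timestamp' lines, skipping lines that do not have three fields."""
    metrics = []
    for line in output.splitlines():
        parts = line.split()
        if len(parts) != 3:
            continue
        name, value, timestamp = parts
        metrics.append(Metric(name, float(value), int(timestamp)))
    return metrics


# Two lines copied from the plugin output above.
sample_output = """network.sockets.total_used 121 1476689348
network.sockets.TCP.inuse 17 1476689348"""

if __name__ == "__main__":
    for metric in parse_metrics(sample_output):
        print(metric.name, metric.value, metric.timestamp)
```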

The next step is to register the checks: create the file /etc/sensu/conf.d/check.json on the Sensu client and write the description of your checks.

{

"checks": {

"check_ssh": {

"type": "metric",

"command": "check-ports.rb -h 127.0.0.1 -p 22 -t 30",

"interval": 60,

"subscribers": ["port_open"],

"handlers": ["logstash"]

},

"check_dns": {

"type": "metric",

"command": "check-ports.rb -h 127.0.0.1 -p 53 -t 30",

"interval": 60,

"subscribers": ["port_open"],

"handlers": ["logstash"]

},

"check_http": {

"type": "metric",

"command": "check-ports.rb -h 127.0.0.1 -p 80 -t 30",

"interval": 60,

"subscribers": ["port_open"],

"handlers": ["logstash"]

},

"check_https": {

"type": "metric",

"command": "check-ports.rb -h 127.0.0.1 -p 443 -t 30",

"interval": 60,

"subscribers": ["port_open"],

"handlers": ["logstash"]

}

}

}
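Hand-written JSON files like this one are easy to break, for example with a missing comma between two fields, so it can help to validate the file before restarting the client. The sketch below uses only Python's standard `json` module. The function name `find_json_error` and the two sample strings are illustrative.

```python
import json
from typing import Optional


def find_json_error(text: str) -> Optional[str]:
    """Return a description of the first JSON syntax error, or None if the text parses."""
    try:
        json.loads(text)
    except json.JSONDecodeError as exc:
        return "line {}, column {}: {}".format(exc.lineno, exc.colno, exc.msg)
    return None


# A well-formed fragment and the same fragment with a comma missing.
good = '{"checks": {"check_ssh": {"type": "metric", "interval": 60}}}'
bad = '{"checks": {"check_ssh": {"type": "metric" "interval": 60}}}'

if __name__ == "__main__":
    print(find_json_error(good))
    print(find_json_error(bad))
```

To check the real file, read /etc/sensu/conf.d/check.json and pass its content to `find_json_error`.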

The check.json file describes each check to the client. Most fields are self-explanatory, except perhaps subscribers and handlers.

The subscribers field contains tags given to a group of checks. On the client, you describe the checks in the /etc/sensu/conf.d/check.json file and give them tags, then put the same tags in the /etc/sensu/conf.d/client.json file, and the Sensu client will perform these checks.

The handlers field selects the handler that will process the metrics. This is the mechanism we will use to tell Sensu to forward the logs to Logstash.
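The tag matching between subscribers and subscriptions can be pictured with a few lines of code. This is a simplified model of the behavior described in this article, not Sensu's actual implementation. The function name `checks_for_client` and the extra `check_disk` entry are hypothetical.

```python
from typing import Dict, List


def checks_for_client(checks: Dict[str, dict], subscriptions: List[str]) -> List[str]:
    """Return the names of the checks whose subscribers overlap the client's subscriptions."""
    wanted = set(subscriptions)
    return sorted(
        name
        for name, definition in checks.items()
        if wanted & set(definition.get("subscribers", []))
    )


# Two checks from check.json, plus a hypothetical "check_disk" check (not part
# of this article) tagged with a subscription the client does not have.
checks = {
    "check_ssh": {"subscribers": ["port_open"]},
    "check_http": {"subscribers": ["port_open"]},
    "check_disk": {"subscribers": ["storage"]},
}

# Subscriptions taken from the client definition file.
client_subscriptions = ["port_open"]

if __name__ == "__main__":
    print(checks_for_client(checks, client_subscriptions))
```

With these inputs only the two port checks are selected. The hypothetical `check_disk` is ignored because the client does not subscribe to `storage`.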

Now, your Uchiwa UI should look like this.

Create the file /etc/sensu/conf.d/handlers.json on Sensu server and add the content below.

{

"handlers": {

"logstash": {

"type": "udp",

"socket": {

"host": "127.0.0.1",

"port": 5514

}

}

}

}

As described in this configuration file, the Sensu server will send each metric it gathers to Logstash over UDP on port 5514.
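To illustrate what a UDP handler of this kind amounts to, here is a minimal sketch that serializes an event as JSON and sends it as a single datagram, then reads it back from a local listener. It is a stand-in written for this explanation, not Sensu's code. The event fields are a simplified assumption, and the demo binds to an ephemeral local port rather than 5514 so it does not interfere with a running Logstash.

```python
import json
import socket


def forward_event(event: dict, host: str, port: int) -> None:
    """Send one event as a JSON-encoded UDP datagram."""
    payload = json.dumps(event).encode("utf-8")
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(payload, (host, port))


def demo() -> dict:
    """Start a local UDP listener, forward a sample event to it and return what arrived."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as listener:
        listener.bind(("127.0.0.1", 0))   # port 0: let the OS pick a free port
        listener.settimeout(2.0)
        port = listener.getsockname()[1]
        # Simplified, assumed event layout: who ran the check and what it printed.
        event = {
            "client": {"name": "client", "address": "127.0.0.1"},
            "check": {"name": "check_ssh", "output": "example output", "status": 0},
        }
        forward_event(event, "127.0.0.1", port)
        data, _ = listener.recvfrom(65535)
        return json.loads(data.decode("utf-8"))


if __name__ == "__main__":
    print(demo())
```

UDP is fire-and-forget: if nothing is listening on the target port, the datagram is silently lost, which is worth keeping in mind when metrics do not show up downstream.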

That completes the Sensu server configuration. Logstash now needs to be configured to receive the metrics and insert them into ElasticSearch.

Copy this configuration to /etc/logstash/conf.d/00-sensu-metrics.conf. It tells Logstash where to listen in order to receive the logs.

input {

udp {

port => 5514

codec => "json"

type => "rsyslog"

}

}

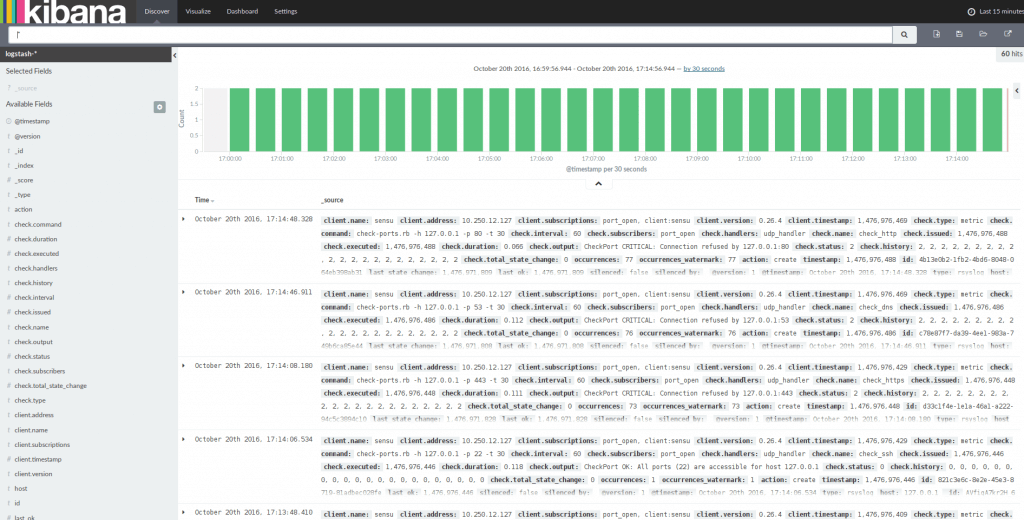

Restart the Sensu client, the Sensu server and Logstash, then open Kibana in your web browser: you should see some metrics appear.

This is the end of this article. We have seen how to install ELK and Sensu, how to get metrics using Sensu checks and how to forward them as logs to ElasticSearch.

In the next article, we will build on what we did here to collect logs from a service and extract information from them. We will see how to create our own Sensu check in order to get metrics from that service, then configure Logstash to process them with Grok, and finally draw some charts from this data in Kibana. See you next time!

About TrackIt

TrackIt is an international AWS cloud consulting, systems integration, and software development firm headquartered in Marina del Rey, CA.

We have built our reputation on helping media companies architect and implement cost-effective, reliable, and scalable Media & Entertainment workflows in the cloud. These include streaming and on-demand video solutions, media asset management, and archiving, incorporating the latest AI technology to build bespoke media solutions tailored to customer requirements.

Cloud-native software development is at the foundation of what we do. We specialize in Application Modernization, Containerization, Infrastructure as Code and event-driven serverless architectures by leveraging the latest AWS services. Along with our Managed Services offerings which provide 24/7 cloud infrastructure maintenance and support, we are able to provide complete solutions for the media industry.