Written by Maxime Roth Fessler, DevOps & Backend Developer at TrackIt

Deploying large language models (LLMs) on Kubernetes provides clear advantages in terms of portability, scalability, and seamless integration with cloud-native services. However, managing the efficient scaling of these models—particularly when relying on GPU resources—introduces specific operational challenges. Unlike CPU-based workloads, GPU-intensive applications demand careful resource allocation and real-time monitoring to maintain performance and cost efficiency.

The following guide demonstrates how to dynamically scale LLMs such as DeepSeek on Amazon EKS (Elastic Kubernetes Service) using Auto Mode. By leveraging GPU utilization metrics and configuring Horizontal Pod Autoscaling (HPA), the approach enables responsive, automated scaling of inference workloads based on actual demand.

Contents

- What are LLMs?

- Why use Amazon EKS to deploy LLMs?

- Why use EKS Auto Mode?

- Benefits of Stateless LLM APIs for Scaling

- Deploying the Amazon EKS Cluster

- Setting Up Autoscaling with GPU Metrics

- Exporting GPU Metrics with the DCGM-Exporter

- Scraping the Metrics with Prometheus

- Transforming Prometheus Metrics to Kubernetes Metrics

- Scaling Based on Custom Kubernetes Metrics

- Load Balancing with ALB for Efficient Traffic Distribution

- Testing the DeepSeek LLM

- Conclusion

- About TrackIt

What are LLMs?

LLMs are advanced AI models trained on massive volumes of text data to understand and generate human language. Built using deep learning techniques, these models are capable of performing a wide range of language-related tasks—answering questions, summarizing documents, translating text, and even generating creative writing.

Their ability to grasp complex linguistic patterns has made them essential to modern natural language processing, helping improve everything from chatbots and virtual assistants to content automation and knowledge extraction.

Why use Amazon EKS to deploy LLMs?

Amazon EKS provides numerous benefits for deploying GPU-intensive LLMs. It offers elastic scalability to handle varying workloads, along with cost optimization through dynamic resource management. The platform’s monitoring and alerting features enhance operational efficiency by enabling quicker issue detection and resolution. EKS also integrates seamlessly with infrastructure-as-code tools like Terraform, facilitating automated and consistent deployments.

For teams already familiar with Kubernetes, deploying LLMs on EKS allows them to leverage their existing expertise in container orchestration, ensuring a smoother and more efficient deployment process.

Why use EKS Auto Mode?

EKS Auto Mode significantly simplifies the management of GPU resources in a Kubernetes cluster. With Auto Mode, simply specifying that a pod requires a GPU ensures that EKS automatically schedules the pod on a node equipped with the necessary GPU hardware. This eliminates the need for manual node configuration and driver installation, as EKS handles the provisioning of GPU-enabled nodes and the installation of NVIDIA drivers.

As a result, only the Horizontal Pod Autoscaler (HPA) needs to be configured for scaling pods, since node scaling is seamlessly managed by EKS Auto Mode.
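To illustrate what "specifying that a pod requires a GPU" looks like, the sketch below writes a minimal Pod manifest whose container sets a limit on the `nvidia.com/gpu` extended resource. The pod name, image, and file name are hypothetical. The sketch assumes the cluster has an Auto Mode node pool that permits GPU instance types (Auto Mode's built-in general-purpose pool typically does not). The toleration only matters if that pool taints its GPU nodes.

```bash
# Sketch only: writes a minimal Pod manifest that requests one NVIDIA GPU.
# Pod name, image, and output file are hypothetical placeholders.
cat > gpu-request-example.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: gpu-request-example                  # hypothetical name
spec:
  restartPolicy: Never
  tolerations:                               # harmless if GPU nodes are not tainted
    - key: nvidia.com/gpu
      operator: Exists
      effect: NoSchedule
  containers:
    - name: cuda
      image: nvidia/cuda:12.4.1-base-ubuntu22.04   # hypothetical image choice
      command: ["nvidia-smi"]
      resources:
        limits:
          nvidia.com/gpu: 1                  # this request is what places the pod on a GPU node
EOF
echo "wrote gpu-request-example.yaml"

# To try it (assumes a node pool that allows GPU instance types):
#   kubectl apply -f gpu-request-example.yaml
#   kubectl logs gpu-request-example
```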

Benefits of Stateless LLM APIs for Scaling

A stateless LLM API does not retain any information between requests: each interaction is processed independently. This stateless architecture provides several advantages for scaling and reliability.

It enables seamless integration with load management tools like AWS’s Application Load Balancer (ALB), as requests can be distributed evenly across all available instances without requiring session affinity. The lack of internal state also simplifies infrastructure, reduces coordination overhead, and enhances system resilience.

When orchestrated using Amazon EKS, stateless LLM APIs benefit from automated scaling based on traffic. Kubernetes can dynamically adjust resources in response to demand, ensuring efficient handling of usage spikes without requiring complex configuration or manual intervention.

Deploying the Amazon EKS Cluster

The Terraform configuration used in this tutorial is available at: https://github.com/trackit/eks-auto-mode-gpu.

This guide walks through the process of deploying an Amazon EKS cluster using Terraform. Once the cluster is up and running, it covers the deployment of a Large Language Model—DeepSeek in this case—followed by the configuration of the Horizontal Pod Autoscaler (HPA) to automatically adjust compute resources based on GPU utilization metrics.

Prerequisites for this tutorial:

- Helm: A package manager for Kubernetes that simplifies deploying and managing applications on the EKS cluster. Installation instructions are available in the official Helm documentation.

- Terraform: An infrastructure-as-code tool used to define and provision the EKS cluster. Installation guidelines can be found on the Terraform website.

- AWS CLI: The command-line interface for interacting with AWS services, including EKS. Ensure it is installed and configured with the necessary permissions. Refer to the AWS CLI installation page for details.

- kubectl: The command-line tool for managing Kubernetes clusters. Installation instructions are provided in the official Kubernetes documentation.
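Before continuing, a quick way to confirm the prerequisites is to check that each binary is on the PATH. The helper below is a small sketch, and its name is hypothetical.

```bash
# Sketch: report which of the given command-line tools are missing from PATH.
# Returns 0 when all are found, 1 otherwise.
check_tools() {
  local missing=0 tool
  for tool in "$@"; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "ok:      $tool"
    else
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Prerequisites listed above (the AWS CLI binary is named `aws`):
#   check_tools helm terraform aws kubectl
```

Each tool can also report its version with `helm version`, `terraform version`, `aws --version`, and `kubectl version --client`.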

Cluster Deployment Process

Once the required tools are installed, the next step is to create a terraform.tfvars file, using the sample.tfvars file as a reference. When setting values in this file, set the feature flags that are enabled later in this tutorial to false for now. Their purpose is explained in the autoscaling section.
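Editing the flags by hand works fine. For scripted setups, the sketch below sets a boolean variable in a tfvars file. The helper name is hypothetical, and it assumes each variable is assigned on its own line in the form `name = value`. The flag names in the usage comment are the ones the autoscaling step later switches to true. They are assumed here to be the relevant flags.

```bash
# Sketch: set a boolean variable in a .tfvars file.
# Assumes one assignment per line in the form `name = value`.
set_tfvar_bool() {
  local file="$1" name="$2" value="$3"
  if [ "$value" != true ] && [ "$value" != false ]; then
    echo "value must be true or false" >&2
    return 2
  fi
  if grep -Eq "^[[:space:]]*${name}[[:space:]]*=" "$file"; then
    # Replace the right-hand side of the existing assignment.
    # -i.bak works with both GNU and BSD sed; the backup is removed afterwards.
    sed -i.bak -E "s/^([[:space:]]*${name}[[:space:]]*=[[:space:]]*).*/\1${value}/" "$file" &&
      rm -f "$file.bak"
  else
    # Append the assignment when the variable is not in the file yet.
    printf '%s = %s\n' "$name" "$value" >> "$file"
  fi
}

# Example usage (assumed flag names, taken from the autoscaling step):
#   cp sample.tfvars terraform.tfvars
#   for flag in deploy_deepseek enable_autoscaling enable_gpu enable_auto_mode_node_pool; do
#     set_tfvar_bool terraform.tfvars "$flag" false
#   done
```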

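If the working directory has not been initialized yet, run `terraform init` first to download the required providers and modules. Then, to generate an execution plan and save it as plan.out, run the following command: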

```bash
terraform plan -out=plan.out
```

This command analyzes the Terraform configuration and creates a plan detailing the actions needed to reach the desired state.

After reviewing and approving the plan, apply it by running:

```bash
terraform apply "plan.out"
```

This will execute the planned actions, including creating the EKS cluster.

Finally, use the AWS CLI to update the kubectl configuration with the EKS cluster details. Replace the region and cluster name with the appropriate values:

```bash
aws eks --region us-west-2 update-kubeconfig --name eks-automode-gpu
```
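The three commands above can be wrapped in a small function. The sketch below assumes it runs from the root of the cloned repository. It adds `terraform init`, which Terraform requires once per working directory before planning, and keeps a manual confirmation before `terraform apply`. It ends with `kubectl cluster-info` as a connectivity check. The function name is hypothetical. The region and cluster name are read from `AWS_REGION` and `CLUSTER_NAME` if set, with defaults matching this tutorial.

```bash
# Sketch: plan, confirm, apply, then point kubectl at the new cluster.
# AWS_REGION / CLUSTER_NAME default to the values used in this tutorial.
deploy_cluster() {
  local region="${AWS_REGION:-us-west-2}"
  local cluster="${CLUSTER_NAME:-eks-automode-gpu}"
  local answer

  # Providers and modules must be downloaded before the first plan.
  terraform init -input=false || return 1
  terraform plan -out=plan.out || return 1

  # Keep a human in the loop: the saved plan is only applied after review.
  read -r -p "Apply plan.out? [y/N] " answer
  if [ "$answer" != "y" ]; then
    echo "aborted" >&2
    return 1
  fi

  terraform apply plan.out || return 1
  aws eks --region "$region" update-kubeconfig --name "$cluster" || return 1

  # Quick check that the API server of the new cluster is reachable.
  kubectl cluster-info
}
```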

Setting Up Autoscaling with GPU Metrics

The Horizontal Pod Autoscaler (HPA) in Kubernetes is natively designed to scale applications based on CPU and memory metrics. However, to leverage GPU metrics or other custom metrics, such as HTTP requests per second, additional configuration is required. This involves setting up a custom metrics server or using tools like Prometheus and the Prometheus Adapter for Kubernetes to collect and expose these metrics.

For this tutorial, NVIDIA's DCGM-Exporter container exposes GPU metrics for Prometheus. Prometheus, deployed and managed by the Prometheus Operator, scrapes data from the DCGM-Exporter. The Prometheus Adapter then exposes these metrics through the Kubernetes custom metrics API (custom.metrics.k8s.io), which lets the HPA scale applications based on GPU utilization.

To deploy the autoscaling components with the required configurations, open the Terraform variables file (tfvars) and ensure the following variables are set to true:

```hcl
deploy_deepseek            = true
enable_autoscaling         = true
enable_gpu                 = true
enable_auto_mode_node_pool = true
```
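A mistyped or missing flag is easy to overlook, so a check like the following can be run before `terraform plan`. It is a hypothetical helper that assumes one `name = value` assignment per line, with no trailing comment.

```bash
# Sketch: succeed only if every listed flag is set to true in the file.
# Usage: check_tfvars_true FILE FLAG...
check_tfvars_true() {
  local file="$1" flag status=0
  shift
  for flag in "$@"; do
    if ! grep -Eq "^[[:space:]]*${flag}[[:space:]]*=[[:space:]]*true[[:space:]]*$" "$file"; then
      echo "not true: $flag" >&2
      status=1
    fi
  done
  return "$status"
}

#   check_tfvars_true terraform.tfvars \
#     deploy_deepseek enable_autoscaling enable_gpu enable_auto_mode_node_pool
```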

Next, generate the plan file:

```bash
terraform plan -out=plan.out
```

Then, apply the plan to the cluster:

```bash
terraform apply "plan.out"
```

This sets up a cluster capable of autoscaling based on application workload usage. The next sections will explore the autoscaling process in greater detail.

Exporting GPU Metrics with the DCGM-Exporter

The DCGM (Data Center GPU Manager) Exporter is a tool developed by NVIDIA that collects GPU metrics and exposes them in a format compatible with Prometheus, a widely used monitoring and alerting toolkit. Once the autoscaling setup is complete, a DCGM-Exporter pod runs on every GPU-equipped node. To verify this, list the pods in the gpu-monitoring namespace:

```bash
kubectl get pods -n gpu-monitoring
```

The output lists the pods created by the DCGM-Exporter DaemonSet, one per GPU node, confirming that the exporter is running on the appropriate GPU-enabled nodes.

To inspect the metrics exposed by one of these pods, forward its port 9400 to the local machine, replacing the pod name below with one from the previous output:

```bash
kubectl port-forward dcgm-exporter-your-id 9400:9400 -n gpu-monitoring
```

Then open http://127.0.0.1:9400/metrics to view the GPU metrics exposed by the pod in Prometheus format.

Autoscaling decisions will primarily be based on the GPU utilization metric. However, the DCGM exporter provides a wide range of metrics, allowing for more customized and specific scaling behaviors based on other parameters such as GPU memory usage, temperature, or power consumption.
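For a quick look at the utilization values without reading the full /metrics page, the sketch below filters DCGM_FI_DEV_GPU_UTIL samples out of the Prometheus text format. It prints each sample's `gpu` label with its value. The function name is hypothetical, and it assumes samples carry no trailing timestamp.

```bash
# Sketch: print GPU utilization samples from DCGM-Exporter's /metrics output.
# Assumes the Prometheus text format with no trailing timestamp on samples.
gpu_util_from_metrics() {
  awk '
    # Keep only DCGM_FI_DEV_GPU_UTIL samples; HELP/TYPE comment lines and
    # other metric families do not match this pattern.
    /^DCGM_FI_DEV_GPU_UTIL[{ ]/ {
      gpu = "?"
      # Extract the value of the gpu="..." label when present.
      if (match($0, /[{,]gpu="[^"]*"/)) {
        gpu = substr($0, RSTART + 6, RLENGTH - 7)
      }
      # The sample value is the last whitespace-separated field.
      print "gpu=" gpu " util=" $NF
    }
  '
}

# With the port-forward above running:
#   curl -s http://127.0.0.1:9400/metrics | gpu_util_from_metrics
```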

Scraping the Metrics with Prometheus

The Prometheus Operator is a powerful tool that simplifies the deployment and management of Prometheus and its related monitoring components within a Kubernetes cluster. It automates the configuration of Prometheus servers, Alertmanager, and other monitoring tools, ensuring they are set up correctly and can scale with the infrastructure.

In this configuration, the Prometheus Operator is set up to monitor specific ServiceMonitor resources. These resources define how Prometheus should scrape metrics from various services running in the cluster. By configuring the serviceMonitorNamespaceSelector and serviceMonitorSelector, the operator is instructed to watch for ServiceMonitor resources in the gpu-monitoring namespace that have the label app.kubernetes.io/name: dcgm-exporter. This setup allows Prometheus to automatically discover and scrape GPU metrics exposed by the DCGM exporter.
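As an illustration of the configuration described above, the sketch below writes two files. The first contains Helm values that set the two selectors on the Prometheus resource, and the second is a ServiceMonitor that those selectors would match. The sketch is not taken from the repository. It assumes the operator was installed with the kube-prometheus-stack chart, which the `prometheus-operator-kube-p-prometheus-0` pod name suggests but the article does not confirm. The Service label and port name used by the exporter are also assumptions, so compare them with `kubectl get svc -n gpu-monitoring --show-labels`.

```bash
# Sketch only; file names and the exporter Service details are assumptions.
# 1) kube-prometheus-stack values restricting ServiceMonitor discovery.
cat > prometheus-values.yaml <<'EOF'
prometheus:
  prometheusSpec:
    # Only consider ServiceMonitors in namespaces matching this selector...
    serviceMonitorNamespaceSelector:
      matchLabels:
        kubernetes.io/metadata.name: gpu-monitoring
    # ...and only those carrying this label.
    serviceMonitorSelector:
      matchLabels:
        app.kubernetes.io/name: dcgm-exporter
EOF

# 2) A ServiceMonitor that those selectors would pick up.
cat > dcgm-servicemonitor.yaml <<'EOF'
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: dcgm-exporter
  namespace: gpu-monitoring
  labels:
    app.kubernetes.io/name: dcgm-exporter
spec:
  selector:
    matchLabels:
      app.kubernetes.io/name: dcgm-exporter   # assumed label on the exporter's Service
  endpoints:
    - port: metrics                           # assumed name of the Service port for 9400
      interval: 15s
EOF
```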

To list the pods related to Prometheus, run:

```bash
kubectl get po -n monitoring
```

To verify if the operator is scraping GPU metrics from the DCGM exporter, access the Prometheus dashboard with the following command:

```bash
kubectl port-forward prometheus-prometheus-operator-kube-p-prometheus-0 9090:9090 -n monitoring
```

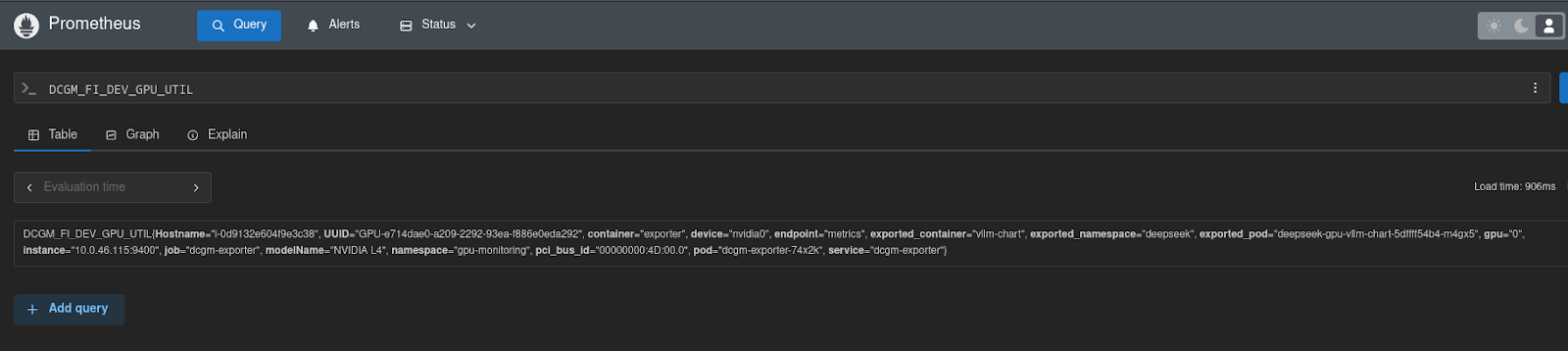

Then navigate to http://127.0.0.1:9090, enter the metric DCGM_FI_DEV_GPU_UTIL in the query field, and execute the query.

The Prometheus query DCGM_FI_DEV_GPU_UTIL provides GPU utilization metrics for a specific NVIDIA L4 GPU on host i-0d9132e604f9e3c38. The data is collected by the dcgm-exporter in the gpu-monitoring namespace, showing the GPU’s usage by the deepseek-gpu-vllm-chart-5dffff54b4-m4gx5 pod in the deepseek namespace. Seeing such data indicates that the Prometheus Operator is functioning correctly.
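The same query can also be run from a terminal through the Prometheus HTTP API (`/api/v1/query`), which is convenient for scripting. The helper below is a sketch. `prom_query` and `PROM_URL` are hypothetical names, and it assumes the port-forward above is still running.

```bash
# Sketch: run an instant query against the Prometheus HTTP API.
# PROM_URL is a hypothetical variable; it defaults to the port-forward above.
prom_query() {
  local base="${PROM_URL:-http://127.0.0.1:9090}"
  # -G turns the --data-urlencode pair into a URL-encoded GET query string.
  curl -sfG "${base}/api/v1/query" --data-urlencode "query=$1"
}

# Raw samples, as in the dashboard:
#   prom_query 'DCGM_FI_DEV_GPU_UTIL'
# Only the sampled values (requires jq):
#   prom_query 'DCGM_FI_DEV_GPU_UTIL' | jq -r '.data.result[].value[1]'
# Average across all GPUs:
#   prom_query 'avg(DCGM_FI_DEV_GPU_UTIL)'
```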

Transforming Prometheus Metrics to Kubernetes Metrics

The Prometheus Adapter for Kubernetes Metrics APIs enables the Horizontal Pod Autoscaler (HPA) to use custom metrics from Prometheus by implementing the Kubernetes custom metrics API. The adapter is configured to query the Prometheus server for GPU utilization metrics (DCGM_FI_DEV_GPU_UTIL) and convert them into a format Kubernetes can use for autoscaling decisions. The custom rule defined in the configuration allows the HPA to scale pods based on average GPU utilization, mapped to specific namespaces and pods.
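The sketch below shows what such a rule can look like, written as values for the prometheus-community/prometheus-adapter Helm chart. It is not taken from the repository, and it rests on three assumptions:

- The Prometheus URL is inferred from the pod name shown earlier.
- The `exported_namespace`/`exported_pod` labels assume Prometheus renamed the workload's `namespace`/`pod` labels at scrape time, which is the default when they collide with target labels.
- The division by 100 is chosen to be consistent with the 400m/500m HPA readings shown later.

```bash
# Sketch of a Prometheus Adapter rule for DCGM_FI_DEV_GPU_UTIL (assumptions noted inline).
cat > prometheus-adapter-values.yaml <<'EOF'
prometheus:
  url: http://prometheus-operator-kube-p-prometheus.monitoring.svc   # inferred, assumption
  port: 9090
rules:
  default: false
  custom:
    - seriesQuery: 'DCGM_FI_DEV_GPU_UTIL{exported_namespace!="",exported_pod!=""}'
      resources:
        overrides:
          # Map the workload's labels (renamed exported_* at scrape time) to
          # Kubernetes objects so the HPA can look the metric up per pod.
          exported_namespace: {resource: "namespace"}
          exported_pod: {resource: "pod"}
      name:
        matches: "DCGM_FI_DEV_GPU_UTIL"
        as: "DCGM_FI_DEV_GPU_UTIL"
      # DCGM reports 0-100; dividing by 100 makes 40% read as 400m (assumption).
      metricsQuery: 'avg(<<.Series>>{<<.LabelMatchers>>}) by (<<.GroupBy>>) / 100'
EOF

# Install or upgrade the adapter with these values (release name is hypothetical):
#   helm upgrade --install prometheus-adapter prometheus-community/prometheus-adapter \
#     -n monitoring -f prometheus-adapter-values.yaml
# Then check that the custom metrics API serves the metric for the DeepSeek pods:
#   kubectl get --raw "/apis/custom.metrics.k8s.io/v1beta1/namespaces/deepseek/pods/*/DCGM_FI_DEV_GPU_UTIL"
```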

Scaling Based on Custom Kubernetes Metrics

The configuration establishes a Horizontal Pod Autoscaler (HPA) to dynamically adjust the number of pod replicas for a specific deployment (the DeepSeek deployment) based on GPU utilization.

When the HPA adds pods that do not fit on the existing GPU nodes, EKS Auto Mode automatically provisions additional GPU-enabled nodes. The HPA is configured to scale the deployment between one and five replicas, a range that can be adjusted as needed. It relies on the custom GPU utilization metric (DCGM_FI_DEV_GPU_UTIL) and targets an average utilization of 50%, which is also configurable.

The setup includes defined scaling behaviors with stabilization windows and policies to ensure efficient and responsive scaling, optimizing resource usage for GPU-intensive workloads.

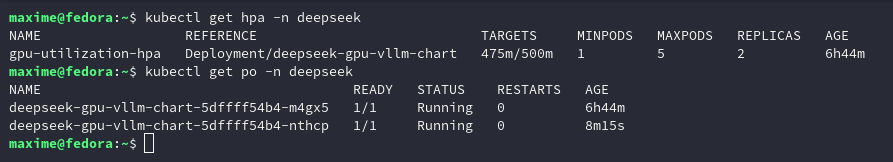
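For reference, an autoscaling/v2 manifest with the properties described above might look like the sketch below: one to five replicas, a per-pod average target on DCGM_FI_DEV_GPU_UTIL, and explicit scaling behavior. It is not the repository's configuration. The HPA name and behavior values are illustrative, and the Deployment name is inferred from the pod name in the Prometheus output. The `500m` target assumes the adapter reports utilization as a 0 to 1 fraction.

```bash
# Sketch: an autoscaling/v2 HPA resembling the configuration described above.
cat > deepseek-hpa.yaml <<'EOF'
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: deepseek-gpu-hpa                 # hypothetical name
  namespace: deepseek
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: deepseek-gpu-vllm-chart        # inferred; check with: kubectl get deploy -n deepseek
  minReplicas: 1
  maxReplicas: 5
  metrics:
    - type: Pods
      pods:
        metric:
          name: DCGM_FI_DEV_GPU_UTIL     # custom metric served by the Prometheus Adapter
        target:
          type: AverageValue
          averageValue: 500m             # 0.5, i.e. 50% if utilization is reported as a fraction
  behavior:
    scaleUp:
      stabilizationWindowSeconds: 60     # illustrative values
      policies:
        - type: Pods
          value: 1
          periodSeconds: 60
    scaleDown:
      stabilizationWindowSeconds: 300
      policies:
        - type: Pods
          value: 1
          periodSeconds: 120
EOF
# Apply with: kubectl apply -f deepseek-hpa.yaml
```

Adding at most one pod per minute is a deliberately conservative choice in this sketch. Each new replica may need a freshly provisioned GPU node, so adding pods faster than nodes can join tends to produce pending pods rather than extra capacity.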

The current HPA status can be checked with the following command:

```bash
kubectl get hpa -n deepseek
```

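In the output, the TARGETS column shows the current average GPU utilization against the target. For example, 400m/500m corresponds to 40% utilization against the 50% target.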
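To see how such readings translate into replica counts, the sketch below reproduces the core of the HPA algorithm documented by Kubernetes:

`desiredReplicas = ceil(currentReplicas * currentValue / targetValue)`

The calculation is skipped when the ratio is within the default 0.1 tolerance, and the result is clamped to the min/max replica bounds (1 and 5 by default here, matching this setup). This is a simplification that ignores stabilization windows, scaling policies, and unready pods. The function name is hypothetical.

```bash
# Sketch of the core HPA replica calculation for a Pods/AverageValue metric.
# Values are in milli-units, as printed by kubectl (400m -> 400).
# Usage: hpa_desired_replicas CURRENT METRIC_MILLI TARGET_MILLI [MIN] [MAX]
hpa_desired_replicas() {
  local current=$1 metric=$2 target=$3 min=${4:-1} max=${5:-5}
  local desired
  if (( 10 * metric >= 9 * target && 10 * metric <= 11 * target )); then
    # |metric/target - 1| <= 0.1: within tolerance, keep the current count.
    desired=$current
  else
    # Integer ceiling of current * metric / target.
    desired=$(( (current * metric + target - 1) / target ))
  fi
  # Clamp to the configured replica range.
  if (( desired < min )); then desired=$min; fi
  if (( desired > max )); then desired=$max; fi
  echo "$desired"
}
```

For example, `hpa_desired_replicas 1 900 500` prints 2, and `hpa_desired_replicas 2 475 500` also prints 2. In the second case, 475m is within 10% of the 500m target, so the replica count stays at two.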

Load Balancing with ALB for Efficient Traffic Distribution

In addition to the Horizontal Pod Autoscaler (HPA), the AWS Application Load Balancer (ALB) is used to distribute incoming traffic evenly across available pods. The ALB ensures balanced workloads, enhancing application performance and reliability. By routing requests effectively, the ALB prevents any single pod from becoming a bottleneck. This combination of HPA for scaling and ALB for load distribution creates a robust and efficient infrastructure capable of handling varying levels of demand.

The ingress details can be retrieved with the following command:

```bash
kubectl get ingress -n deepseek
```

The ADDRESS column of the output contains the ALB's DNS hostname, which can be used to test the application.
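The ALB can take a few minutes to provision, so the ingress address may be empty at first. The sketch below polls the Ingress status until a hostname appears. The function name is hypothetical, and it assumes a single Ingress in the namespace.

```bash
# Sketch: wait until the Ingress in a namespace reports an ALB hostname.
# Usage: wait_for_alb_hostname [NAMESPACE] [ATTEMPTS]  (10 s between attempts)
wait_for_alb_hostname() {
  local ns="${1:-deepseek}" attempts="${2:-30}" host i
  for ((i = 1; i <= attempts; i++)); do
    host=$(kubectl get ingress -n "$ns" \
      -o jsonpath='{.items[0].status.loadBalancer.ingress[0].hostname}' 2>/dev/null)
    if [ -n "$host" ]; then
      echo "$host"
      return 0
    fi
    sleep 10   # ALB provisioning can take a few minutes
  done
  echo "no ALB hostname in namespace $ns after $attempts attempts" >&2
  return 1
}

#   HOST=$(wait_for_alb_hostname deepseek) && echo "http://$HOST"
```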

Testing the DeepSeek LLM

The setup is now fully functional and capable of handling the DeepSeek LLM. The API can be tested using curl to verify that it responds correctly and meets the expected requirements. Adjust parameters such as the model name as necessary during testing.

```sh
curl -s -X POST "http://$(terraform output -raw deepseek_ingress_hostname)/v1/chat/completions" \
  -H "Content-Type: application/json" \
  --data '{
    "model": "deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B",
    "messages": [
      { "role": "user", "content": "What is Kubernetes?" }
    ]
  }' | jq -r '.choices[0].message.content'
```
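For repeated testing, the request above can be wrapped in two small helpers, sketched below. `jq -n --arg` builds the JSON body, so prompts containing quotes are escaped correctly. `list_models` queries `/v1/models` to find the value for the `model` field. The function names and the `DEEPSEEK_URL` variable are hypothetical. The `/v1/models` route assumes an OpenAI-compatible server such as vLLM, which the `vllm` in the pod name suggests.

```bash
# Sketch: helpers around the OpenAI-compatible endpoint used above.
# DEEPSEEK_URL is a hypothetical variable of the form http://<ALB hostname>.
# Requires curl and jq.

# Print the model IDs served by the endpoint (values for the "model" field).
list_models() {
  curl -sf "${DEEPSEEK_URL}/v1/models" | jq -r '.data[].id'
}

# Send a single user prompt and print the assistant's reply.
ask() {
  local prompt="$1"
  local model="${2:-deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B}"
  # jq -n builds the request body, escaping quotes in the prompt correctly.
  jq -n --arg model "$model" --arg prompt "$prompt" \
    '{model: $model, messages: [{role: "user", content: $prompt}]}' |
    curl -sf -X POST "${DEEPSEEK_URL}/v1/chat/completions" \
      -H "Content-Type: application/json" --data @- |
    jq -r '.choices[0].message.content'
}

# Example:
#   export DEEPSEEK_URL="http://$(terraform output -raw deepseek_ingress_hostname)"
#   list_models
#   ask 'What is "horizontal pod autoscaling"?'
```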

Additionally, the stress-test.sh script from the repository can be used to send continuous requests to the LLM. Running this script for a few minutes demonstrates the Horizontal Pod Autoscaler (HPA) in action.

The following image shows the HPA scaling up to two pods in response to increased load. GPU utilization is at 475m out of the 500m target, indicating efficient resource management and effective scaling to meet demand.
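To generate comparable load without the repository's script, a minimal loop like the one below sends batches of concurrent chat requests. It is not the contents of stress-test.sh. The function name, prompt, and default concurrency are arbitrary choices for illustration.

```bash
# Sketch of a minimal load generator (not the repository's stress-test.sh).
# Usage: simple_load BASE_URL [CONCURRENCY] [ROUNDS]
simple_load() {
  local base_url="$1" concurrency="${2:-8}" rounds="${3:-20}"
  local payload='{"model": "deepseek-ai/DeepSeek-R1-Distill-Qwen-1.5B",
    "messages": [{"role": "user", "content": "Explain Kubernetes autoscaling in detail."}]}'
  local r c
  for ((r = 1; r <= rounds; r++)); do
    # Fire one batch of concurrent requests in the background...
    for ((c = 1; c <= concurrency; c++)); do
      curl -s -o /dev/null -w "round ${r}: HTTP %{http_code}\n" \
        -X POST "${base_url}/v1/chat/completions" \
        -H "Content-Type: application/json" --data "$payload" &
    done
    # ...and wait for the whole batch before starting the next round.
    wait
  done
}

# In one terminal:
#   simple_load "http://$(terraform output -raw deepseek_ingress_hostname)" 8 30
# In another, watch the HPA react:
#   kubectl get hpa -n deepseek --watch
```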

Conclusion

By leveraging Amazon EKS with Auto Mode and GPU metrics, a scalable and efficient infrastructure has been established for deploying and managing Large Language Models (LLMs) such as DeepSeek.

This setup utilizes the Horizontal Pod Autoscaler (HPA) to dynamically adjust resources based on real-time GPU utilization, ensuring optimal performance and cost-efficiency. The integration of Prometheus for monitoring and the Application Load Balancer (ALB) for traffic distribution further enhances the system’s reliability and responsiveness.

About TrackIt

TrackIt is an international AWS cloud consulting, systems integration, and software development firm headquartered in Marina del Rey, CA.

We have built our reputation on helping media companies architect and implement cost-effective, reliable, and scalable Media & Entertainment workflows in the cloud. These include streaming and on-demand video solutions, media asset management, and archiving, incorporating the latest AI technology to build bespoke media solutions tailored to customer requirements.

Cloud-native software development is at the foundation of what we do. We specialize in Application Modernization, Containerization, Infrastructure as Code and event-driven serverless architectures by leveraging the latest AWS services. Along with our Managed Services offerings which provide 24/7 cloud infrastructure maintenance and support, we are able to provide complete solutions for the media industry.