Written by Maxime Roth Fessler, DevOps & Backend Developer at TrackIt

The adoption of cloud-native technologies has transformed the way media workflows are designed and deployed. As organizations face growing volumes of digital assets and increasingly complex distribution needs, scalable and resilient infrastructure becomes essential. Kubernetes has emerged as a popular choice for orchestrating such workloads, but managing stateful services within clusters can introduce operational challenges.

Below is a detailed overview of deploying a Media Asset Management (MAM) solution—Phraseanet—in a production environment using Amazon EKS (Elastic Kubernetes Service). While the application is containerized and orchestrated with Kubernetes, critical stateful components such as the database, Redis, Elasticsearch, and RabbitMQ are offloaded to AWS managed services. This hybrid approach reduces operational complexity and enhances reliability, allowing the MAM to meet production-grade performance and scalability requirements.

Note: While this article focuses primarily on Phraseanet, the concepts discussed—such as auto-scaling and managed services—are broadly applicable and can be adapted for other MAM systems.

Contents

- Why Use Amazon Managed Services Instead of Pods?

- Phraseanet’s Infrastructure Recap

- Transitioning to AWS Managed Solutions

- Monitoring & Alerts Management

- Monitoring Managed Services

- Monitoring Pods and the EKS Cluster

- Automating the Infrastructure with Auto Mode

- How Does EKS Auto Mode Scale Automatically?

- Difference from Managed Node Groups

- Configuring Auto Mode

- Conclusion

- About TrackIt

Why Use Amazon Managed Services Instead of Pods?

Leveraging AWS managed services improves performance, reliability, and scalability while removing the operational burden of running these components inside a Kubernetes cluster. With services such as Amazon RDS and Amazon ElastiCache, AWS takes care of provisioning, patching, backups, and health monitoring, and Multi-AZ deployments provide high availability with automatic failover.

Built-in scaling options, such as adding read replicas or moving to larger instance sizes, allow capacity to follow demand. This is critical for the unpredictable traffic patterns of media asset workflows. These services also integrate with the broader AWS ecosystem (IAM, VPC networking, AWS KMS, and Amazon CloudWatch), which contributes to a cohesive and secure cloud infrastructure. They provide security features such as encryption at rest and in transit, and they are in scope for many AWS compliance programs.

Phraseanet’s Infrastructure Recap

The following summary of the components in the current Phraseanet infrastructure helps identify which services are suitable for replacement with AWS managed solutions:

- Phraseanet Gateway: Serves as the main access point, routing traffic and handling frontend requests.

- Database (MySQL): Stores metadata and associated information for media assets, supporting search, retrieval, and scalability.

- Worker Service: Manages background tasks such as media processing, transcoding, and workflow automation.

- Elasticsearch: Indexes and searches large media libraries, improving metadata retrieval and content discovery.

- FPM (FastCGI Process Manager): Handles PHP processes for frontend and backend interfaces, ensuring responsive user interactions.

- RabbitMQ: Facilitates messaging between services, enabling reliable communication for components like the worker service.

- Redis: Provides caching and session management to reduce database load and improve performance.

- Phraseanet Setup: Initializes the environment by configuring databases and essential system settings.

These services are currently deployed as Kubernetes pods. The objective is to migrate selected components to AWS managed services.

Transitioning to AWS Managed Solutions

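The components best suited to AWS managed replacements are the stateful ones: Redis, RabbitMQ, Elasticsearch, and the MySQL database. The application services (Gateway, FPM, Worker, and Setup) remain Kubernetes workloads. The stateful components map to the following AWS services: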

- Amazon RDS: A managed relational database service (running MySQL here) offering high availability, automated backups, and compute and storage scaling.

- Amazon ElastiCache: A managed Redis or Valkey service optimized for caching and real-time use cases, with built-in security and failover.

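- Amazon OpenSearch Service: A managed service for OpenSearch, the open-source fork of Elasticsearch (legacy Elasticsearch versions up to 7.10 are also supported), providing scalable search and analytics capabilities.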

- Amazon MQ: A managed message broker service that supports RabbitMQ (as well as Apache ActiveMQ). It simplifies broker operations and integrates with AWS services such as IAM and CloudWatch.

Valkey was selected over Redis OSS as the ElastiCache engine. As an open-source fork of Redis, Valkey offers improved multi-threading performance and memory efficiency while remaining compatible with the Redis OSS APIs, so existing Redis clients keep working. AWS also prices ElastiCache for Valkey up to 33% lower than ElastiCache for Redis OSS, which makes it the more cost-effective option.
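
Once these components are offloaded, the pods that remain in the cluster only need to know where the managed endpoints are. The following is a minimal sketch, assuming default ports and in-transit encryption enabled on ElastiCache and Amazon MQ (Amazon MQ for RabbitMQ accepts AMQP over TLS on port 5671). The class `ManagedEndpoints`, the function `connection_urls`, the hostnames, and the database name `phraseanet` are hypothetical. Credentials are omitted, and the way Phraseanet actually consumes these settings (configuration file, Helm values, or environment variables) is not shown here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ManagedEndpoints:
    """Hostnames of the AWS managed services that replace in-cluster pods."""
    rds_host: str         # Amazon RDS for MySQL endpoint
    valkey_host: str      # Amazon ElastiCache for Valkey primary endpoint
    opensearch_host: str  # Amazon OpenSearch Service domain endpoint
    mq_host: str          # Amazon MQ for RabbitMQ broker endpoint


def connection_urls(ep: ManagedEndpoints, db_name: str = "phraseanet") -> dict[str, str]:
    """Build connection URLs for the application pods (Gateway, FPM, Worker, Setup).

    Ports are the services' defaults. The TLS schemes assume in-transit
    encryption is enabled on ElastiCache and Amazon MQ.
    """
    return {
        "database": f"mysql://{ep.rds_host}:3306/{db_name}",
        "cache": f"rediss://{ep.valkey_host}:6379",    # rediss:// = Redis protocol over TLS
        "search": f"https://{ep.opensearch_host}:443",  # OpenSearch REST API over HTTPS
        "queue": f"amqps://{ep.mq_host}:5671",          # AMQP 0-9-1 over TLS
    }


# Hypothetical endpoints; real values come from the AWS console or Terraform outputs.
endpoints = ManagedEndpoints(
    rds_host="mam-db.example.com",
    valkey_host="mam-cache.example.com",
    opensearch_host="mam-search.example.com",
    mq_host="mam-mq.example.com",
)
urls = connection_urls(endpoints)
```

Keeping the endpoints in one place means that switching from in-cluster pods to managed services is a configuration change for the application rather than a code change.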

Monitoring & Alerts Management

Amazon CloudWatch is used for monitoring and alert management. As a native AWS service, CloudWatch provides seamless integration, real-time monitoring, and automated alerts. It supports performance tracking, anomaly detection, and rapid incident response to ensure system reliability and operational efficiency.

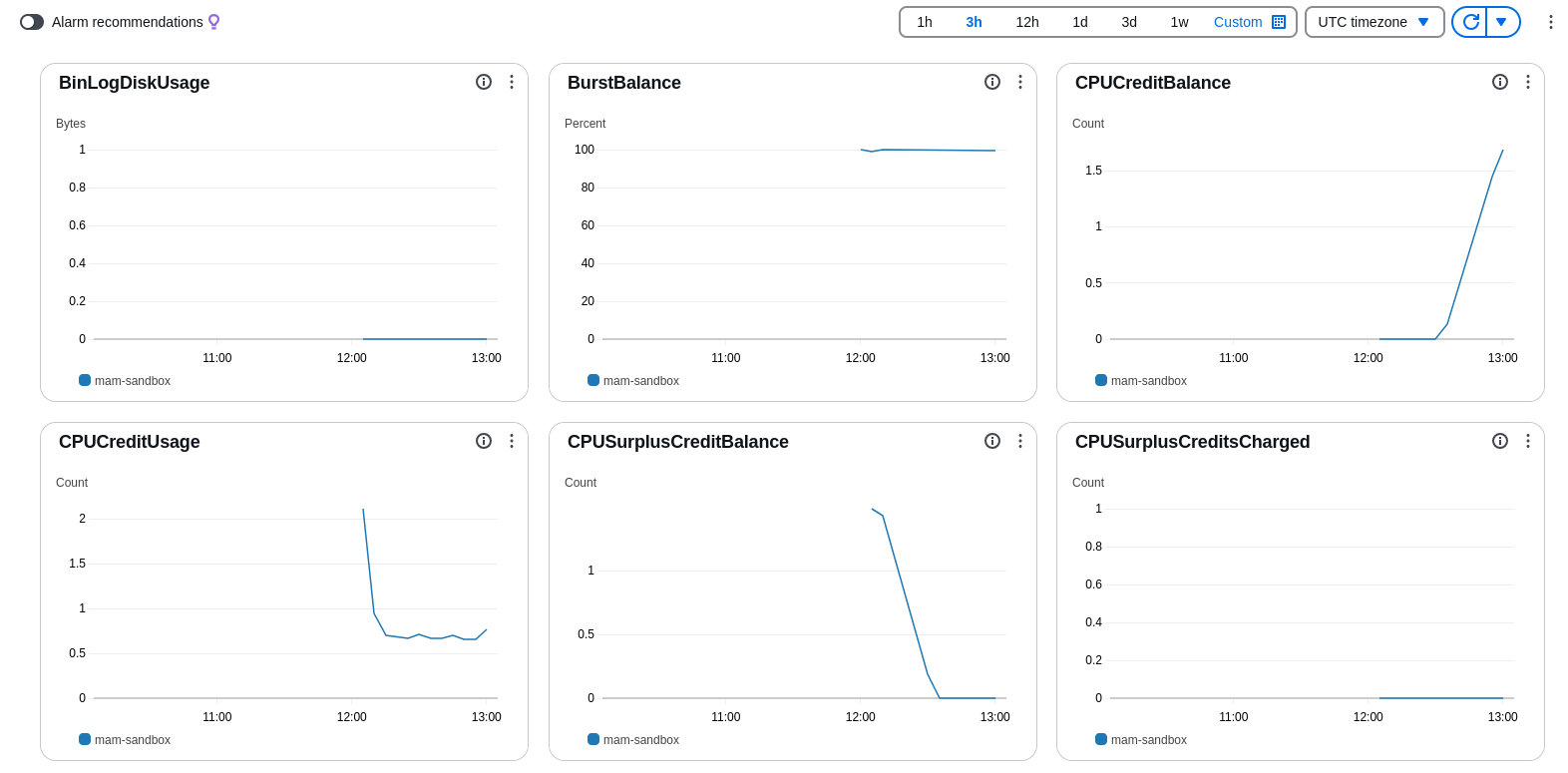

Monitoring Managed Services

For Amazon RDS, a CloudWatch alarm sends an email notification (through an Amazon SNS topic) to administrators when CPU utilization stays above 80% over a specified time period. This proactive measure helps maintain performance and prevent potential disruptions. Similar alarms can be configured for the other managed services on critical metrics such as CPU utilization, memory usage, and disk space.
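
The RDS CPU alarm described above can be expressed as the arguments of the CloudWatch `PutMetricAlarm` API. The following is a minimal sketch, assuming a 5-minute period evaluated over 3 consecutive periods (the article only says "a specified time period"). The instance identifier, SNS topic ARN, and alarm naming scheme are hypothetical.

```python
def rds_cpu_alarm(db_instance_id: str, sns_topic_arn: str,
                  threshold_pct: float = 80.0,
                  period_s: int = 300,
                  evaluation_periods: int = 3) -> dict:
    """Return keyword arguments for CloudWatch PutMetricAlarm.

    The alarm enters the ALARM state when the average CPUUtilization of the
    RDS instance is above threshold_pct for evaluation_periods consecutive
    periods of period_s seconds. It then publishes to the SNS topic, which
    emails its subscribers.
    """
    return {
        "AlarmName": f"{db_instance_id}-cpu-above-{int(threshold_pct)}pct",
        "Namespace": "AWS/RDS",
        "MetricName": "CPUUtilization",
        "Dimensions": [{"Name": "DBInstanceIdentifier", "Value": db_instance_id}],
        "Statistic": "Average",
        "Period": period_s,
        "EvaluationPeriods": evaluation_periods,
        "Threshold": float(threshold_pct),
        "ComparisonOperator": "GreaterThanThreshold",
        "AlarmActions": [sns_topic_arn],
    }


params = rds_cpu_alarm(
    "mam-db",                                         # hypothetical RDS instance identifier
    "arn:aws:sns:us-east-1:123456789012:mam-alerts",  # hypothetical SNS topic
)
```

With boto3, these arguments would be passed as `boto3.client("cloudwatch").put_metric_alarm(**params)`. Email delivery requires an `email` subscription on the SNS topic, which each recipient must confirm.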

Amazon RDS Metrics

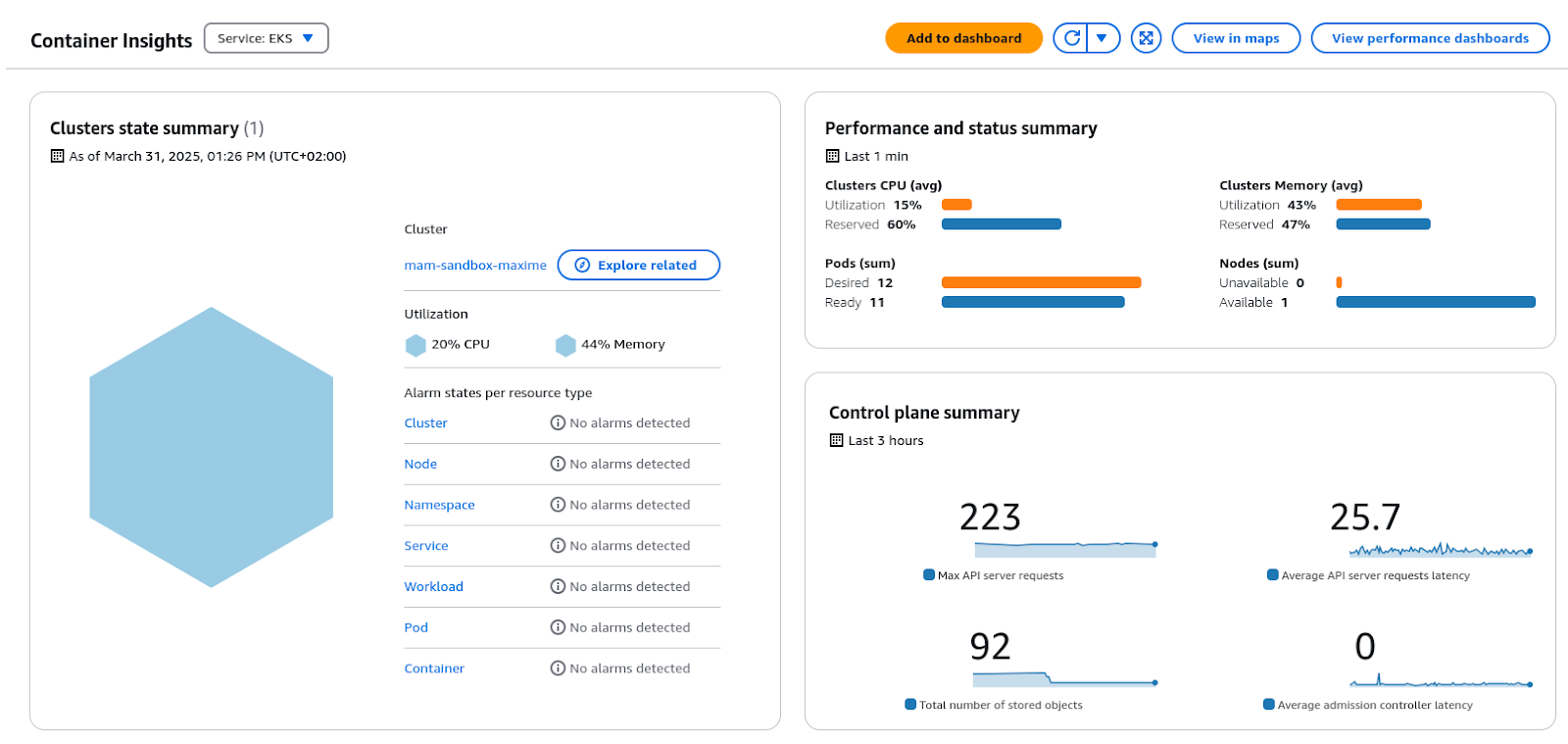

Monitoring Pods and the EKS Cluster

Amazon CloudWatch Container Insights is used to monitor the EKS cluster and associated pods. This service provides visibility into the performance and health of the Kubernetes environment by collecting and displaying critical metrics. It enables tracking of resource utilization, including CPU and memory usage, across nodes and individual pods.

Alarms can be configured for a wide range of components—nodes, namespaces, pods, and more—triggering notifications in the event of anomalies or threshold breaches.
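
Container Insights publishes its metrics under the `ContainerInsights` CloudWatch namespace, with dimensions such as `ClusterName`, `Namespace`, and `PodName`. The scope of an alarm is therefore set by the dimensions it filters on. The following is a minimal sketch, assuming the metric and dimension combinations shown here are published for the cluster (they should be checked against the Container Insights metrics reference). The cluster name, namespace, workload name, thresholds, and SNS topic are hypothetical.

```python
from typing import Optional


def container_insights_alarm(cluster: str, metric: str, threshold_pct: float,
                             sns_topic_arn: str,
                             namespace: Optional[str] = None,
                             pod: Optional[str] = None) -> dict:
    """Build PutMetricAlarm arguments for a Container Insights metric.

    The scope is the whole cluster by default. It can be narrowed to a
    Kubernetes namespace, and then to a single pod within that namespace.
    """
    if pod is not None and namespace is None:
        raise ValueError("a pod-scoped alarm also needs the pod's namespace")
    dims = [{"Name": "ClusterName", "Value": cluster}]
    if namespace is not None:
        dims.append({"Name": "Namespace", "Value": namespace})
    if pod is not None:
        dims.append({"Name": "PodName", "Value": pod})
    scope = "-".join(d["Value"] for d in dims)
    return {
        "AlarmName": f"{scope}-{metric}-above-{int(threshold_pct)}",
        "Namespace": "ContainerInsights",
        "MetricName": metric,
        "Dimensions": dims,
        "Statistic": "Average",
        "Period": 300,
        "EvaluationPeriods": 3,
        "Threshold": float(threshold_pct),
        "ComparisonOperator": "GreaterThanThreshold",
        "AlarmActions": [sns_topic_arn],
    }


TOPIC = "arn:aws:sns:us-east-1:123456789012:mam-alerts"  # hypothetical topic
alarms = [
    # Cluster-wide node CPU
    container_insights_alarm("mam-eks", "node_cpu_utilization", 80, TOPIC),
    # Memory of all pods in one namespace
    container_insights_alarm("mam-eks", "pod_memory_utilization", 85, TOPIC,
                             namespace="phraseanet"),
    # CPU of a single workload's pods
    container_insights_alarm("mam-eks", "pod_cpu_utilization", 90, TOPIC,
                             namespace="phraseanet", pod="phraseanet-worker"),
]
```

Each dictionary can be passed to `put_metric_alarm` in the same way as an alarm on a managed service, so both kinds of alert can share one SNS topic.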

CloudWatch Container Insights for EKS

Automating the Infrastructure with Auto Mode

EKS Auto Mode is used to deploy the MAM system instead of a traditional EKS setup. Auto Mode simplifies cluster management by automating the provisioning, scaling, and maintenance of compute, storage, and networking infrastructure. This reduces manual configuration and lets teams concentrate on deploying and managing applications.

How Does EKS Auto Mode Scale Automatically?

EKS Auto Mode dynamically adjusts the number of EC2 instances based on workload demand. It is built on Karpenter, which AWS runs and manages as part of the cluster. When pods cannot be scheduled, Auto Mode provisions nodes sized to their resource requests, and it consolidates or removes underused nodes. This keeps clusters appropriately resourced, reduces idle capacity, and improves responsiveness under load.
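
To make "nodes sized to their resource requests" concrete, the following is a deliberately simplified sketch of the kind of decision a Karpenter-style provisioner makes. Given the CPU and memory requests of pending pods, it picks the instance type and node count that fit them at the lowest hourly cost, using first-fit-decreasing bin packing. This is not Karpenter's actual algorithm, which also weighs availability zones, capacity type, scheduling constraints, and consolidation. The instance type names, sizes, and prices are hypothetical, and allocatable capacity ignores system-reserved resources.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Pod:
    name: str
    cpu_m: int   # CPU request in millicores
    mem_mi: int  # memory request in MiB


@dataclass(frozen=True)
class InstanceType:
    name: str          # hypothetical type name
    cpu_m: int         # allocatable CPU in millicores (simplified)
    mem_mi: int        # allocatable memory in MiB (simplified)
    hourly_usd: float  # hypothetical price


def plan_nodes(pending: list[Pod],
               types: list[InstanceType]) -> Optional[tuple[str, int, float]]:
    """Return (instance type, node count, hourly cost) for the cheapest plan.

    Each candidate type is evaluated with first-fit-decreasing bin packing on
    the pods' CPU and memory requests. Returns None if no type fits every pod.
    """
    best: Optional[tuple[str, int, float]] = None
    for t in types:
        if any(p.cpu_m > t.cpu_m or p.mem_mi > t.mem_mi for p in pending):
            continue  # at least one pod is too large for this type
        free: list[list[int]] = []  # remaining [cpu_m, mem_mi] per planned node
        for p in sorted(pending, key=lambda p: (p.cpu_m, p.mem_mi), reverse=True):
            for node in free:
                if node[0] >= p.cpu_m and node[1] >= p.mem_mi:
                    node[0] -= p.cpu_m
                    node[1] -= p.mem_mi
                    break
            else:  # no planned node has room: add a new one
                free.append([t.cpu_m - p.cpu_m, t.mem_mi - p.mem_mi])
        cost = len(free) * t.hourly_usd
        if best is None or cost < best[2]:
            best = (t.name, len(free), cost)
    return best


# Four transcoding workers and two FPM pods waiting to be scheduled.
pods = [Pod(f"worker-{i}", 1500, 3072) for i in range(4)]
pods += [Pod(f"fpm-{i}", 500, 512) for i in range(2)]
types = [InstanceType("small", 2000, 4096, 0.05),
         InstanceType("large", 8000, 16384, 0.16)]
print(plan_nodes(pods, types))  # ('large', 1, 0.16)
```

Here four "small" nodes would cost 0.20 per hour, while a single "large" node fits every pod for 0.16. This is also why accurate resource requests in the pod specifications matter: the provisioner can only size nodes from what the pods declare.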

Difference from Managed Node Groups

Managed Node Groups in EKS automate the provisioning and lifecycle of EC2 worker nodes. However, they still require users to choose instance types, set minimum, maximum, and desired node counts, install an autoscaler such as Cluster Autoscaler to react to pending pods, and manage node version updates. This allows greater customization but also increases operational complexity compared to Auto Mode, which handles these decisions automatically.

Configuring Auto Mode

The use of Auto Mode is declared in the Terraform configuration, along with the built-in general-purpose node pool. When Kubernetes manifests are deployed with Helm, Auto Mode provisions nodes that fit the workloads' resource requests. Because the whole setup is managed through Terraform, the deployment experience stays straightforward and reproducible.
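
Terraform accepts configuration in JSON syntax (`.tf.json` files) as well as HCL, so the Auto Mode settings can be sketched from Python. The following is a minimal sketch, assuming a recent AWS provider release in which `aws_eks_cluster` supports the `compute_config`, `storage_config`, and `kubernetes_network_config.elastic_load_balancing` blocks used for Auto Mode. The resource label `mam`, the IAM roles `aws_iam_role.cluster` and `aws_iam_role.node` (which must be declared elsewhere), the Kubernetes version, and the subnet IDs are hypothetical. The argument names should be verified against the provider documentation.

```python
import json


def auto_mode_cluster_tf_json(cluster_name: str, k8s_version: str,
                              subnet_ids: list[str]) -> str:
    """Render an aws_eks_cluster resource with EKS Auto Mode in Terraform JSON syntax."""
    cluster = {
        "name": cluster_name,
        "version": k8s_version,
        "role_arn": "${aws_iam_role.cluster.arn}",  # hypothetical cluster role
        "bootstrap_self_managed_addons": False,
        "access_config": {"authentication_mode": "API"},
        # Auto Mode compute: AWS-managed provisioning with the built-in node pool.
        "compute_config": {
            "enabled": True,
            "node_pools": ["general-purpose"],
            "node_role_arn": "${aws_iam_role.node.arn}",  # hypothetical node role
        },
        # Auto Mode networking (load balancing) and storage (EBS block storage).
        "kubernetes_network_config": {"elastic_load_balancing": {"enabled": True}},
        "storage_config": {"block_storage": {"enabled": True}},
        "vpc_config": {"subnet_ids": subnet_ids},
    }
    return json.dumps({"resource": {"aws_eks_cluster": {"mam": cluster}}}, indent=2)


rendered = auto_mode_cluster_tf_json("mam-eks", "1.31", ["subnet-aaa", "subnet-bbb"])
```

Writing `rendered` to a file such as `eks_auto_mode.tf.json` next to the rest of the configuration lets `terraform plan` pick it up like any `.tf` file. The equivalent HCL uses the same block and argument names.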

Additional details on EKS Auto Mode can be found in the full article available here: https://trackit.io/kubernetes-management-with-eks-auto-mode

Conclusion

Deploying a Media Asset Management system in a production environment becomes significantly more scalable and efficient with EKS Auto Mode and AWS managed services. Auto Mode reduces infrastructure management complexity, while managed services enhance performance, security, and reliability.

Automated scaling, real-time monitoring, and proactive alert systems contribute to a robust and responsive architecture, well-suited to dynamic production environments and the evolving demands of media asset workflows.

About TrackIt

TrackIt is an international AWS cloud consulting, systems integration, and software development firm headquartered in Marina del Rey, CA.

We have built our reputation on helping media companies architect and implement cost-effective, reliable, and scalable Media & Entertainment workflows in the cloud. These include streaming and on-demand video solutions, media asset management, and archiving, incorporating the latest AI technology to build bespoke media solutions tailored to customer requirements.

Cloud-native software development is at the foundation of what we do. We specialize in Application Modernization, Containerization, Infrastructure as Code and event-driven serverless architectures by leveraging the latest AWS services. Along with our Managed Services offerings which provide 24/7 cloud infrastructure maintenance and support, we are able to provide complete solutions for the media industry.