TL;DR: Clone ssh://git@github.com/trackit/ec2-hashicorp-tutorial.git. It contains Packer templates for Consul and Nomad nodes and a Terraform specification, so you can get a minimal setup up and running as quickly as possible.

In this article series I will show you how to use HashiCorp’s Packer, Terraform, Consul and Nomad on AWS EC2 as a platform to run your product. I used Packer to build the EC2 AMIs (from a Debian AMI), Terraform to set up the VPC and its resources, Consul to provide service discovery between the instances, and Nomad to manage the Docker tasks. For the sake of brevity, this article describes a minimal setup for this scheme. You can, however, find the source code of our current setup on GitHub at https://github.com/trackit/ec2-hashicorp-tutorial. I will assume you are comfortable using AWS EC2. If you are not, a quick search should lead you to many good tutorials and documentation.


Configuring Consul and Nomad

In this section I will show you a minimal Consul and Nomad configuration for both slave and master instances. You can find it at ssh://git@github.com/trackit/ec2-hashicorp-tutorial.git, along with all the other resources for this tutorial. In a later section we’ll see how to build these configurations into AMIs using HashiCorp Packer. The infrastructure we want to achieve needs two kinds of instances: master instances host the Consul and Nomad servers, while slave instances host the Consul and Nomad clients and run the tasks. These configurations are built for EC2 instances only and may need tweaking to run in another environment.

Master instances

Master instances are the brain of the infrastructure: the Consul servers hold the service discovery data and the key/value store, while the Nomad servers direct the Nomad nodes and place the tasks you want to run as efficiently as they can. For both Consul and Nomad, HashiCorp recommends running three or five servers, to provide redundancy and to prevent ties during leader elections (read more at https://www.consul.io/docs/guides/leader-election.html and https://www.nomadproject.io/docs/internals/consensus.html). In this article I will therefore assume that three master instances are running.
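The reason for three or five servers comes from majority voting: a cluster of n servers needs floor(n/2) + 1 of them to agree before it can elect a leader or commit a write. The short Python sketch below is plain arithmetic, not a Consul or Nomad API; it prints the quorum size and how many server failures each cluster size survives.

```python
def quorum_size(servers: int) -> int:
    """Smallest majority of a cluster with `servers` voting members."""
    if servers < 1:
        raise ValueError("a cluster needs at least one server")
    return servers // 2 + 1


def failure_tolerance(servers: int) -> int:
    """Number of servers that can fail while a majority is still reachable."""
    return servers - quorum_size(servers)


if __name__ == "__main__":
    for n in range(1, 8):
        print(f"{n} server(s): quorum {quorum_size(n)}, "
              f"tolerates {failure_tolerance(n)} failure(s)")
```

Three servers survive one failure and five survive two. A fourth server raises the quorum to three without tolerating any additional failure, which is why odd cluster sizes are preferred.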

Consul servers

First we need our Consul servers to discover each other. Thankfully, Consul has a built-in discovery mechanism for EC2 deployments that relies on EC2 instance tags. I will configure the servers as follows:

```json
{
  "server": true,
  "datacenter": "my-little-datacenter",
  "retry_join_ec2": {
    "tag_key": "ConsulAgent",
    "tag_value": "Server"
  },
  "bootstrap_expect": 3
}
```

The retry_join_ec2 block instructs Consul to discover servers through EC2: it looks for instances with the tag ConsulAgent=Server, so you will need to set this tag on your master instances. Because of the bootstrap_expect setting, the servers only start operating once three servers have joined the cluster. With this, the master instances should discover each other and form a Consul cluster shortly after being brought up.
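To make the discovery rule concrete, here is a toy Python model of the two settings above: keep the instances whose ConsulAgent tag equals Server, and only bootstrap once bootstrap_expect of them are known. The Instance record, the IP addresses and the "Client" tag value are hypothetical, and this is not how Consul is implemented internally; the real lookup goes through the EC2 API, which is why the instances need an instance profile.

```python
from dataclasses import dataclass, field


@dataclass
class Instance:
    """Hypothetical stand-in for an EC2 instance record."""
    private_ip: str
    tags: dict = field(default_factory=dict)


def find_consul_servers(instances, tag_key="ConsulAgent", tag_value="Server"):
    """Return the private IPs of instances tagged tag_key=tag_value."""
    return [i.private_ip for i in instances if i.tags.get(tag_key) == tag_value]


def can_bootstrap(discovered_servers, bootstrap_expect=3):
    """Mirror bootstrap_expect: wait until enough servers are known."""
    return len(discovered_servers) >= bootstrap_expect


if __name__ == "__main__":
    fleet = [
        Instance("10.0.0.10", {"ConsulAgent": "Server"}),
        Instance("10.0.0.11", {"ConsulAgent": "Server"}),
        Instance("10.0.0.20", {"ConsulAgent": "Client"}),  # hypothetical tag value
    ]
    servers = find_consul_servers(fleet)
    print(servers, can_bootstrap(servers))  # ['10.0.0.10', '10.0.0.11'] False
```

With only two tagged servers up, the cluster keeps waiting. This is why all three master instances must carry the tag.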

This is immediately useful to Nomad, as the Nomad agent can use the Consul agent on the same instance (a Consul server also serves the local client API) to announce itself and discover other Nomad servers. The datacenter setting will become useful when your infrastructure grows to span multiple datacenters, as Consul is built to be region- and datacenter-aware. For example, when doing service discovery it can be instructed to return only the services present in the current datacenter, to limit latency.
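Consul's catalog queries default to the datacenter of the agent you ask, so a lookup made on one of our instances only returns services registered in my-little-datacenter unless another datacenter is requested explicitly. The Python toy below models that filtering over an in-memory list. The ServiceEntry record, the "api" service and the second datacenter name are hypothetical and are not Consul's API.

```python
from typing import NamedTuple


class ServiceEntry(NamedTuple):
    """Hypothetical catalog record: one registered service instance."""
    name: str
    address: str
    datacenter: str


def lookup(catalog, service, datacenter):
    """Return the addresses of `service` registered in `datacenter` only."""
    return [e.address for e in catalog
            if e.name == service and e.datacenter == datacenter]


if __name__ == "__main__":
    catalog = [
        ServiceEntry("api", "10.0.0.20", "my-little-datacenter"),
        ServiceEntry("api", "10.1.0.20", "my-other-datacenter"),
    ]
    print(lookup(catalog, "api", "my-little-datacenter"))  # ['10.0.0.20']
```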

Nomad servers

```hcl
datacenter = "my-little-datacenter"
region     = "my-region"
data_dir   = "/var/lib/nomad/data/"

server {
  enabled          = true
  bootstrap_expect = 3
}

client {
  enabled = false
}

consul {
  auto_advertise   = true
  server_auto_join = true
}
```

Most Nomad options are either self-explanatory or similar to the Consul configuration we saw earlier. datacenter and region tell the agent where it is located, so that jobs can be restricted to run only in some places. A Nomad agent can be a server, a client, or both (there is also a dev mode, which you should obviously not use in production). The data_dir option is required and must be an absolute path to the directory where Nomad stores its data.

It should not be a temporary directory, as Nomad can rejoin a cluster it lost contact with and recover using this data. The consul block is especially interesting: auto_advertise makes Nomad register its own services in the local Consul agent, and server_auto_join makes it discover the other Nomad servers through Consul. No address is needed because Nomad talks to Consul at 127.0.0.1:8500 by default, which is also Consul’s default HTTP address. Just like we did for Consul, we use bootstrap_expect so that the Nomad cluster only starts operating once three servers participate.
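Here is a small Python pre-flight check for those two data_dir rules: the path must be absolute and should not be temporary. The list of "temporary" roots (/tmp, /var/tmp, /run) is an assumption for a Debian-like system, and check_data_dir is a hypothetical helper, not part of Nomad.

```python
from pathlib import PurePosixPath

# Assumption: locations treated as temporary on a Debian-like system.
TEMP_ROOTS = (PurePosixPath("/tmp"), PurePosixPath("/var/tmp"), PurePosixPath("/run"))


def check_data_dir(path: str) -> list:
    """Return a list of problems with a Nomad data_dir value (empty if none)."""
    problems = []
    p = PurePosixPath(path)
    if not p.is_absolute():
        problems.append("data_dir must be an absolute path")
    elif any(p == root or root in p.parents for root in TEMP_ROOTS):
        problems.append("data_dir should not live in a temporary directory")
    return problems


if __name__ == "__main__":
    for candidate in ("/var/lib/nomad/data/", "nomad/data", "/tmp/nomad"):
        print(candidate, check_data_dir(candidate) or "OK")
```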

Slave instances

The slave instances run the Docker containers as directed by the master instances: they are the workforce of your cluster. They discover the master instances and register with them.

Consul clients

Consul clients, as opposed to servers, do not hold data. However, they are how an instance reaches the masters: rather than sending HTTP requests to the Consul servers directly, it is preferred to run a local Consul client and send requests through it. Its configuration is even simpler than the masters’ and uses the same EC2 tag-based discovery mechanism:

```json
{
  "server": false,
  "datacenter": "my-little-datacenter",
  "retry_join_ec2": {
    "tag_key": "ConsulAgent",
    "tag_value": "Server"
  }
}
```
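The client and server Consul configurations differ only in the server flag and in bootstrap_expect, so you could generate both from a single function. The Python sketch below does this. consul_config is a hypothetical helper and is not part of the tutorial repository.

```python
import json


def consul_config(server: bool, datacenter: str = "my-little-datacenter",
                  expect: int = 3) -> dict:
    """Build this article's Consul agent configuration for either role."""
    config = {
        "server": server,
        "datacenter": datacenter,
        # Both roles look for the servers through the same EC2 tag.
        "retry_join_ec2": {"tag_key": "ConsulAgent", "tag_value": "Server"},
    }
    if server:
        # Only servers take part in the initial cluster bootstrap.
        config["bootstrap_expect"] = expect
    return config


if __name__ == "__main__":
    print(json.dumps(consul_config(server=False), indent=2))
```

Having one source for both files keeps the tag key and datacenter name consistent between masters and slaves.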

Nomad nodes

In Nomad terminology, the instances running the tasks are called “nodes”. It is generally recommended that servers and nodes run on different instances. Once again, the configuration is very similar to the masters’:

```hcl
datacenter = "my-little-datacenter"
region     = "my-region"

server {
  enabled = false
}

client {
  enabled = true
}

consul {
  auto_advertise   = true
  client_auto_join = true
}

data_dir = "/var/lib/nomad"
```

Most importantly, the server and client blocks are changed to reflect that the agent must act as a client and not as a server, and server_auto_join becomes client_auto_join, so that the node uses Consul to find the Nomad servers.
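The Python sketch below renders the Nomad configuration shown above for either role, which makes the differences explicit: the enabled flags, bootstrap_expect, and the auto-join key. nomad_config is a hypothetical helper. It writes the blocks in a fixed order that differs slightly from the listings above, which HCL does not care about.

```python
def nomad_config(role: str, datacenter: str = "my-little-datacenter",
                 region: str = "my-region", data_dir: str = "/var/lib/nomad",
                 expect: int = 3) -> str:
    """Render this article's Nomad agent HCL for role 'server' or 'client'."""
    if role not in ("server", "client"):
        raise ValueError("role must be 'server' or 'client'")
    is_server = role == "server"
    if is_server:
        server_body = f"  enabled = true\n  bootstrap_expect = {expect}\n"
    else:
        server_body = "  enabled = false\n"
    client_enabled = "false" if is_server else "true"
    # Servers join each other through Consul; clients use Consul to find servers.
    join_key = "server_auto_join" if is_server else "client_auto_join"
    return (
        f'datacenter = "{datacenter}"\n'
        f'region = "{region}"\n'
        f'data_dir = "{data_dir}"\n'
        "\n"
        f"server {{\n{server_body}}}\n"
        "\n"
        f"client {{\n  enabled = {client_enabled}\n}}\n"
        "\n"
        f"consul {{\n  auto_advertise = true\n  {join_key} = true\n}}\n"
    )


if __name__ == "__main__":
    print(nomad_config("server", data_dir="/var/lib/nomad/data/"))
    print(nomad_config("client"))
```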

Running Consul and Nomad

Consul and Nomad both ship as statically linked binaries, which means they have no dependencies. Installing them is as simple as moving them to a directory in your PATH and making sure they are executable. Our example setup runs them on Debian 9 “Stretch”, which uses systemd, so we naturally use unit files to have systemd manage the services. You will find the unit files in the example repository.
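The repository's unit files are not reproduced here. As a rough idea of what such a file contains, the Python sketch below renders a minimal systemd unit. It assumes the binaries live in /usr/local/bin and the configurations in /etc/consul.d and /etc/nomad.d. Those paths and the unit_file helper are hypothetical, while `consul agent -config-dir` and `nomad agent -config` are the agents' real flags.

```python
def unit_file(name: str, exec_start: str,
              after: tuple = ("network-online.target",)) -> str:
    """Render a minimal, hypothetical systemd unit for an agent binary."""
    after_line = " ".join(after)
    return "\n".join([
        "[Unit]",
        f"Description={name} agent",
        f"Wants={after_line}",
        f"After={after_line}",
        "",
        "[Service]",
        f"ExecStart={exec_start}",
        "Restart=on-failure",
        "",
        "[Install]",
        "WantedBy=multi-user.target",
        "",
    ])


if __name__ == "__main__":
    # Paths are assumptions: adjust them to where you installed binaries and configs.
    print(unit_file("Consul", "/usr/local/bin/consul agent -config-dir=/etc/consul.d"))
    print(unit_file("Nomad", "/usr/local/bin/nomad agent -config=/etc/nomad.d",
                    after=("network-online.target", "consul.service")))
```

Saved as /etc/systemd/system/consul.service, such a unit would be picked up after `systemctl daemon-reload` and started at boot with `systemctl enable --now consul`.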

Building the AMIs

To build AMIs we can deploy on EC2, we will use Packer, another HashiCorp tool, whose aim is to make it easy to build any sort of image or package. For example, it can build EC2 AMIs, Docker images, VirtualBox images, and many more. Packer uses a JSON file, called a template, to describe the build process for the image.

This JSON file contains two important sections. builders is a list of maps, each describing a platform for your build process. By providing several builders you can reproduce identical provisioning processes with various types of output: you can build EC2 AMIs for production and Vagrant-wrapped VirtualBox images for your developers! provisioners lists the steps run on the build machine before the image is created. Here, a file provisioner uploads the files directory and a shell provisioner runs the setup scripts. As usual with HashiCorp, most options are quite explicit.

```json
{
  "description": "Master",
  "variables": {
    "aws_access_key": "",
    "aws_secret_key": ""
  },
  "builders": [
    {
      "type": "amazon-ebs",
      "ssh_username": "admin",
      "region": "us-east-1",
      "secret_key": "{{user `aws_secret_key`}}",
      "access_key": "{{user `aws_access_key`}}",
      "source_ami": "ami-ac5e55d7",
      "instance_type": "m4.large",
      "ami_name": "master-{{timestamp}}"
    }
  ],
  "provisioners": [
    {
      "type": "file",
      "source": "files",
      "destination": "/tmp/"
    },
    {
      "type": "shell",
      "scripts": [
        "scripts/packages.sh",
        "scripts/download-files.sh",
        "scripts/consul.sh",
        "scripts/nomad.sh"
      ],
      "execute_command": "{{.Vars}} sudo --preserve-env bash -x '{{.Path}}'"
    }
  ]
}
```

Two such configurations are provided in the accompanying repository, at /packer/master/packer.json and /packer/slave/packer.json. You can build your AMIs by going into the directory containing the JSON document and running `packer build packer.json`.

Packer will then look for AWS credentials in the standard places (for instance environment variables or the credentials file in ~/.aws/) and spin up a temporary instance. This instance is provisioned according to each entry in provisioners, then snapshotted and turned into an AMI you can deploy!
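Before paying for a build instance, you can have Packer check the template with `packer validate packer.json`. The Python sketch below is an extra, hypothetical lint on top of that. It checks that the amazon-ebs builder sets the keys used in this article and that ami_name contains {{timestamp}}, since AMI names must be unique within a region and a fixed name would make rebuilds fail. The expected key set reflects this article's template and is not Packer's own list of required options.

```python
import json
import sys

# Assumption: the keys this article's amazon-ebs builder sets, not Packer's rule set.
EXPECTED_EBS_KEYS = {"type", "region", "source_ami", "instance_type",
                     "ssh_username", "ami_name"}


def check_template(template: dict) -> list:
    """Hypothetical pre-flight lint for a Packer JSON template; returns problems."""
    problems = []
    builders = template.get("builders", [])
    if not builders:
        problems.append("template has no builders")
    for i, builder in enumerate(builders):
        if builder.get("type") != "amazon-ebs":
            continue
        missing = sorted(EXPECTED_EBS_KEYS - set(builder))
        if missing:
            problems.append(f"builder {i} is missing {', '.join(missing)}")
        # A timestamp keeps each rebuilt AMI name unique within the region.
        if "{{timestamp}}" not in builder.get("ami_name", ""):
            problems.append(f"builder {i}: ami_name has no {{{{timestamp}}}}")
    for i, step in enumerate(template.get("provisioners", [])):
        if "type" not in step:
            problems.append(f"provisioner {i} has no type")
    return problems


if __name__ == "__main__":
    # Usage (script name is hypothetical): python3 check_packer.py packer.json
    for path in sys.argv[1:]:
        with open(path, encoding="utf-8") as handle:
            print(path, check_template(json.load(handle)) or "OK")
```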

TIP: I like to save a copy of the build log by running `packer build packer.json | tee build.log`, as the log contains useful information such as the ID of the generated AMI, and because it can get quite long.
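To pull the AMI ID out of a saved build.log, the Python sketch below returns the last ami- identifier in the text. It relies on a heuristic: the log also mentions the source AMI earlier in the build, so it assumes the newly created AMI is the last one printed. That is plausible for a single-builder template like ours, but check it against your own log. The script name in the usage comment is hypothetical.

```python
import re
import sys

# Older AMI IDs have 8 hex digits, newer ones 17.
AMI_ID = re.compile(r"\bami-[0-9a-f]{8,17}\b")


def last_ami_id(log_text: str):
    """Return the last AMI ID mentioned in a Packer log, or None if there is none."""
    matches = AMI_ID.findall(log_text)
    return matches[-1] if matches else None


if __name__ == "__main__":
    # Usage (script name is hypothetical): python3 ami_from_log.py build.log
    for path in sys.argv[1:]:
        with open(path, encoding="utf-8") as log:
            print(path, last_ami_id(log.read()))
```

The printed ID is what goes into /terraform/amis.tf in the next section.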

Deploying with Terraform

Terraform is a very powerful tool which lets you describe your desired infrastructure as code and automate the deployment and updates of that infrastructure. Each time you tell Terraform to deploy, it computes a diff between what your infrastructure is and what it ought to be, and performs all the operations necessary to arrive at what you described. If you have experience with AWS CloudFormation, you will certainly feel at home.
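The diff idea can be illustrated with a toy Python model that compares the resources that exist against the resources described and sorts them into create, update and destroy. The resource addresses and attributes are hypothetical and do not come from the tutorial's configuration. Terraform's real planner also orders operations by dependency and decides whether a change can happen in place or needs a replacement, which this sketch ignores.

```python
def plan(current: dict, desired: dict) -> dict:
    """Toy diff between existing and described resources, keyed by address."""
    create = sorted(set(desired) - set(current))
    destroy = sorted(set(current) - set(desired))
    update = sorted(k for k in set(desired) & set(current)
                    if desired[k] != current[k])
    return {"create": create, "update": update, "destroy": destroy}


if __name__ == "__main__":
    # Hypothetical resource addresses and attributes.
    current = {"aws_vpc.main": {"cidr_block": "10.0.0.0/16"},
               "aws_autoscaling_group.slaves": {"desired_capacity": 2}}
    desired = {"aws_vpc.main": {"cidr_block": "10.0.0.0/16"},
               "aws_autoscaling_group.slaves": {"desired_capacity": 3},
               "aws_autoscaling_group.masters": {"desired_capacity": 3}}
    print(plan(current, desired))
    # {'create': ['aws_autoscaling_group.masters'], 'update': ['aws_autoscaling_group.slaves'], 'destroy': []}
```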

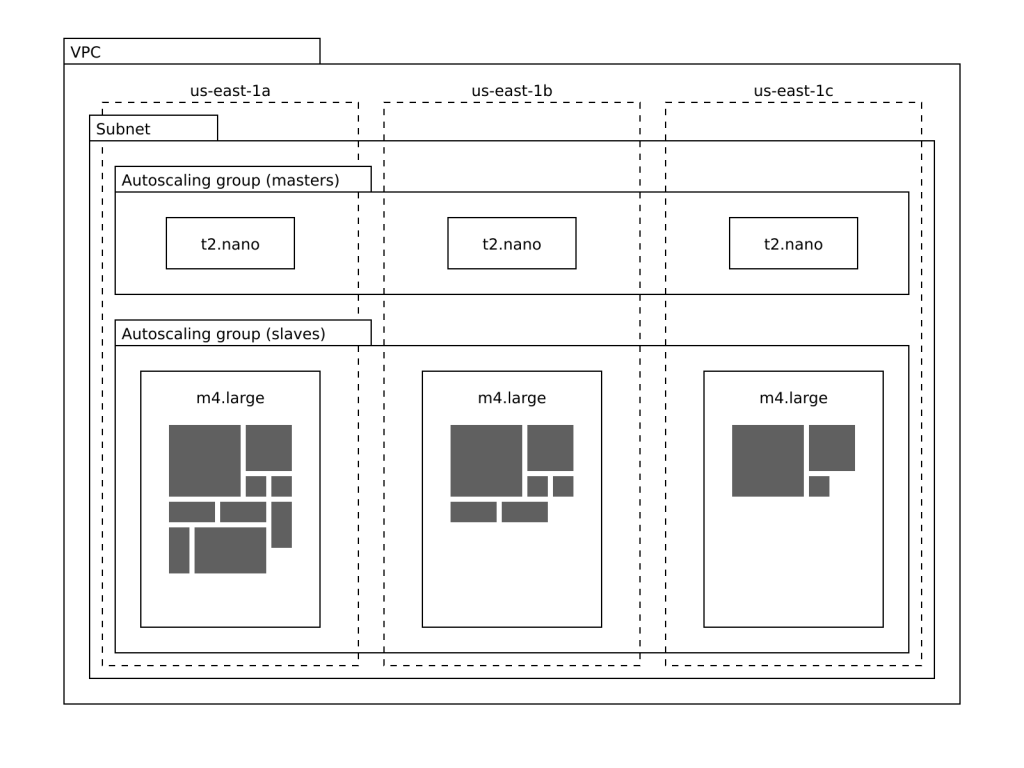

One advantage of Terraform over CloudFormation, though, is that it supports multiple providers and data sources. The repository for this tutorial contains a Terraform configuration you can use to deploy a minimal setup with the AMIs I showed you how to build. First, replace the contents of /terraform/ssh.pub with your SSH public key, so that you can connect to your instances. Then place your AMI IDs in /terraform/amis.tf. With that done, Terraform is ready to deploy the following resources and their dependencies:

- A VPC with a single subnet with public IPs.

- An autoscaling group of three _t2.nano_ master instances.

- An autoscaling group of two _m4.large_ slave instances.

- Security groups which allow SSH access to your instances, and all egress.

- An instance profile to allow Consul to get your EC2 metadata.

The example Terraform specification spawns a VPC with a subnet and two autoscaling groups.

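Now you can deploy in three steps (with recent Terraform versions, first run `terraform init` in the /terraform directory so that Terraform downloads the providers it needs):

- Run `terraform plan -out tf.plan`.

- Read what Terraform intends to do to get you the infrastructure you want.

- If all is in order, run `terraform apply tf.plan`.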

TIP: While you could deploy by simply running `terraform apply`, I strongly recommend doing it in two steps: `terraform plan -out tf.plan` and then `terraform apply tf.plan`. This lets you inspect Terraform’s intended course of action before it affects anything in your infrastructure. Most of the time it will be exactly what you intended, but there have been times I wished I had read it carefully before applying it.

Once you have deployed your infrastructure, you can SSH into your instances. Running `consul members` lets you confirm that Consul’s EC2-based discovery worked, `nomad server-members` gives you the list of Nomad servers and the cluster’s leader, and `nomad node-status` gives you some information about your slave instances (in newer Nomad releases these commands are `nomad server members` and `nomad node status`).

NOTE: If you used the sample code, which builds Debian 9 instances, you can connect via SSH as the admin user.

To destroy the infrastructure you created, instruct Terraform to plan its destruction with `terraform plan -destroy -out tf.plan`, then apply the plan with the usual `terraform apply tf.plan`. In the next part of this tutorial, I will show you how to use Nomad to run tasks on your instances efficiently while automatically recovering from node failures.

About TrackIt

TrackIt is an international AWS cloud consulting, systems integration, and software development firm headquartered in Marina del Rey, CA.

We have built our reputation on helping media companies architect and implement cost-effective, reliable, and scalable Media & Entertainment workflows in the cloud. These include streaming and on-demand video solutions, media asset management, and archiving, incorporating the latest AI technology to build bespoke media solutions tailored to customer requirements.

Cloud-native software development is at the foundation of what we do. We specialize in Application Modernization, Containerization, Infrastructure as Code and event-driven serverless architectures by leveraging the latest AWS services. Along with our Managed Services offerings which provide 24/7 cloud infrastructure maintenance and support, we are able to provide complete solutions for the media industry.