TrackIt launched a proof-of-concept (POC) project to explore the potential of video upscaling. The primary objective was to use Amazon SageMaker to develop a super-resolution model that applies machine learning techniques to improve video quality. The following sections provide an overview of the project and the insights gained.

Solution Architecture

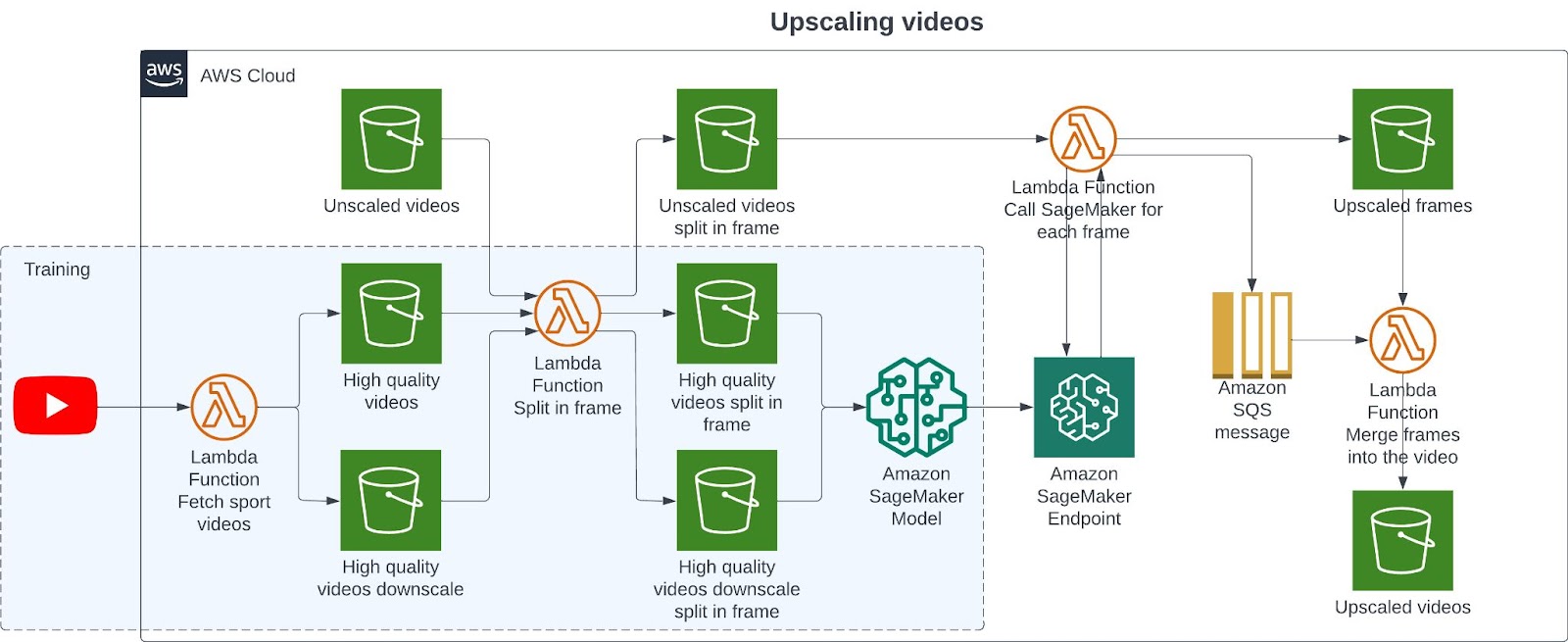

The proposed architecture uses Amazon Web Services (AWS) cloud computing services to enhance video resolution. It upscales videos with a machine-learning model without relying on traditional hardware infrastructure.

The pipeline runs in the AWS Cloud and is divided into two phases: Training and Upscaling. In the Training phase, an AWS Lambda function fetches sports videos from platforms such as YouTube and stores them in Amazon S3 buckets. These videos form the base dataset for training the machine learning models. A second Lambda function splits the high-quality videos into individual frames, which become the training examples.
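The frame-splitting step can be sketched as follows. This is a minimal illustration rather than the project's actual Lambda code: it assumes the `ffmpeg` binary is available to the function (for example through a Lambda layer), and the names `build_extract_command`, `extract_frames`, and the `frame_%06d.png` naming pattern are hypothetical.

```python
import subprocess
from pathlib import Path
from typing import List, Optional


def build_extract_command(video_path: str, frames_dir: str,
                          fps: Optional[int] = None) -> List[str]:
    """Build an ffmpeg command that writes the video's frames as numbered PNGs."""
    cmd = ["ffmpeg", "-i", video_path]
    if fps is not None:
        # Optional: sample at a fixed rate instead of keeping every frame.
        cmd += ["-vf", f"fps={fps}"]
    # Zero-padded names (frame_000001.png, ...) keep frames in order when sorted.
    cmd.append(str(Path(frames_dir) / "frame_%06d.png"))
    return cmd


def extract_frames(video_path: str, frames_dir: str,
                   fps: Optional[int] = None) -> None:
    """Run ffmpeg; assumes the binary is on PATH (an assumption, not from the post)."""
    Path(frames_dir).mkdir(parents=True, exist_ok=True)
    subprocess.run(build_extract_command(video_path, frames_dir, fps), check=True)
```

In a Lambda function, the video would first be downloaded from S3 to local storage, and the resulting frames uploaded back to a bucket afterwards.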

In the Upscaling phase, videos that have not yet been upscaled are divided into frames. A Lambda function then uses Amazon SageMaker to apply the machine-learning model to each frame. Amazon SageMaker provides the computational environment for model training and deployment at scale. Processed frames are queued through Amazon Simple Queue Service (SQS), and a final Lambda function merges the enhanced frames into a single upscaled video stored in an S3 bucket.
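One detail worth handling in the merge step: a standard SQS queue does not guarantee ordering and may deliver a message more than once, so the merging function has to restore frame order and drop duplicates before encoding. The sketch below shows one way to do that, assuming (hypothetically) that each message carries an object key ending in `frame_<index>.png` and that the expected frame count is known.

```python
import re
from typing import Iterable, List

# Hypothetical key layout: any prefix, then frame_<index>.png
FRAME_KEY = re.compile(r"frame_(\d+)\.png$")


def frame_index(key: str) -> int:
    """Extract the numeric frame index from an object key."""
    match = FRAME_KEY.search(key)
    if match is None:
        raise ValueError(f"not a frame key: {key}")
    return int(match.group(1))


def order_frames(keys: Iterable[str], expected_count: int) -> List[str]:
    """Return keys sorted by frame index, raising if any frame is missing."""
    # Building a dict by index drops duplicate deliveries of the same frame.
    by_index = {frame_index(key): key for key in keys}
    missing = [i for i in range(1, expected_count + 1) if i not in by_index]
    if missing:
        raise LookupError(f"missing frames: {missing}")
    return [by_index[i] for i in range(1, expected_count + 1)]
```

Raising on missing frames lets the merge function retry later instead of producing a video with gaps.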

The result is an automated pipeline for multimedia content processing that relies on AWS managed services to enhance video quality efficiently.

Amazon SageMaker Implementation

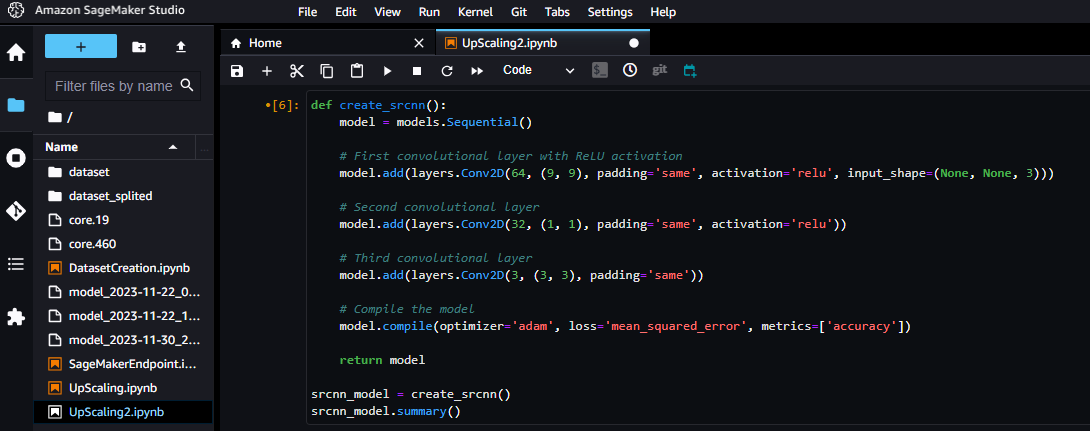

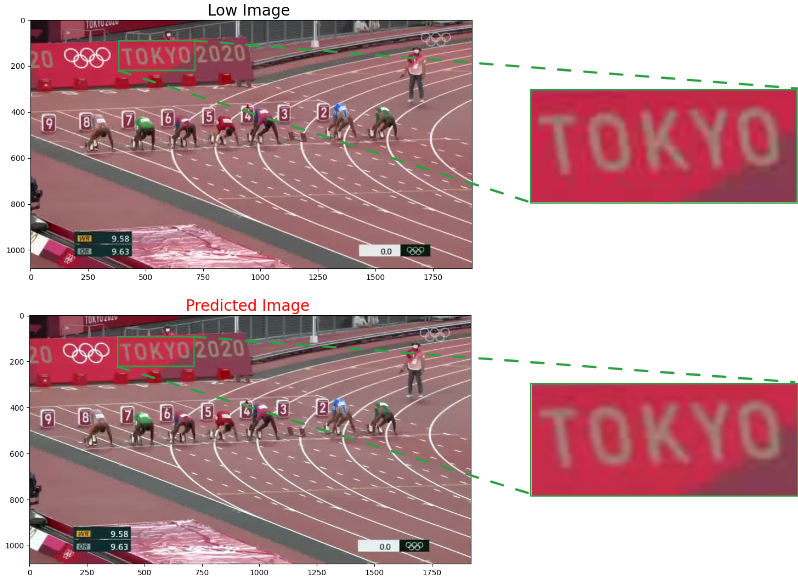

Initial Upscaling Experiment with TensorFlow

In an initial attempt at upscaling video frames, a sequential TensorFlow model with four 2D convolutional (Conv2D) layers was used. The model was designed to upscale images from 480p to 1080p. With an initial dataset of 1,000 images, training was quick and the results were promising. However, the computational demands quickly exceeded the capabilities of a personal computer, prompting a transition to AWS.
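To make the 480p to 1080p target concrete, the short calculation below works out the scale factors involved. It assumes 16:9 frames of 854×480 and 1920×1080 pixels; the post does not state the exact frame dimensions, so these values are an assumption.

```python
def scale_factors(src, dst):
    """Return (width factor, height factor, pixel-count factor) between two sizes."""
    (src_w, src_h), (dst_w, dst_h) = src, dst
    return dst_w / src_w, dst_h / src_h, (dst_w * dst_h) / (src_w * src_h)


# Assumed 16:9 frame sizes for 480p and 1080p.
width_x, height_x, pixels_x = scale_factors((854, 480), (1920, 1080))
print(f"width x{width_x:.2f}, height x{height_x:.2f}, pixels x{pixels_x:.2f}")
```

The height factor is exactly 2.25, so the model has to produce about five output pixels for every input pixel, and the non-integer ratio rules out a simple 2× or 4× upsampling layer on its own.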

Moving the work to an Amazon SageMaker ml.r5.2xlarge instance, equipped with 8 vCPUs and 64 GiB of memory, addressed this challenge. The additional resources made it possible to handle larger datasets and train more complex models, overcoming the limitations of local hardware.

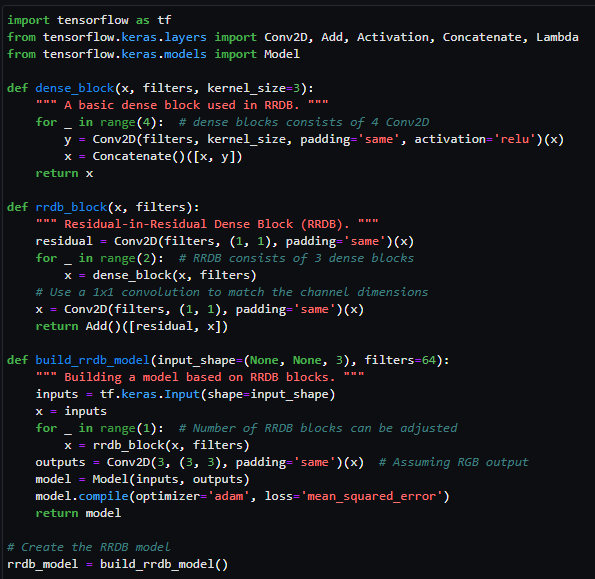

Advancing to ESRGAN

To improve resolution quality, attention shifted to a more advanced model, Enhanced Super-Resolution Generative Adversarial Networks (ESRGAN), based on the research of Xintao Wang et al. Given the intricate architecture and computational demands of ESRGAN, a simplified version of the neural network was implemented.

To meet the higher resource requirements of this model, the Amazon SageMaker instance was scaled from an ml.r5.2xlarge to an ml.m5.24xlarge, offering 96 vCPUs and 384 GiB of memory. The ESRGAN-based network comprised approximately 67 million parameters, a significant increase from the roughly 20,000 parameters in the initial TensorFlow model.
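The gap between the two parameter counts can be illustrated with a quick calculation. A Conv2D layer with a k×k kernel has (k · k · input channels + 1) · output channels trainable parameters, the +1 accounting for the bias. The layer sizes below are hypothetical, chosen only to show how a four-layer convolutional stack lands near 20,000 parameters; they are not the project's actual configuration.

```python
def conv2d_params(kernel: int, in_channels: int, out_channels: int) -> int:
    """Trainable parameters in a Conv2D layer with a square kernel and a bias."""
    return (kernel * kernel * in_channels + 1) * out_channels


# Hypothetical four-layer stack: (kernel size, input channels, output channels).
layers = [(3, 3, 32), (3, 32, 32), (3, 32, 32), (3, 32, 3)]
small_model = sum(conv2d_params(*layer) for layer in layers)

esrgan_like = 67_000_000  # approximate figure quoted in the post
print(small_model)                       # 20259
print(round(esrgan_like / small_model))  # size ratio between the two models
```

At this scale the simplified ESRGAN is more than three thousand times larger than the initial model, which is consistent with the jump in instance size and training time.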

Despite the increase in computational resources, the training time and data requirements for the simplified ESRGAN model were substantial. After four hours of training, the results were still suboptimal, indicating the need for longer training, a more extensive dataset, and careful hyperparameter tuning. Although the simplified ESRGAN was less resource-intensive than the full model, it still incurred considerable costs because of the compute power and time required. Cost therefore becomes a factor in its own right, as extended use of such powerful AWS instances can result in significant expenses.

Conclusion & Thoughts

In summary, the exploration of video upscaling with Amazon SageMaker showed that, while the initial TensorFlow sequential model delivered quick and encouraging results, the task demands more computational resources than a personal computer typically offers. Further testing with a simplified ESRGAN model highlighted how resource-intensive state-of-the-art upscaling techniques are.

Even on a larger Amazon SageMaker instance, the simplified ESRGAN needed more training time and a larger dataset to achieve optimal performance. This project serves as a real-world illustration of the challenges encountered when deploying advanced machine learning models in a cloud computing environment. It highlights the trade-offs between upscaling quality, computational resource requirements, and associated costs.

https://github.com/trackit/upscaling-videos

About TrackIt

TrackIt is an international AWS cloud consulting, systems integration, and software development firm headquartered in Marina del Rey, CA.

We have built our reputation on helping media companies architect and implement cost-effective, reliable, and scalable Media & Entertainment workflows in the cloud. These include streaming and on-demand video solutions, media asset management, and archiving, incorporating the latest AI technology to build bespoke media solutions tailored to customer requirements.

Cloud-native software development is at the foundation of what we do. We specialize in Application Modernization, Containerization, Infrastructure as Code, and event-driven serverless architectures by leveraging the latest AWS services. Along with our Managed Services offerings, which provide 24/7 cloud infrastructure maintenance and support, we are able to provide complete solutions for the media industry.