You have probably all heard about Docker, the tool that lets DevOps teams automate application deployment with disconcerting speed. It is a form of virtualization that lets independent “containers” run within a single Linux instance.

With that said, we assume that you already know what Docker is.

In this article, we are going to see how to manage a collection of containers running on separate hosts by using Docker Swarm.

Orchestrating these containers requires a multi-host approach.

We are going to look at orchestration with Swarm by setting up an infrastructure made of one master machine and two node machines that together form our cluster.

Prerequisite: Certificates generation

Docker Swarm is native clustering for Docker. It turns a pool of Docker hosts into a single virtual Docker host.

First, we need to generate the keys and certificates that let us authenticate against each machine and secure the communication between all our machines with TLS.

To do this, you can refer to the official Docker documentation:

https://docs.docker.com/articles/https/

Once we have generated our certificates, we copy them to /etc/docker/certs/ on each node with the commands:

scp ca.pem server-cert.pem server-key.pem user@<node1>:/etc/docker/certs/
scp ca.pem server-cert.pem server-key.pem user@<node2>:/etc/docker/certs/
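With more than two nodes, the copy is easier to script. The sketch below loops over a list of node hostnames. The `copy_certs` function name and the `DRY_RUN` switch are assumptions made for illustration; they are not part of the original setup.

```shell
# Hypothetical helper: copy the TLS files to every node passed as an argument.
# Assumption: DRY_RUN=1 only prints the commands so they can be reviewed first.
CERT_FILES="ca.pem server-cert.pem server-key.pem"
CERT_DIR="/etc/docker/certs/"

copy_certs() {
  local node
  for node in "$@"; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "scp $CERT_FILES user@$node:$CERT_DIR"
    else
      # CERT_FILES is left unquoted on purpose so it splits into three paths.
      scp $CERT_FILES "user@$node:$CERT_DIR" || return 1
    fi
  done
}

# Usage: DRY_RUN=1 copy_certs <node1> <node2>
```

Running it once with `DRY_RUN=1` shows exactly which commands would be executed before any file is transferred.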

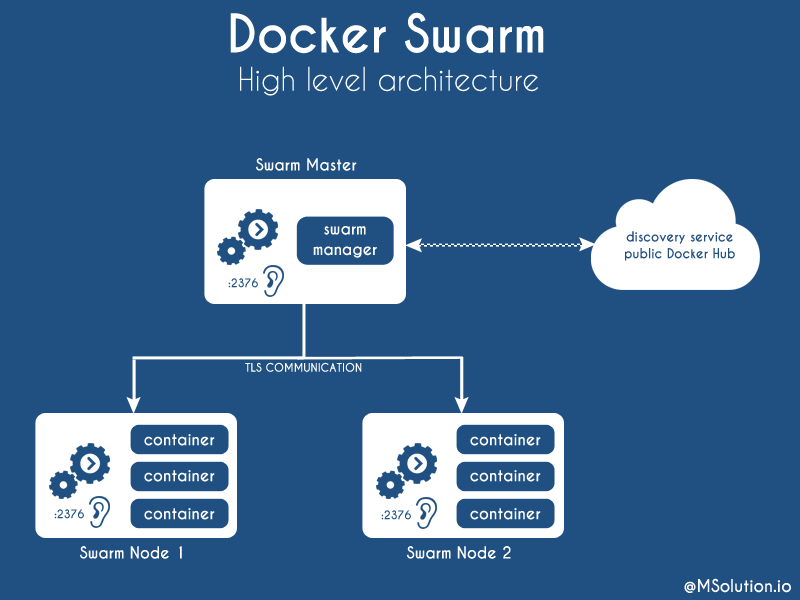

Before going further, take a look at the diagram to get a better idea of what we are going to build.

Nodes configuration

Docker daemon listener activation

Now we need to make the Docker daemon listen on port 2376 (the conventional port for TLS-secured Docker traffic) on each node of our cluster, so that all exchanges between our machines are secure.

To do that, start by stopping the docker service on each node:

service docker stop

Then, launch docker on port 2376 with TLS communication on each node:

docker -d --tlsverify --tlscacert=/etc/docker/certs/ca.pem --tlscert=/etc/docker/certs/server-cert.pem --tlskey=/etc/docker/certs/server-key.pem -H=0.0.0.0:2376 --label name=node1
docker -d --tlsverify --tlscacert=/etc/docker/certs/ca.pem --tlscert=/etc/docker/certs/server-cert.pem --tlskey=/etc/docker/certs/server-key.pem -H=0.0.0.0:2376 --label name=node2

Note that we assigned a name to each node of the cluster through the name label.

This will be useful later, when we need to launch a specific container on a specific host.
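Before moving on, it can help to confirm from the master machine that each daemon answers over TLS. The sketch below wraps the docker client in a small function; the `node_docker` name is hypothetical, and the client certificate paths are the ones used by the client commands later in this article.

```shell
# Hypothetical helper: run any docker client command against one node over TLS.
# Assumption: cert.pem and key.pem (client side) sit next to ca.pem on the master.
CERTS=/etc/docker/certs

node_docker() {
  local node_ip=$1
  shift
  docker --tls \
    --tlscacert="$CERTS/ca.pem" \
    --tlscert="$CERTS/cert.pem" \
    --tlskey="$CERTS/key.pem" \
    -H="tcp://$node_ip:2376" "$@"
}

# Usage: node_docker <ip address node1> version
```

If the daemon is not listening or the certificates do not match, `docker version` fails with an error instead of printing the server version, which makes it a cheap connectivity test.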

Docker Swarm cluster declaration

Once that is done, we can declare the cluster with Swarm.

The first step is to generate the token (unique ID) that will define our cluster.

On the master machine, run these commands:

docker pull swarm:latest
docker run --rm swarm create
-> 97928c6cd133478dde00ec60539f1e28
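Since the token is reused in every later command, it can be convenient to capture it in a variable. The sketch below does that and rejects anything that does not look like a token. The function names are hypothetical, and the 32-hexadecimal-character format is an assumption based on the token shown above.

```shell
# Assumption: a token is 32 lowercase hexadecimal characters, like the example.
is_valid_token() {
  printf '%s\n' "$1" | grep -Eq '^[0-9a-f]{32}$'
}

# Hypothetical helper: create a cluster token and print it if it looks valid.
create_cluster_token() {
  local token
  token=$(docker run --rm swarm create) || return 1
  if ! is_valid_token "$token"; then
    echo "unexpected token format: $token" >&2
    return 1
  fi
  printf '%s\n' "$token"
}

# Usage: SWARM_TOKEN=$(create_cluster_token)
```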

By default, the discovery is done by using the public Docker Hub, but there are other methods if you do not want to depend on the Hub. For more information on this topic please refer to the following documentation:

https://github.com/docker/swarm/tree/master/discovery#hosted-discovery-with-docker-hub

Then, still from the master machine, we start the Swarm agent on each of our nodes and join it to the cluster with the token obtained previously:

docker --tls --tlscacert=/etc/docker/certs/ca.pem --tlscert=/etc/docker/certs/cert.pem --tlskey=/etc/docker/certs/key.pem -H=tcp://<ip address node1>:2376 run -d swarm join --addr=<ip address node1>:2376 token://97928c6cd133478dde00ec60539f1e28
docker --tls --tlscacert=/etc/docker/certs/ca.pem --tlscert=/etc/docker/certs/cert.pem --tlskey=/etc/docker/certs/key.pem -H=tcp://<ip address node2>:2376 run -d swarm join --addr=<ip address node2>:2376 token://97928c6cd133478dde00ec60539f1e28
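The two join commands differ only by the node address, so they can be expressed as a loop. This is a minimal sketch under that assumption; the `join_nodes` function name is hypothetical.

```shell
# Hypothetical helper: join every node IP given after the token to the cluster.
CERTS=/etc/docker/certs

join_nodes() {
  local token=$1 ip
  shift
  for ip in "$@"; do
    # Same command as in the article, with the node address factored out.
    docker --tls \
      --tlscacert="$CERTS/ca.pem" \
      --tlscert="$CERTS/cert.pem" \
      --tlskey="$CERTS/key.pem" \
      -H="tcp://$ip:2376" \
      run -d swarm join --addr="$ip:2376" "token://$token" || return 1
  done
}

# Usage: join_nodes 97928c6cd133478dde00ec60539f1e28 <ip address node1> <ip address node2>
```

The loop stops at the first node that fails to join, so a typo in one address does not go unnoticed.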

Our nodes are now integrated in the cluster and ready to communicate with our master machine.

Master configuration

Docker Swarm manager initiation

Now, we are going to launch the Swarm manager with the following command:

docker run -v /etc/docker/certs/:/home/ --name swarm -d swarm manage --tls --tlscacert=/home/ca.pem --tlscert=/home/cert.pem --tlskey=/home/key.pem token://97928c6cd133478dde00ec60539f1e28

Once this is done we can list the machines attached to our cluster via the command:

docker run --rm swarm list token://97928c6cd133478dde00ec60539f1e28

In order to simplify the usage of swarm, we are going to create aliases:

alias dockip="docker inspect --format '{{ .NetworkSettings.IPAddress }}'"

alias swarmdock="docker --tls -H=tcp://$(dockip swarm):2376"
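One caveat with the second alias: because it is written in double quotes, `$(dockip swarm)` is expanded once, when the alias is defined. If the manager container is recreated and gets a new IP address, the alias keeps pointing at the old one. A possible alternative, sketched here as shell functions with the same names, looks the address up on every call.

```shell
# Function equivalents of the two aliases (a sketch, not the article's setup).
dockip() {
  docker inspect --format '{{ .NetworkSettings.IPAddress }}' "$@"
}

swarmdock() {
  local manager_ip
  # Resolve the manager container's IP at call time, not at definition time.
  manager_ip=$(dockip swarm) || return 1
  docker --tls -H="tcp://$manager_ip:2376" "$@"
}

# Usage: swarmdock info
```

Functions also work inside scripts, where bash does not expand aliases by default.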

We can now get all the information about our cluster by running this command:

root@master ~ # swarmdock info
Containers: 3
 Running: 1
 Paused: 0
 Stopped: 2
Images: 9
Role: primary
Strategy: spread
Filters: health, port, dependency, affinity, constraint
Nodes: 2
 node1: X.X.X.X:2376
  └ Status: Healthy
  └ Containers: 3
  └ Reserved CPUs: 0 / 4
  └ Reserved Memory: 0 B / 8.178 GiB
  └ Labels: executiondriver=native-0.2, kernelversion=3.16.0-57-generic, name=node1, operatingsystem=Ubuntu 14.04.3 LTS, storagedriver=aufs
  └ Error: (none)
  └ UpdatedAt: 2016-02-08T14:13:15Z
 node2: X.X.X.X:2376
  └ Status: Pending
  └ Containers: 3
  └ Reserved CPUs: 0 / 4
  └ Reserved Memory: 0 B / 4.044 GiB
  └ Labels: executiondriver=native-0.2, kernelversion=3.16.0-59-generic, name=node2, operatingsystem=Ubuntu 14.04.3 LTS, storagedriver=aufs
  └ Error: (none)
  └ UpdatedAt: 2016-02-08T14:13:05Z
Plugins:
 Volume:
 Network:
Kernel Version: 3.16.0-30-generic
Operating System: linux
Architecture: amd64
CPUs: 4
Total Memory: 8.178 GiB
Name: d6aa01209f81
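The per-node Status field is the part of this output worth watching, since a node that is not Healthy (node2 is Pending here) may not receive containers. The following sketch extracts one "node status" pair per node with awk. It assumes the layout shown above, where each node line has the form `name: address:port` and is followed by a `Status:` line; the function name is hypothetical.

```shell
# Hypothetical helper: read `swarmdock info` output on stdin and print
# "<node> <status>" for each node. Assumes the layout shown in the article.
node_status() {
  awk '
    # Node header line, e.g. " node1: X.X.X.X:2376"
    NF == 2 && $1 ~ /:$/ && $2 ~ /:[0-9]+$/ { node = substr($1, 1, length($1) - 1) }
    # Status line under a node, e.g. "  └ Status: Healthy"
    $2 == "Status:" { print node, $3 }
  '
}

# Usage: swarmdock info | node_status
```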

Docker Swarm commands

To list all the containers launched in our cluster, we can run the command:

swarmdock ps

We can also list the containers launched on each of our nodes:

docker --tls --tlscacert=/etc/docker/certs/ca.pem --tlscert=/etc/docker/certs/cert.pem --tlskey=/etc/docker/certs/key.pem -H=tcp://<ip address node1>:2376 ps
docker --tls --tlscacert=/etc/docker/certs/ca.pem --tlscert=/etc/docker/certs/cert.pem --tlskey=/etc/docker/certs/key.pem -H=tcp://<ip address node2>:2376 ps

The usual docker commands work against the cluster in the same way.

Provisioning containers in the cluster

Now, let’s see how to run containers in our cluster.

As an example, we are going to run a MySQL database server in a container.

root@master ~ # swarmdock run -e MYSQL_ROOT_PASSWORD=mypassword --name db -v /home/mysql/:/var/lib/mysql/ -d mysql:latest
c39e40415eb7cf666d4baf1309b7120a33361aed2f08ca5b8901a84294cabe08

Then, to see our container, just run a ps command:

root@master ~ # swarmdock ps
CONTAINER ID   IMAGE          COMMAND                  CREATED          STATUS          PORTS      NAMES
c39e40415eb7   mysql:latest   "/entrypoint.sh mysql"   39 seconds ago   Up 38 seconds   3306/tcp   node2/d

As you can see from the NAMES column, the container was launched on node2.
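When the cluster runs many containers, the node prefix in the NAMES column can be extracted automatically. The sketch below assumes that NAMES is the last column and has the form `<node>/<container>`, as in the output above; the function name is hypothetical.

```shell
# Hypothetical helper: read `swarmdock ps` output on stdin and print
# "<node> <container>" for every container line.
container_nodes() {
  awk 'NR > 1 {
    # The last field is NAMES, e.g. "node2/db".
    n = split($NF, parts, "/")
    if (n == 2) print parts[1], parts[2]
  }'
}

# Usage: swarmdock ps | container_nodes
```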

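Something we need to be aware of is that the placement strategy takes into account the resource requirements of the container and the resources available on the hosts composing the cluster. The info output above reports Strategy: spread, which is the default and favors the node running the fewest containers. The binpack strategy does the opposite and fills one node as much as possible before using the next.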
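The Swarm manager accepts a `--strategy` option whose values include spread and binpack. To make the difference between the two concrete, here is a toy model that ranks nodes by container count only. It is a deliberate simplification and not Swarm's implementation, which also weighs CPU and memory; the `pick_node` function and its input format are hypothetical.

```shell
# Toy model: read "<node> <container count>" lines on stdin and print the
# node that the given strategy would prefer, looking at container count only.
pick_node() {
  local strategy=$1
  case "$strategy" in
    spread)  sort -k2,2n  | head -n 1 | cut -d' ' -f1 ;;  # fewest containers
    binpack) sort -k2,2nr | head -n 1 | cut -d' ' -f1 ;;  # most containers
    *) echo "unknown strategy: $strategy" >&2; return 1 ;;
  esac
}

# Usage: printf 'node1 3\nnode2 1\n' | pick_node spread
```

With node1 running three containers and node2 running one, spread picks node2 while binpack picks node1.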

We can also choose which node runs our MySQL container by passing a constraint as an environment variable.

root@master ~ # swarmdock run -e MYSQL_ROOT_PASSWORD=mypassword -e constraint:name==node1 --name bdd2 -v /home/mysql/:/var/lib/mysql/ -d mysql:latest

Here are some examples of constraints:

constraint:name!=node1 -> schedules the container on any node except node1.

constraint:name==/node[12]/ -> schedules the container on either node1 or node2.

constraint:name!=/node[12]/ -> schedules the container on any node except node1 and node2.

For more information about constraints, you can refer to the official Docker documentation: https://docs.docker.com/swarm/scheduler/filter/
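To check how a constraint expression from the examples above would treat a given node name, a small evaluator can be sketched in shell. This is a toy model and not Swarm's implementation: it only handles the name key, and it assumes that a `/regex/` pattern must match the whole name. The function name is hypothetical.

```shell
# Toy evaluator: succeeds if node name $2 satisfies constraint $1,
# e.g. "name==node1" or "name!=/node[12]/".
matches_constraint() {
  local expr=$1 name=$2 op pattern regex matched
  case "$expr" in
    name==*) op="=="; pattern=${expr#name==} ;;
    name!=*) op="!="; pattern=${expr#name!=} ;;
    *) echo "unsupported constraint: $expr" >&2; return 2 ;;
  esac
  case "$pattern" in
    /*/)  # /regex/ form: strip the slashes and match the whole name
      regex=${pattern#/}; regex=${regex%/}
      if printf '%s\n' "$name" | grep -Eq "^(${regex})\$"; then matched=yes; else matched=no; fi ;;
    *)    # plain form: exact comparison
      if [ "$name" = "$pattern" ]; then matched=yes; else matched=no; fi ;;
  esac
  if [ "$op" = "==" ]; then [ "$matched" = yes ]; else [ "$matched" = no ]; fi
}

# Usage: matches_constraint 'name==/node[12]/' node2 && echo "node2 is eligible"
```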

Conclusion

In this article, we have seen how to quickly and simply build a reliable and secure Docker cluster using Docker Swarm. In the next article, we will see how to integrate Docker Compose into our cluster in order to automate our deployments.
