Contents

- About Chainlink

- Deployment Methods

- Deploying Chainlink on EKS using Terraform

- Solution Architecture

- Step #1: Creating a Virtual Private Cloud (VPC)

- Step #2: Creating a Relational Database Service (RDS) Database

- Step #3: Creating EKS Cluster

- Step #4: Encrypting and Decrypting Secrets Using SOPS and Terraform

- Conclusion

- About TrackIt

About Chainlink

Chainlink is a decentralized blockchain oracle network that operates on the Ethereum blockchain and transfers tamper-proof data from off-chain sources to on-chain smart contracts. The network relies on multiple independent nodes, known as oracles, which retrieve data and information from off-chain sources and deliver it to on-chain smart contracts through data feeds.

Chainlink nodes are linked through a peer-to-peer network, which lets them work together to acquire the required data. Once the data is obtained, the nodes agree on a median value, and a single node submits this aggregated result to the Ethereum blockchain, where smart contracts can read it. Because only one transaction is submitted instead of one per node, the network saves on the gas fees required to carry out transactions. Smart contracts can then use the data to make decisions, such as a stop-loss mechanism that executes automatically when the price of a coin falls below a specific value.

Deployment Methods

There are several ways to deploy Chainlink on AWS. One option is Amazon EC2, as demonstrated by the AWS Chainlink Quickstart. Chainlink can also be deployed on Amazon ECS or Amazon EKS. This article uses EKS, for the reasons given in the section titled ‘Step #3: Creating EKS Cluster’.

Deploying Chainlink on EKS using Terraform

Terraform is a popular open-source infrastructure as code (IaC) tool used for managing and provisioning cloud infrastructure resources. It enables developers and operations teams to define, provision, and manage infrastructure resources such as virtual machines, storage, networking, and databases across multiple cloud providers and platforms in a consistent, repeatable, and automated manner.

This article outlines the steps TrackIt took to deploy a secure, reliable, and scalable Chainlink environment on AWS. The AWS infrastructure was deployed with a set of community-maintained Terraform modules. Note that these steps are not intended for a production environment. They will, however, guide readers through setting up their first Chainlink node.

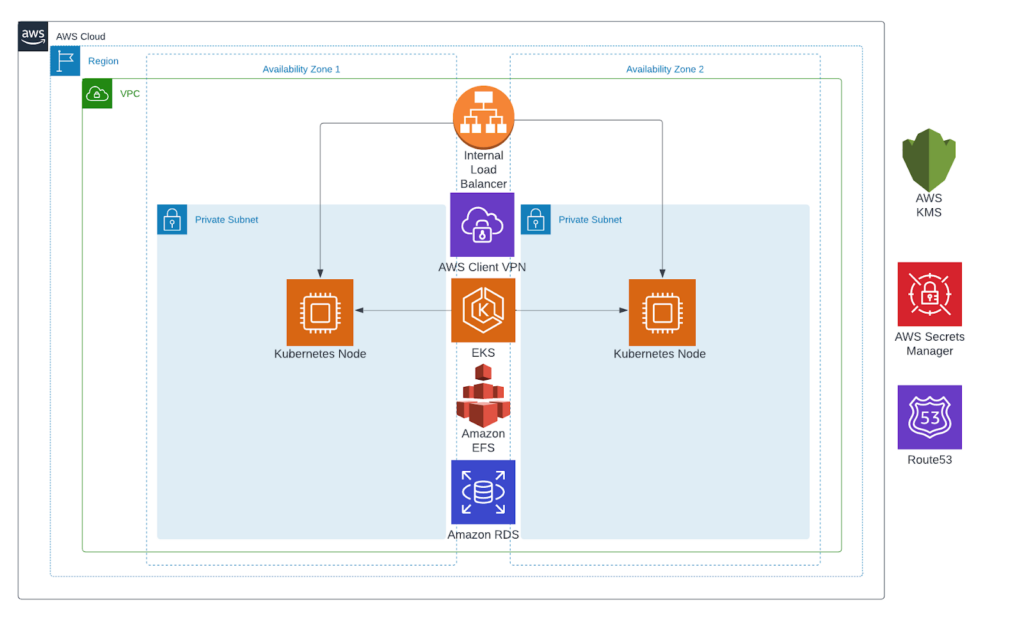

Solution Architecture

Solution Architecture for Chainlink on AWS

Step #1: Creating a Virtual Private Cloud (VPC)

The ‘vpc’ Terraform module is used to create a Virtual Private Cloud (VPC) complete with its subnets, an Internet Gateway, and NAT Gateways (one per Availability Zone).

```hcl
module "vpc" {
  source  = "terraform-aws-modules/vpc/aws"
  version = "~> 3.10.0"

  name = "${var.name}-${var.env}"
  cidr = var.vpc_cidr

  azs              = var.vpc_azs
  private_subnets  = var.vpc_private_cidrs
  public_subnets   = var.vpc_public_cidrs
  database_subnets = var.vpc_database_cidrs

  # One NAT Gateway per Availability Zone for high availability
  enable_nat_gateway     = true
  single_nat_gateway     = false
  one_nat_gateway_per_az = true

  enable_dns_hostnames = true
  enable_dns_support   = true
  create_igw           = true

  tags = merge(local.tags, {
    "kubernetes.io/cluster/${var.name}-${var.env}" = "shared"
  })

  # Public subnets are used for internet-facing load balancers
  public_subnet_tags = merge(local.tags, {
    "kubernetes.io/cluster/${var.name}-${var.env}" = "shared"
    "kubernetes.io/role/elb"                       = "1"
  })

  # Private subnets are used for internal load balancers
  private_subnet_tags = merge(local.tags, {
    "kubernetes.io/cluster/${var.name}-${var.env}" = "shared"
    "kubernetes.io/role/internal-elb"              = "1"
  })
}
```

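Tags on the VPC and its subnets prepare them for the Elastic Kubernetes Service (EKS) cluster and support the deployment process. The ‘kubernetes.io/cluster/<cluster-name>’ tag marks the VPC and subnets as usable by the cluster. The ‘kubernetes.io/role/elb’ and ‘kubernetes.io/role/internal-elb’ tags tell Kubernetes and the AWS Load Balancer Controller which subnets to use for internet-facing and internal load balancers, respectively.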
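The VPC module above references several input variables and a ‘local.tags’ map that are not shown in this article. The sketch below is one way to declare them. The names match the module call, but the default values (region, Availability Zones, CIDR ranges, tags) are illustrative assumptions, not TrackIt's settings.

```hcl
# Hypothetical declarations for the inputs used by the VPC module.
# All default values are illustrative only.
variable "name" {
  type    = string
  default = "chainlink"
}

variable "env" {
  type    = string
  default = "dev"
}

variable "vpc_cidr" {
  type    = string
  default = "10.0.0.0/16"
}

variable "vpc_azs" {
  type    = list(string)
  default = ["us-east-1a", "us-east-1b", "us-east-1c"]
}

variable "vpc_private_cidrs" {
  type    = list(string)
  default = ["10.0.1.0/24", "10.0.2.0/24", "10.0.3.0/24"]
}

variable "vpc_public_cidrs" {
  type    = list(string)
  default = ["10.0.101.0/24", "10.0.102.0/24", "10.0.103.0/24"]
}

variable "vpc_database_cidrs" {
  type    = list(string)
  default = ["10.0.201.0/24", "10.0.202.0/24", "10.0.203.0/24"]
}

locals {
  # Common tags merged into every resource
  tags = {
    Project     = var.name
    Environment = var.env
    ManagedBy   = "terraform"
  }
}
```

With ‘one_nat_gateway_per_az = true’, the module creates one NAT Gateway for each entry in ‘vpc_azs’. Three Availability Zones therefore mean three NAT Gateways, each of which is billed separately.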

Step #2: Creating a Relational Database Service (RDS) Database

The ‘rds-aurora’ Terraform module is used to deploy an Amazon Aurora PostgreSQL database. Note that Chainlink nodes require PostgreSQL version 11 or greater.

```hcl
module "rds" {
  source  = "terraform-aws-modules/rds-aurora/aws"
  version = "~> 6.1.4"

  name           = "${var.name}-${var.env}"
  engine         = "aurora-postgresql"
  instance_class = var.rds_instance_type
  instances = {
    1 = {}
    2 = {}
  }

  autoscaling_enabled      = true
  autoscaling_min_capacity = 2
  autoscaling_max_capacity = 2

  # Enhanced monitoring
  monitoring_interval           = 60
  iam_role_name                 = "${var.name}-monitor"
  iam_role_use_name_prefix      = true
  iam_role_description          = "${var.name} RDS enhanced monitoring IAM role"
  iam_role_path                 = "/autoscaling/"
  iam_role_max_session_duration = 7200

  storage_encrypted = true
  database_name     = var.name
  master_username   = "chainlink" # default: root
  port              = 5432

  # Use the database subnets created by the VPC module
  subnets                = module.vpc.database_subnets
  db_subnet_group_name   = module.vpc.database_subnet_group_name
  create_db_subnet_group = false

  # Only allow connections from inside the VPC
  allowed_cidr_blocks   = [var.vpc_cidr]
  vpc_id                = module.vpc.vpc_id
  create_security_group = true

  backup_retention_period = 30
  skip_final_snapshot     = true
  deletion_protection     = false

  tags = local.tags
}
```

Once the module is applied, the master password is generated automatically and can be read from the module outputs. As shown in the snippet below, a secret is then created in AWS Secrets Manager with a preformatted database URL that can be used in the Chainlink node environment variables without modification.

```hcl
resource "aws_secretsmanager_secret" "rds_url" {
  name                    = "${var.name}-${var.env}-psql-url"
  recovery_window_in_days = 0 # delete immediately, without a recovery window
  tags                    = local.tags
}

resource "aws_secretsmanager_secret_version" "url" {
  secret_id = aws_secretsmanager_secret.rds_url.id
  # Preformatted PostgreSQL connection URL for the Chainlink node
  secret_string = "postgres://chainlink:${module.rds.cluster_master_password}@${module.rds.cluster_endpoint}/${var.name}?sslmode=disable"
}
```
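The ‘rds’ module call in this step does not pin ‘engine_version’, so the Aurora PostgreSQL version AWS chooses by default applies. One way to enforce the PostgreSQL 11+ requirement is a validated variable that is passed to the module. The variable name ‘rds_engine_version’ and the default ‘13.7’ are assumptions. Check which Aurora PostgreSQL versions are available in the target region.

```hcl
# Hypothetical variable enforcing Chainlink's PostgreSQL 11+ requirement.
variable "rds_engine_version" {
  description = "Aurora PostgreSQL engine version for the Chainlink database"
  type        = string
  default     = "13.7" # example value; confirm availability in your region

  validation {
    # Compare the major version (the part before the first dot)
    condition     = tonumber(split(".", var.rds_engine_version)[0]) >= 11
    error_message = "Chainlink nodes require PostgreSQL 11 or greater."
  }
}

# In the module "rds" block, the version would then be passed as:
#   engine_version = var.rds_engine_version
```

With this in place, a value such as "10.21" fails at plan time instead of producing a database the node cannot use.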

Step #3: Creating EKS Cluster

To build a scalable Chainlink environment, TrackIt opted to use Amazon Elastic Kubernetes Service (EKS) instead of Amazon Elastic Container Service (ECS), because EKS offered better scalability for the workloads involved. Running Chainlink efficiently requires multiple workloads, so the Kubernetes package manager Helm was used. Helm makes it simple to template multiple workload configurations and replicate them. It also has a large user community and many ready-made Helm charts that can be reused. EKS was also chosen because it simplifies communication between workloads. The Amazon VPC Container Network Interface (CNI) plugin gives each pod an IP address in the VPC, and cluster DNS lets workloads reach each other by name.

The EKS cluster was deployed using the ‘eks’ Terraform module, which provides all the essential resources for a basic cluster, including the cluster itself and its nodes. The snippet also creates a KMS key that the cluster uses to encrypt Kubernetes Secrets.

```hcl
# KMS key used to encrypt Kubernetes Secrets in the cluster
resource "aws_kms_key" "eks" {
  description             = "EKS Secret Encryption Key"
  deletion_window_in_days = 7
  enable_key_rotation     = true
  tags                    = local.tags
}

module "eks" {
  source  = "terraform-aws-modules/eks/aws"
  version = "~> 18.0"

  cluster_name                    = "${var.name}-${var.env}"
  cluster_version                 = "1.22"
  cluster_endpoint_private_access = true
  cluster_endpoint_public_access  = true

  cluster_addons = {
    coredns = {
      resolve_conflicts = "OVERWRITE"
    }
    kube-proxy = {}
    vpc-cni = {
      resolve_conflicts = "OVERWRITE"
    }
  }

  cluster_encryption_config = [
    {
      provider_key_arn = aws_kms_key.eks.arn
      resources        = ["secrets"]
    }
  ]

  vpc_id     = var.vpc_id
  subnet_ids = var.private_subnets

  # Extend cluster security group rules
  cluster_security_group_additional_rules = {
    egress_nodes_ephemeral_ports_tcp = {
      description                = "To node 1025-65535"
      protocol                   = "tcp"
      from_port                  = 1025
      to_port                    = 65535
      type                       = "egress"
      source_node_security_group = true
    }
  }

  # Extend node-to-node security group rules
  node_security_group_additional_rules = {
    ingress_self_all = {
      description = "Node to node all ports/protocols"
      protocol    = "-1"
      from_port   = 0
      to_port     = 0
      type        = "ingress"
      self        = true
    }
    egress_all = {
      description      = "Node all egress"
      protocol         = "-1"
      from_port        = 0
      to_port          = 0
      type             = "egress"
      cidr_blocks      = ["0.0.0.0/0"]
      ipv6_cidr_blocks = ["::/0"]
    }
  }

  # EKS Managed Node Group(s)
  eks_managed_node_group_defaults = {
    ami_type                              = "AL2_x86_64"
    instance_types                        = ["m5.large"]
    attach_cluster_primary_security_group = false
    vpc_security_group_ids                = [aws_security_group.additional.id]
  }

  eks_managed_node_groups = {
    node = {
      min_size     = 2
      desired_size = 2
    }
  }

  # aws-auth configmap
  manage_aws_auth_configmap = true

  aws_auth_roles = [
    {
      rolearn  = var.role_arn
      username = var.username
      groups   = ["system:masters"]
    },
  ]

  aws_auth_users = [
    {
      userarn  = var.user_arn
      username = var.username
      groups   = ["system:masters"]
    },
  ]

  aws_auth_accounts = [
    var.account_id,
  ]

  tags = local.tags
}

resource "aws_security_group" "additional" {
  name_prefix = "${local.name}-additional"
  vpc_id      = var.vpc_id

  # Allows all inbound traffic; narrow cidr_blocks for anything beyond a test setup
  ingress {
    from_port        = 0
    to_port          = 0
    protocol         = "-1"
    cidr_blocks      = ["0.0.0.0/0"]
    ipv6_cidr_blocks = ["::/0"]
  }

  egress {
    from_port        = 0
    to_port          = 0
    protocol         = "-1"
    cidr_blocks      = ["0.0.0.0/0"]
    ipv6_cidr_blocks = ["::/0"]
  }

  tags = local.tags
}
```
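Setting ‘manage_aws_auth_configmap = true’ makes Terraform edit the ‘aws-auth’ ConfigMap, and the Helm releases later in this step install charts into the cluster. Both require Terraform's kubernetes and helm providers to authenticate against the new cluster. The following sketch uses the pattern commonly shown for version 18 of the ‘eks’ module. It assumes the AWS CLI is installed on the machine running Terraform.

```hcl
# Authenticate the kubernetes and helm providers against the EKS cluster.
# Assumes the AWS CLI is available where Terraform runs.
provider "kubernetes" {
  host                   = module.eks.cluster_endpoint
  cluster_ca_certificate = base64decode(module.eks.cluster_certificate_authority_data)

  exec {
    api_version = "client.authentication.k8s.io/v1beta1"
    command     = "aws"
    # In v18 of the eks module, cluster_id holds the cluster name
    args = ["eks", "get-token", "--cluster-name", module.eks.cluster_id]
  }
}

provider "helm" {
  kubernetes {
    host                   = module.eks.cluster_endpoint
    cluster_ca_certificate = base64decode(module.eks.cluster_certificate_authority_data)

    exec {
      api_version = "client.authentication.k8s.io/v1beta1"
      command     = "aws"
      args        = ["eks", "get-token", "--cluster-name", module.eks.cluster_id]
    }
  }
}
```

The ‘exec’ block fetches a short-lived token on each run, so no static cluster token needs to be stored in the Terraform state.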

The EKS cluster and Chainlink nodes also rely on several supporting services that are deployed alongside them. The AWS Load Balancer Controller watches Kubernetes Ingress resources and creates load balancers and listener rules for them. Amazon Elastic File System (EFS) stores the persistent data of Prometheus and other pods. EFS was chosen over Amazon Elastic Block Store (EBS) because an EBS volume is tied to a single Availability Zone (AZ), whereas an EFS file system is highly available and can be used from multiple AZs. Lastly, ExternalDNS automatically creates Amazon Route 53 records.

These services are available as Helm charts. The charts are declared in the Terraform configuration and are deployed automatically when it is applied.
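The article does not show these releases. The sketch below is one plausible way to declare them with Terraform's helm provider, and several parts of it are assumptions. The IAM role ARN variables (for IAM roles for service accounts) and ‘var.efs_file_system_id’ are hypothetical inputs, and the IAM roles and EFS file system themselves are not shown. All other chart values are left at their defaults, so check each chart's values file for the settings a given setup needs. The StorageClass is named ‘efs-sc’ to match the one referenced by the Prometheus release later in this article.

```hcl
# Hypothetical Helm releases for the cluster add-ons described above.
# Role ARNs and the EFS file system ID are assumed inputs.
resource "helm_release" "aws_load_balancer_controller" {
  name       = "aws-load-balancer-controller"
  repository = "https://aws.github.io/eks-charts"
  chart      = "aws-load-balancer-controller"
  namespace  = "kube-system"

  set {
    name  = "clusterName"
    value = module.eks.cluster_id
  }
  set {
    # Dots inside the annotation key must be escaped for Helm
    name  = "serviceAccount.annotations.eks\\.amazonaws\\.com/role-arn"
    value = var.lb_controller_role_arn
  }
}

resource "helm_release" "aws_efs_csi_driver" {
  name       = "aws-efs-csi-driver"
  repository = "https://kubernetes-sigs.github.io/aws-efs-csi-driver"
  chart      = "aws-efs-csi-driver"
  namespace  = "kube-system"

  set {
    name  = "controller.serviceAccount.annotations.eks\\.amazonaws\\.com/role-arn"
    value = var.efs_csi_role_arn
  }
}

resource "helm_release" "external_dns" {
  name       = "external-dns"
  repository = "https://kubernetes-sigs.github.io/external-dns"
  chart      = "external-dns"
  namespace  = "kube-system"

  set {
    name  = "serviceAccount.annotations.eks\\.amazonaws\\.com/role-arn"
    value = var.external_dns_role_arn
  }
}

# StorageClass backed by EFS, named to match the Prometheus release
resource "kubernetes_storage_class" "efs" {
  metadata {
    name = "efs-sc"
  }
  storage_provisioner = "efs.csi.aws.com"
  parameters = {
    provisioningMode = "efs-ap" # dynamic provisioning through EFS access points
    fileSystemId     = var.efs_file_system_id
    directoryPerms   = "700"
  }
}
```

The charts are not version-pinned in this sketch. Adding a ‘version’ argument to each release, as the article does for Prometheus and Grafana, keeps upgrades deliberate.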

Deploying the Chainlink Node

TrackIt created its own Helm chart for Chainlink nodes to deploy the Kubernetes resources they need to run successfully:

- StatefulSet, to deploy the Chainlink pods
- Service, to make Chainlink available inside the cluster
- Ingress, to make Chainlink available outside the cluster
- PodDisruptionBudget, to keep an appropriate minimum number of pods available
- Job, to facilitate pod replication for high availability
- Secrets, to store the database credentials used in the Chainlink environment

The variables and parameters utilized in the custom Helm Chart for Chainlink nodes are declared in this GitHub file.

```hcl
resource "helm_release" "chainlink" {
  chart         = "${path.module}/../../../helm/chainlink"
  name          = "chainlink"
  version       = "0.1.27"
  recreate_pods = true
  force_update  = true

  set {
    name  = "config.eth_url"
    value = var.eth_url
  }
  set {
    name  = "config.chainlink_dev"
    value = var.env == "dev" ? "true" : "false"
  }
  # set_sensitive keeps the database URL (which embeds the password) out of plan output
  set_sensitive {
    name  = "config.db_url"
    value = "postgres://chainlink:${module.rds.cluster_master_password}@${module.rds.cluster_endpoint}/${var.name}?sslmode=disable"
  }
  set {
    name  = "ingress.host"
    value = var.chainlink_domain_name
  }
  set {
    name  = "config.p2p_bootstrap_peers"
    value = var.p2p_bootstrap_peers
  }
  set {
    name  = "replicaCount"
    value = 2
  }
  set {
    name  = "ingress.acm_certificate_arn"
    value = var.chainlink_acm_certificate_arn
  }

  # The API and wallet password files must exist before the pods start
  depends_on = [
    kubernetes_secret.api_secrets
  ]
}
```
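The release above reads several input variables that the article does not declare. The following is a sketch of those declarations. The descriptions and types are assumptions. Marking ‘eth_url’ as sensitive is a suggestion, since URLs from Ethereum node providers often embed an access key.

```hcl
# Hypothetical declarations for the Chainlink release inputs.
variable "eth_url" {
  description = "Ethereum node WebSocket URL used by the Chainlink node"
  type        = string
  sensitive   = true # provider URLs often embed an API key
}

variable "chainlink_domain_name" {
  description = "Host name served by the Chainlink Ingress"
  type        = string
}

variable "chainlink_acm_certificate_arn" {
  description = "ACM certificate ARN used by the Chainlink Ingress load balancer"
  type        = string
}

variable "p2p_bootstrap_peers" {
  description = "P2P bootstrap peers passed to the Chainlink node"
  type        = string
}

variable "user_email" {
  description = "Email address for the Chainlink node API login"
  type        = string
}
```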

Terraform also creates a Kubernetes Secret that securely stores two files, .api and .password. The .api file contains the node's administrative (API login) credentials, and the .password file contains the wallet password. The Secret is mounted as a volume in the Chainlink pod. The secret values themselves are stored encrypted with SOPS (Secrets OPerationS) and decrypted by Terraform when it is applied. Readers who want to set up SOPS before continuing with the rest of Step #3 can follow ‘Step #4: Encrypting and Decrypting Secrets Using SOPS and Terraform’.

```hcl
# Decrypt the SOPS-encrypted secrets file for the current environment
data "sops_file" "secrets" {
  source_file = "${path.module}/secrets/${var.env}/encrypted-secrets.yaml"
}

resource "kubernetes_secret" "api_secrets" {
  metadata {
    name = "api-secrets"
  }

  data = {
    # Wallet password file
    ".password" = templatefile(
      "${path.module}/secrets/password.tpl",
      {
        wallet-password = sensitive(data.sops_file.secrets.data["wallet_password"])
      }
    )
    # API credentials file (email and password)
    ".api" = templatefile(
      "${path.module}/secrets/api.tpl",
      {
        email    = var.user_email
        password = sensitive(data.sops_file.secrets.data["user_password"])
      }
    )
  }
}
```

The TPL files for Secrets can be found here.
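The linked TPL files are not reproduced here. For reference, a Chainlink node expects the API credentials file to contain the login email on the first line and the password on the second. The password file contains only the wallet password. The sketch below renders equivalent strings inline, without separate template files. The local value names are hypothetical.

```hcl
# Hypothetical inline equivalent of api.tpl and password.tpl.
locals {
  # .api: email on the first line, API password on the second
  api_file = "${var.user_email}\n${data.sops_file.secrets.data["user_password"]}"

  # .password: wallet password only
  password_file = data.sops_file.secrets.data["wallet_password"]
}
```

‘local.api_file’ and ‘local.password_file’ could then be assigned to the .api and .password keys of the ‘kubernetes_secret’ resource in place of the ‘templatefile’ calls. Because the SOPS data is sensitive, Terraform also treats these locals as sensitive.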

Deploying Adapters

In the Chainlink ecosystem, an adapter is an external service that a Chainlink node operator runs to obtain off-chain information from an API and report it to a node. Operators can choose from numerous APIs to acquire the same type of data that the other nodes are receiving, for example weather data.

TrackIt also developed a Helm chart to deploy Chainlink adapters. The chart is organized into multiple folders, one for each adapter, and includes a Deployment to run the adapter pod, a Secret to store the adapter's API key, and a Service to expose the adapter inside the cluster.

```hcl
resource "helm_release" "adapters" {
  chart   = "${path.module}/../../../helm/adapters"
  name    = "adapters"
  version = "0.1.64"

  # SOPS provides the adapter API keys, which are passed to the chart as values
  set_sensitive {
    name  = "nomics.config.api_key"
    value = data.sops_file.secrets.data["nomics_api_key"]
  }
  set_sensitive {
    name  = "tiingo.config.api_key"
    value = data.sops_file.secrets.data["tiingo_api_key"]
  }
  set_sensitive {
    name  = "cryptocompare.config.api_key"
    value = data.sops_file.secrets.data["cryptocompare_api_key"]
  }

  recreate_pods = true
  force_update  = true
}
```
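Each adapter is exposed through a Kubernetes Service, so the Chainlink node can reach it by its cluster DNS name. This is the kind of URL an operator would enter when registering the adapter as a bridge in the node. The sketch below builds those URLs in Terraform. It assumes Service names that match the adapter folder names, the ‘default’ namespace, and port 8080. These names are hypothetical and should be checked against the adapters chart.

```hcl
# Hypothetical: assumes each adapter Service is named after its folder,
# lives in the "default" namespace, and listens on port 8080.
locals {
  adapters = ["nomics", "tiingo", "cryptocompare"]

  adapter_urls = {
    for adapter in local.adapters :
    adapter => "http://${adapter}.default.svc.cluster.local:8080"
  }
}

output "adapter_bridge_urls" {
  description = "In-cluster adapter URLs to use when creating bridges in the Chainlink node"
  value       = local.adapter_urls
}
```

Under these assumptions, ‘local.adapter_urls["tiingo"]’ evaluates to ‘http://tiingo.default.svc.cluster.local:8080’.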

Deploying Monitoring Tools – Grafana and Prometheus

Pre-existing Helm Charts were used to deploy Prometheus and Grafana.

For Prometheus:

```hcl
resource "helm_release" "prometheus" {
  name       = "prometheus"
  repository = "https://prometheus-community.github.io/helm-charts"
  chart      = "prometheus"
  version    = "15.8.7"
  namespace  = "default"

  # Alertmanager and Pushgateway are not needed for this setup
  set {
    name  = "alertmanager.enabled"
    value = "false"
  }
  set {
    name  = "pushgateway.enabled"
    value = "false"
  }
  # Store Prometheus data on the EFS-backed storage class
  set {
    name  = "server.persistentVolume.storageClass"
    value = "efs-sc"
  }

  values = [
    file("${path.module}/prometheus/values.yaml")
  ]
}
```

Prometheus values can be found here.
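The linked values file is not reproduced here. One possible approach uses the chart's ‘extraScrapeConfigs’ value to add a scrape job for the Chainlink nodes. Chainlink nodes serve Prometheus metrics at /metrics on their API port, which is 6688 by default. The Service name ‘chainlink’ and the ‘default’ namespace are assumptions based on the Chainlink release earlier in this article. The resulting string would be appended to the ‘values’ list of the ‘prometheus’ release.

```hcl
# Hypothetical scrape job for the Chainlink nodes; assumes a Service named
# "chainlink" in the "default" namespace exposing port 6688.
locals {
  prometheus_extra_values = yamlencode({
    # extraScrapeConfigs must be a string in the prometheus chart, hence the inner yamlencode
    extraScrapeConfigs = yamlencode([
      {
        job_name     = "chainlink"
        metrics_path = "/metrics"
        static_configs = [
          { targets = ["chainlink.default.svc.cluster.local:6688"] }
        ]
      }
    ])
  })
}
```

A static Service target reaches only one pod per scrape. To scrape each replica separately, the pods could instead carry the ‘prometheus.io/scrape’ annotations that the chart's default pod discovery job looks for.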

For Grafana:

```hcl
resource "helm_release" "grafana" {
  name       = "grafana"
  repository = "https://grafana.github.io/helm-charts"
  chart      = "grafana"
  version    = "6.29.4"
  namespace  = "default"

  # Helm list syntax: {host1,host2}
  set {
    name  = "ingress.hosts"
    value = "{${var.grafana_domain_name}}"
  }

  values = [
    templatefile(
      "${path.module}/grafana/values.yaml",
      {
        chainlink-ocr       = indent(8, file("${path.module}/grafana/dashboards/chainlink-ocr.json"))
        external-adapter    = indent(8, file("${path.module}/grafana/dashboards/external-adapter.json"))
        acm-certificate-arn = var.grafana_acm_certificate_arn
      }
    )
  ]
}
```

Grafana dashboards can be found here.

Grafana values can be found here.

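As shown in the snippet above, ‘templatefile’ is used to inject two custom Chainlink dashboards (chainlink-ocr and external-adapter) and the AWS Certificate Manager (ACM) certificate ARN into the Grafana values file. The ‘indent’ function adds eight spaces to every line of each dashboard's JSON except the first, so that the JSON nests correctly inside the YAML values file.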

Step #4: Encrypting and Decrypting Secrets Using SOPS and Terraform

To encrypt and decrypt secrets, TrackIt used SOPS with an AWS KMS key. SOPS encrypts the values in a file while leaving its keys readable, so the encrypted credential files can be safely pushed to the repository.
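The article does not show how the KMS key used by SOPS is created. A minimal Terraform sketch follows, in which the resource and alias names are hypothetical. The rest of the stack reads the encrypted file when Terraform plans, so a key like this would typically be created beforehand, in a separate, earlier Terraform configuration or manually, before any secrets are encrypted.

```hcl
# Hypothetical KMS key dedicated to SOPS encryption.
resource "aws_kms_key" "sops" {
  description             = "SOPS secrets encryption key"
  deletion_window_in_days = 7
  enable_key_rotation     = true
  tags                    = local.tags
}

resource "aws_kms_alias" "sops" {
  name          = "alias/${var.name}-${var.env}-sops"
  target_key_id = aws_kms_key.sops.key_id
}

# ARN to export as SOPS_KMS_ARN before running sops
output "sops_kms_arn" {
  value = aws_kms_key.sops.arn
}
```

After applying, ‘terraform output -raw sops_kms_arn’ prints the ARN, which can then be exported as SOPS_KMS_ARN.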

Creating the Clear Secrets YAML File

clear-secrets.yaml

```yaml
user_password: 'password'
wallet_password: 'my_wallet_password'
nomics_api_key: 'xxxxxx'
cryptocompare_api_key: 'xxxxxx'
tiingo_api_key: 'xxxxxx'
```

Note: this file is listed in .gitignore and must not be pushed, since it contains secrets in clear text.

Using SOPS CLI to Encrypt Secrets

```shell
$ export SOPS_KMS_ARN=arn:aws:kms:$AWS_REGION:$ACCOUNT_ID:key/$KMS_KEY_ID
$ sops -e clear-secrets.yaml > ./tf/secrets/$ENV/encrypted-secrets.yaml
```

The encrypted secrets are written to ./tf/secrets/$ENV/encrypted-secrets.yaml, which is the file Terraform reads through the SOPS provider.

Using the Terraform SOPS Provider to Decrypt and Use Secret Keys

providers.tf

```hcl
terraform {
  required_providers {
    sops = {
      source  = "carlpett/sops"
      version = "~> 0.5"
    }
  }
}
```

Simple example using the Terraform SOPS provider

```hcl
resource "aws_secretsmanager_secret" "user_password" {
  name                    = "${var.name}-${var.env}-user-password"
  description             = "User password"
  recovery_window_in_days = 7
  kms_key_id              = var.kms_key_id
}

resource "aws_secretsmanager_secret_version" "user_password" {
  secret_id     = aws_secretsmanager_secret.user_password.id
  secret_string = sensitive(data.sops_file.secrets.data["user_password"])
}

data "sops_file" "secrets" {
  source_file = "${path.module}/secrets/${var.env}/encrypted-secrets.yaml"
}
```

Note: this snippet is only an example that demonstrates how SOPS-decrypted values can be used in Terraform.

Secrets Manager

Critical secrets such as the database password are stored in AWS Secrets Manager.

```hcl
resource "aws_secretsmanager_secret" "rds" {
  name                    = "${var.name}-${var.env}-psql-password"
  recovery_window_in_days = 0
  tags                    = local.tags
}

resource "aws_secretsmanager_secret_version" "password" {
  secret_id     = aws_secretsmanager_secret.rds.id
  secret_string = module.rds.cluster_master_password
}
```
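Another Terraform configuration, for example one that deploys only the Kubernetes workloads, can read these values back with the ‘aws_secretsmanager_secret_version’ data source instead of recomputing them from module outputs. The sketch below looks up the database URL secret created in Step #2 by name. The local value name is hypothetical.

```hcl
# Read the preformatted database URL stored in Step #2, looked up by secret name.
data "aws_secretsmanager_secret_version" "rds_url" {
  secret_id = "${var.name}-${var.env}-psql-url"
}

locals {
  # Keep the URL redacted in plan output
  chainlink_db_url = sensitive(data.aws_secretsmanager_secret_version.rds_url.secret_string)
}
```

‘local.chainlink_db_url’ could then be passed to the ‘config.db_url’ value of the Chainlink release. The URL would then be assembled in one place, the Step #2 secret.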

Conclusion

AWS has become the leading provider for customers who want to deploy their blockchain and ledger technology workloads on the cloud. The AWS cloud currently provides extensive support for all major blockchain protocols, including Sawtooth, DAML, Hyperledger, Ethereum, Corda, Blockstack, and more, through over 70 validated blockchain solutions from partners. This article provides readers with a resource to deploy secure, reliable, and scalable Chainlink environments on AWS.

About TrackIt

TrackIt is an international AWS cloud consulting, systems integration, and software development firm headquartered in Marina del Rey, CA.

We have built our reputation on helping media companies architect and implement cost-effective, reliable, and scalable Media & Entertainment workflows in the cloud. These include streaming and on-demand video solutions, media asset management, and archiving, incorporating the latest AI technology to build bespoke media solutions tailored to customer requirements.

Cloud-native software development is at the foundation of what we do. We specialize in Application Modernization, Containerization, Infrastructure as Code and event-driven serverless architectures by leveraging the latest AWS services. Along with our Managed Services offerings which provide 24/7 cloud infrastructure maintenance and support, we are able to provide complete solutions for the media industry.