Written by Maram Ayari, DevOps Engineer and Clarisse Eynard, Software Engineering Intern


What is “Prompt Engineering”?

Prompt engineering is the process of designing and optimizing the inputs, or “prompts,” given to artificial intelligence (AI) models to elicit specific, accurate, and relevant responses. The goal of prompt engineering is to improve the quality and relevance of the output that AI models generate for tasks such as language translation, text summarization, and content generation.

What is a Prompt?

A prompt is a set of natural language instructions or a question given to an AI model to generate a response. The prompt can be a simple sentence, a paragraph, or even a piece of code. The quality of the prompt has a significant impact on the quality of the output, as it determines what the AI model is asked to do and how it should do it.

The following graphic represents the six essential elements of prompt engineering:

- Task element serves as the core objective that the AI is asked to accomplish. It lays out clear instructions defining the purpose of the generated output, be it summarization, analysis, creation, or another specific task, enabling the AI to understand its role accurately.

- Context element acts as the foundation upon which the prompt is built, providing essential background information, setting, or pertinent circumstances relevant to the task. A well-defined context aids AI in understanding the scope and nuances of the prompt, resulting in more precise and contextually fitting responses.

- Example element offers guiding instances, illustrations, or references relevant to the task. These examples assist AI in comprehending the desired output by providing tangible models that align with the expected outcome, enhancing the clarity of instructions.

- Persona describes the intended audience or user persona for whom the output is written. Defining the persona helps tailor the language and style of the generated content so that it resonates with specific demographics or readerships.

- Format outlines the structural specifications or layout expected in the generated response. It encompasses instructions regarding organization, presentation style, length, or other formatting requirements essential for achieving the desired output.

- Tone encapsulates the desired mood, attitude, or style expected in the generated content. It guides AI in infusing appropriate emotional tones, formality, or informality necessary to connect effectively with the intended audience.
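To make the six elements concrete, the following minimal Python sketch assembles a prompt from them. The helper name `build_prompt`, the section labels, and the sample values are illustrative assumptions rather than a required format; any consistent layout that states each element clearly serves the same purpose.

```python
def build_prompt(task, context="", examples=None, persona="", output_format="", tone=""):
    """Assemble a prompt string from the six elements described above.

    Hypothetical helper: empty elements are skipped so the prompt only
    contains the parts that were actually provided.
    """
    sections = [
        ("Context", context),
        ("Task", task),
        ("Audience", persona),  # the Persona element: who the output is for
        ("Format", output_format),
        ("Tone", tone),
    ]
    lines = [f"{label}: {text}" for label, text in sections if text]
    # Example element: listed one per line after the other elements
    if examples:
        lines.append("Examples:")
        lines.extend(f"- {example}" for example in examples)
    return "\n".join(lines)


prompt = build_prompt(
    task="Summarize the release notes in three bullet points.",
    context="The release notes describe version 2.0 of an internal video-transcoding tool.",
    examples=["Added support for HDR input files."],
    persona="Non-technical project managers.",
    output_format="Exactly three bullet points, each under 20 words.",
    tone="Neutral and concise.",
)
print(prompt)
```

Keeping each element on its own labeled line makes it easy to spot which element is missing or vague when a response falls short.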

Why is Prompt Engineering Required?

Prompt engineering helps bridge the gap between the AI’s capabilities and the user’s needs. By carefully crafting prompts, users can guide an AI model to produce more accurate, useful, and contextually appropriate responses, enhancing the overall user experience.

Key Benefits of Prompt Engineering

- Improved Model Performance: By crafting optimized prompts, users can improve the performance of language models, enabling them to produce more accurate, relevant, and informative responses.

- Increased Efficiency: Prompt engineering can reduce the need for manual post-processing and editing as well as minimize the risk of errors and misinterpretations.

- Enhanced User Experience: By providing clear and concise prompts, prompt engineering makes it easier for users to interact with language models and receive desired outputs.

- Increased Flexibility: Prompt engineering allows users to adapt language models to various tasks, domains, and applications, making them more versatile and useful.

Prompt Engineering Best Practices

- Be Clear & Direct: Provide clear instructions and context to guide the AI model’s responses.

- Use Examples: Include examples in the prompts to illustrate the desired output format or style.

- Give the AI a Role: Prime the AI to inhabit a specific role (like that of an expert) to increase performance for a specific use case.

- Use XML Tags (1): Incorporate XML tags to structure prompts and responses for greater clarity.

- Chain Prompts: Divide complex tasks into smaller, manageable steps for better results.

- Encourage Step-by-Step Thinking: Encourage the AI to think step-by-step to improve the quality of its output.

- Prefill Responses: Start the AI’s response with a few words to guide its output in the desired direction.

- Control Output Format: Specify the desired output format to ensure consistency and readability.

- Ask for Rewrites: Request revisions based on a rubric to get the AI to iterate and improve its output.

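- Optimize for Long Context Windows: Structure prompts to take advantage of the AI’s ability to handle long context windows, for example by placing long documents near the top of the prompt, ahead of the instructions and the question.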

- Use a Metaprompt (2): Utilize a metaprompt to prompt the AI to create a prompt based on specific guidelines. This can be helpful for drafting an initial prompt or quickly creating many prompt variations for testing.

(1) ⇒ An XML tag is a fundamental component of the Extensible Markup Language (XML) used to define the structure of data. Tags are written inside angle brackets < >: an opening tag (<tag>) marks the start of an element and a closing tag (</tag>) marks its end. Tags can also contain attributes that provide additional information about the element.

(2) ⇒ A metaprompt is a higher-level prompt used to guide the creation of prompts. It provides a template or guidelines for generating specific prompts tailored to particular tasks or objectives. Metaprompts help streamline the prompt engineering process, allowing for efficient creation and testing of multiple prompt variations.
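Several of these practices can be combined in a single request. The sketch below targets the Anthropic Claude Messages API format used in the demonstration later in this article: it gives the model a role through the `system` field, wraps the input document and question in XML tags, and prefills the assistant turn with `{` so the reply continues as a JSON object. The helper name `build_request`, the `<document>` and `<question>` tag names, and the sample text are illustrative assumptions; Claude does not require any particular tag names.

```python
import json


def build_request(document, question, prefill="{"):
    """Build a Claude Messages API request body (hypothetical helper).

    - The system prompt gives the model a role.
    - XML tags separate the document from the instructions.
    - The final assistant message prefills the reply, so the model
      continues from that text instead of starting from scratch.
    """
    user_text = (
        f"<document>\n{document}\n</document>\n\n"
        "Answer the question in <question> tags using only the document above. "
        'Respond with a JSON object containing an "answer" key.\n'
        f"<question>{question}</question>"
    )
    return {
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": 500,
        "system": "You are a meticulous technical support engineer.",
        "messages": [
            {"role": "user", "content": user_text},
            {"role": "assistant", "content": prefill},
        ],
    }


body = build_request(
    "The service restarts nightly at 02:00 UTC.",
    "When does the service restart?",
)
print(json.dumps(body, indent=2))
```

The model’s reply continues from the prefilled `{` and does not repeat it, so the application needs to prepend `{` to the returned text before parsing it as JSON.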

What is Amazon Bedrock?

Amazon Bedrock is a fully managed service from Amazon Web Services (AWS) that provides access to foundation models from Amazon and other leading AI companies through a single API, along with the infrastructure to build and deploy generative AI applications. It aims to simplify the process of working with AI and machine learning technologies.

Key Features

Amazon Bedrock offers access to a range of pre-trained machine learning models known as Foundation Models (FMs). These models enable developers to start using AI without the need to build and train models from scratch. This is particularly beneficial for users without extensive expertise in machine learning.

Bedrock also provides scalable and reliable infrastructure which is essential for handling the computational demands of training and deploying machine learning models. This infrastructure ensures that applications can grow and adapt as needed without running into performance bottlenecks.

Prompt Engineering Approaches

The best prompt engineering approach for a specific use case depends on the task and the data. Common tasks supported by LLMs on Amazon Bedrock include:

- Classification: Classification tasks involve categorizing input data into predefined classes or categories. For instance, sentiment analysis is a common classification task where the model determines the sentiment of a text, such as whether it’s positive, negative, or neutral. Another example is email spam detection, where emails are classified as either spam or not spam.

- Question-answer, without context: The model must answer the question with its internal knowledge without any context or document.

- Question-answer, with context: The user provides an input text with a question, and the model must answer the question based on information provided within the input text.

- Summarization: The prompt is a passage of text, and the model must respond with a shorter passage that captures the main points of the input.

- Open-ended text generation: The model must respond with a passage of original text that matches the description provided in the prompt. This also includes the generation of creative text such as stories, poems, or movie scripts.

- Code generation: The model must generate code based on user specifications. For example, a prompt might request text-to-SQL or Python code generation.

- Mathematics: The input describes a problem that requires mathematical reasoning at some level, which could involve numerical, geometric, or other forms of mathematical reasoning.

- Logical deductions: The model must make a series of logical deductions based on the information available in the prompt and any additional information provided.

- Entity extraction: The model must identify and categorize entities, such as names, dates, or locations, within the input text, optionally guided by a provided question.

- Chain-of-thought reasoning: The model must provide step-by-step reasoning detailing how an answer is derived from the initial prompt.
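As an example of the classification task, the sketch below builds a few-shot sentiment prompt and validates the model’s one-word answer against the allowed labels. The label set, the sample reviews, and the function names are hypothetical; the resulting prompt string would be sent as the text content of a Messages API request like the one shown in the demonstration.

```python
LABELS = ("positive", "negative", "neutral")

# A few labeled examples (few-shot prompting) that show the expected answer format
FEW_SHOT_EXAMPLES = [
    ("The delivery was fast and the product works perfectly.", "positive"),
    ("The app crashes every time I open it.", "negative"),
    ("The package arrived on Tuesday.", "neutral"),
]


def build_classification_prompt(text):
    """Build a few-shot sentiment classification prompt (hypothetical template)."""
    lines = [
        "Classify the sentiment of the review as one of: " + ", ".join(LABELS) + ".",
        "Answer with the label only.",
        "",
    ]
    for example, label in FEW_SHOT_EXAMPLES:
        lines.append(f"<review>{example}</review>")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    # The review to classify comes last, with the answer left open
    lines.append(f"<review>{text}</review>")
    lines.append("Sentiment:")
    return "\n".join(lines)


def parse_label(model_output):
    """Normalize the model's reply and reject anything outside the label set."""
    label = model_output.strip().strip(".").lower()
    if label not in LABELS:
        raise ValueError(f"Unexpected label: {model_output!r}")
    return label


print(build_classification_prompt("Great value, but shipping took two weeks."))
print(parse_label(" Positive. "))
```

Validating the answer against a fixed label set catches replies where the model adds an explanation instead of returning the label alone.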

Benefits

The primary benefit of Amazon Bedrock is its ease of use. By abstracting much of the complexity involved in machine learning, it provides a user-friendly interface and tools that accelerate the development process. This makes it accessible to a broader audience, including those who may not have deep technical expertise in AI.

Cost efficiency is another significant advantage. By utilizing managed services and pre-trained models, organizations can reduce the time and resources needed to deploy AI solutions, leading to substantial cost savings. Bedrock also fosters innovation. With easy access to powerful AI tools, businesses can experiment and innovate more quickly, bringing new AI-driven products and services to market faster.

Demonstration

The following demo will show readers how to create an image analysis solution using an Amazon Bedrock model.

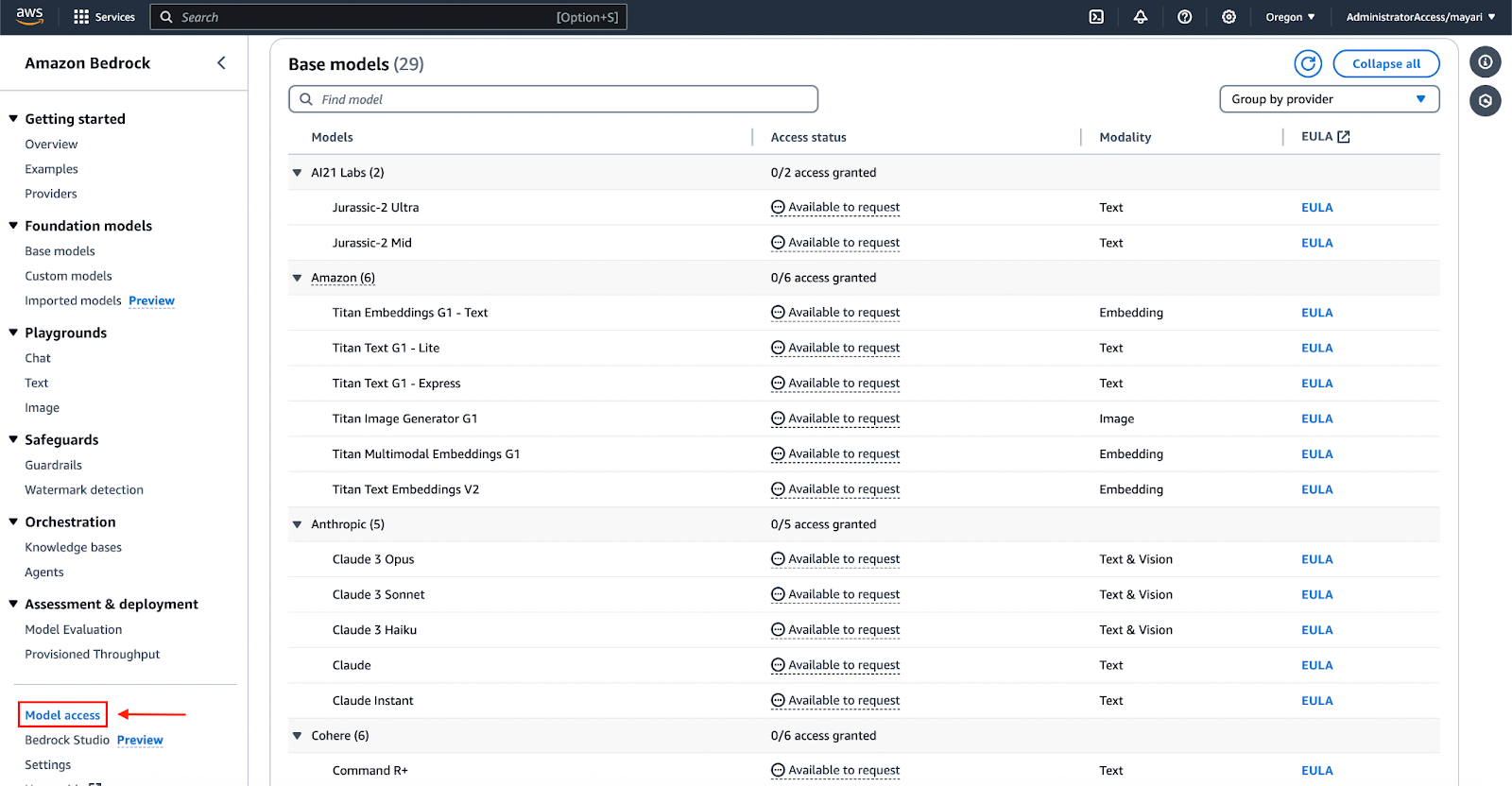

1. Model Access

Access to Amazon Bedrock Foundation models is not granted by default. To gain access to a Foundation Model (FM), an IAM user with sufficient privileges must request access to the model through the console. Once access to a model is granted, it is available to all users in the account.

To manage model access, select Model Access at the bottom of the left navigation pane in the Amazon Bedrock Management Console. On the Model Access page, you can view a list of available models, the output modality of the model, whether you have been granted access to the model, and the End User License Agreement (EULA).
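The model list can also be queried programmatically. The sketch below uses the Bedrock control-plane client (`bedrock`, not `bedrock-runtime`) and its `list_foundation_models` call, then keeps only models that accept image input and return text, which is what the image analysis demo needs. As far as we know, this call lists the models offered in a region and does not by itself confirm that access has been granted, so the Model Access page remains the place to check that. The function names and the default region are assumptions for illustration.

```python
def image_capable_models(model_summaries):
    """Return IDs of models that accept image input and produce text.

    `model_summaries` is the "modelSummaries" list returned by the
    Bedrock ListFoundationModels API.
    """
    return [
        summary["modelId"]
        for summary in model_summaries
        if "IMAGE" in summary.get("inputModalities", [])
        and "TEXT" in summary.get("outputModalities", [])
    ]


def list_image_capable_models(region_name="us-west-2", profile_name=None):
    """Query Bedrock for models that can analyze images (hypothetical helper)."""
    # Imported here so the filter above can be reused without AWS access
    import boto3

    session = boto3.Session(profile_name=profile_name)
    bedrock = session.client("bedrock", region_name=region_name)
    response = bedrock.list_foundation_models(byOutputModality="TEXT")
    return image_capable_models(response["modelSummaries"])


# Example call (requires AWS credentials):
# print(list_image_capable_models(profile_name="<AWS_CLI_PROFILE_NAME>"))
```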

2. Choose Model

The Claude 3 Sonnet Foundation Model has been used in this example. Claude 3 Sonnet is part of the larger Claude 3 family, which also includes Claude 3 Opus and Claude 3 Haiku. Sonnet is designed to provide a middle ground in terms of performance and speed, making it an ideal choice for applications that require both efficiency and a high level of capability. Like its siblings, Sonnet is multimodal, i.e., capable of processing both text and image data.

Claude 3 Sonnet’s capabilities include nuanced content creation, accurate summarization, and answering complex scientific queries. The model demonstrates increased proficiency in non-English languages and coding tasks, supporting a wider range of use cases on a global scale.

This model was chosen for its key feature of offering vision capabilities that process images and provide text outputs. Claude 3 Sonnet excels at analyzing and comprehending various visual assets such as charts, graphs, technical diagrams, and reports. It achieves performance levels comparable to other top-tier models in image processing, all while maintaining a notable speed advantage.

3. Installing and Importing Required Libraries

Only boto3 needs to be installed; json and base64 are part of the Python standard library:

```shell
pip3 install boto3
```

```python
import boto3
import json
import base64
```

These libraries will be used for the following:

- boto3: To interact with AWS services

- json: To encode and decode JSON data to interact with APIs

- base64: To encode the text or binary data into base64 format and decode the base64 data into text or binary data

4. Initializing the required client objects

Invoking the model with an image through the Amazon Bedrock Runtime client is straightforward. First, a boto3 session is created from a named AWS CLI profile (for example, one configured for AWS SSO), and a Bedrock Runtime client is initialized:

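```python
profile_name = "<AWS_CLI_PROFILE_NAME>"  # replace with your AWS CLI (or SSO) profile name
session = boto3.Session(profile_name=profile_name)
runtime = session.client("bedrock-runtime", region_name="us-west-2")
```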

5. Prompt used

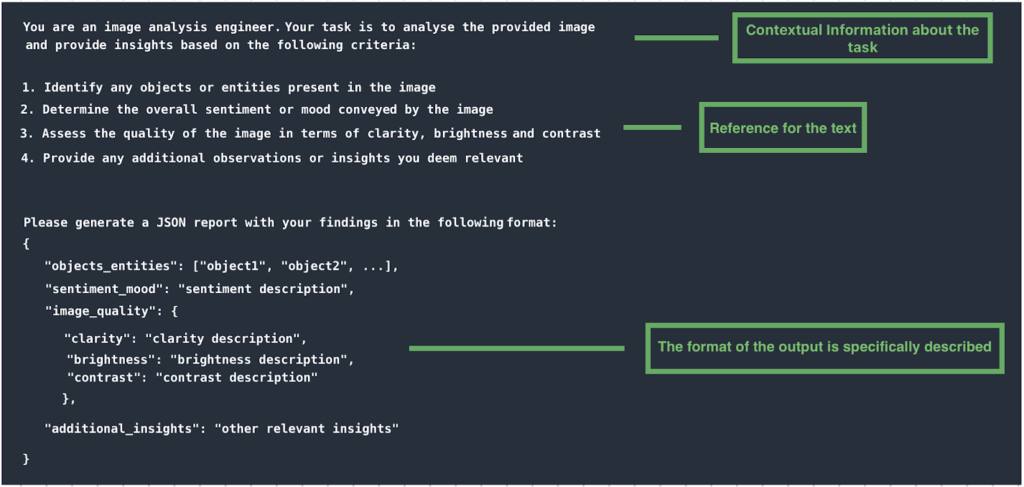

Below is the prompt template used for Claude 3 Sonnet. In prompt engineering, clarity is paramount and requires a blend of context and task instructions.

```python
PROMPT_TEMPLATE = """
You are an image analysis engineer. Your task is to analyze the provided image and provide insights based on the following criteria:

1. Identify any objects or entities present in the image.
2. Determine the overall sentiment or mood conveyed by the image.
3. Assess the quality of the image in terms of clarity, brightness, etc.
4. Provide any additional observations or insights you deem relevant.

Please generate a JSON report with your findings.

{
  "objects_entities": ["object1", "object2", …],
  "sentiment_mood": "sentiment description",
  "image_quality": {
    "clarity": "clarity description",
    "brightness": "brightness description",
    "contrast": "contrast description"
  },
  "additional_insights": "other relevant insights"
}
"""
```

6. Calling the Amazon Bedrock client to invoke the model API

Claude 3 Sonnet utilizes the Anthropic Claude Messages API for inputting inference parameters. Below is a sample JSON structure for supplying an image:

```python
{
    "anthropic_version": "bedrock-2023-05-31",
    "max_tokens": 1000,
    "messages": [
        {
            "role": "user",
            "content": [
                {
                    "type": "image",
                    "source": {
                        "type": "base64",
                        "media_type": "image/jpeg",
                        "data": encoded_image,
                    },
                },
                {
                    "type": "text",
                    "text": PROMPT_TEMPLATE,
                },
            ],
        }
    ],
}
```
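The same Messages API structure can carry more than one image in a single user turn. The sketch below is a hypothetical generalization of the request body above: it accepts a list of (media type, base64 data) pairs and places the image blocks before the text instructions, the same ordering used in this demo. The function name and the `max_tokens` default are assumptions, and the `<BASE64_IMAGE_…>` strings in the usage example are placeholders, not real image data.

```python
import json


def build_image_request(images, prompt, max_tokens=1000):
    """Build a Claude Messages API body for one or more base64-encoded images.

    `images` is a list of (media_type, base64_data) tuples; the text prompt
    is appended after the image blocks.
    """
    content = [
        {
            "type": "image",
            "source": {"type": "base64", "media_type": media_type, "data": data},
        }
        for media_type, data in images
    ]
    content.append({"type": "text", "text": prompt})
    # invoke_model expects the body as a JSON string
    return json.dumps(
        {
            "anthropic_version": "bedrock-2023-05-31",
            "max_tokens": max_tokens,
            "messages": [{"role": "user", "content": content}],
        }
    )


body = build_image_request(
    [("image/jpeg", "<BASE64_IMAGE_1>"), ("image/png", "<BASE64_IMAGE_2>")],
    "Compare the two images.",
)
```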

The following is the image that will be used in this example:

7. Analyze the image

To provide the image data, encode it using base64:

```python
IMAGE_PATH = "<IMAGE_PATH>"  # replace with the path to your image
with open(IMAGE_PATH, "rb") as image_file:
    image_bytes = image_file.read()
encoded_image = base64.b64encode(image_bytes).decode("utf-8")
```
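The request body in this demo hard-codes `"media_type": "image/jpeg"`, which has to match the actual file format. If the code is reused with other files, one option is to derive the media type from the file extension. The helpers below are hypothetical additions; they assume the four image formats accepted by Claude 3 models (JPEG, PNG, GIF, and WebP) and reject anything else rather than sending a mislabeled image.

```python
import base64
from pathlib import Path

# Image formats accepted by Claude 3 models, keyed by file extension
SUPPORTED_MEDIA_TYPES = {
    ".jpg": "image/jpeg",
    ".jpeg": "image/jpeg",
    ".png": "image/png",
    ".gif": "image/gif",
    ".webp": "image/webp",
}


def encode_image(image_path):
    """Return (media_type, base64_data) for an image file (hypothetical helper)."""
    suffix = Path(image_path).suffix.lower()
    if suffix not in SUPPORTED_MEDIA_TYPES:
        raise ValueError(f"Unsupported image format: {suffix or '(none)'}")
    image_bytes = Path(image_path).read_bytes()
    return SUPPORTED_MEDIA_TYPES[suffix], base64.b64encode(image_bytes).decode("utf-8")


def image_block(image_path):
    """Build the "image" content block used in the Messages API request."""
    media_type, data = encode_image(image_path)
    return {
        "type": "image",
        "source": {"type": "base64", "media_type": media_type, "data": data},
    }
```

Checking the extension is only a heuristic: a file named `.png` that actually contains JPEG data would still be mislabeled.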

8. Full example

```python
import boto3
import json
import base64

IMAGE_PATH = "<IMAGE_PATH>"  # replace with the path to your image
profile_name = "<AWS_CLI_PROFILE_NAME>"  # replace with your AWS CLI profile name

# Create a session using the specified profile
session = boto3.Session(profile_name=profile_name)
# Replace <AWS_REGION> with a region where you have model access, e.g. "us-west-2"
runtime = session.client("bedrock-runtime", region_name="<AWS_REGION>")

PROMPT_TEMPLATE = """
You are an image analysis engineer. Your task is to analyze the provided image and provide insights based on the following criteria:

1. Identify any objects or entities present in the image.
2. Determine the overall sentiment or mood conveyed by the image.
3. Assess the quality of the image in terms of clarity, brightness, etc.
4. Provide any additional observations or insights you deem relevant.

Please generate a JSON report with your findings.

{
  "objects_entities": ["object1", "object2", …],
  "sentiment_mood": "sentiment description",
  "image_quality": {
    "clarity": "clarity description",
    "brightness": "brightness description",
    "contrast": "contrast description"
  },
  "additional_insights": "other relevant insights"
}
"""

# Read the image file and encode it in base64
with open(IMAGE_PATH, "rb") as image_file:
    image_bytes = image_file.read()
encoded_image = base64.b64encode(image_bytes).decode("utf-8")

# Construct the body for the API request
body = json.dumps({
    "anthropic_version": "bedrock-2023-05-31",
    "max_tokens": 1000,
    "messages": [
        {
            "role": "user",
            "content": [
                {
                    "type": "image",
                    "source": {
                        "type": "base64",
                        "media_type": "image/jpeg",  # must match the image format
                        "data": encoded_image,
                    },
                },
                {
                    "type": "text",
                    "text": PROMPT_TEMPLATE,
                },
            ],
        }
    ],
})

# Invoke the model using the API
response = runtime.invoke_model(
    modelId="anthropic.claude-3-sonnet-20240229-v1:0",
    body=body,
)

# The response body is a stream: read it and parse the JSON it contains
response_body = json.loads(response.get("body").read())
# The generated text is in the first content block
print(response_body["content"][0]["text"])
```

9. Model output

The model’s response accurately describes the content of the image provided, demonstrating the power of Amazon Bedrock with Claude 3 Sonnet and Python.

Output:

```json
{
  "objects_entities": ["A young woman", "Red sweater"],
  "sentiment_mood": "The image conveys a joyful, cheerful, and positive mood. The woman is smiling widely, expressing happiness and delight.",
  "image_quality": {
    "clarity": "The image is clear and focused, with the subject in sharp detail.",
    "brightness": "The brightness levels are well-balanced, with good exposure.",
    "contrast": "The contrast is appropriate, with distinct tones separating the subject from the background."
  },
  "additional_insights": "The woman’s radiant smile and lively expression create an uplifting and energetic vibe. The slightly blurred background helps draw the viewer’s attention to the subject’s face and expression."
}
```
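Because the model returns its report as text, an application that consumes it should parse and check the report rather than assume the reply is valid JSON; a model may, for instance, add a sentence before or after the object. The sketch below extracts the outermost JSON object from the reply and verifies the top-level keys requested in `PROMPT_TEMPLATE`. The function name and the brace-based extraction are illustrative assumptions, not part of the Bedrock API.

```python
import json

# Top-level keys requested by PROMPT_TEMPLATE
EXPECTED_KEYS = {"objects_entities", "sentiment_mood", "image_quality", "additional_insights"}


def parse_report(model_text):
    """Parse the JSON report from the model's text reply (hypothetical helper)."""
    # Take everything from the first "{" to the last "}" so nested objects stay intact
    start = model_text.find("{")
    end = model_text.rfind("}")
    if start == -1 or end < start:
        raise ValueError("No JSON object found in the model output")
    report = json.loads(model_text[start : end + 1])
    missing = EXPECTED_KEYS - set(report)
    if missing:
        raise ValueError(f"Report is missing keys: {sorted(missing)}")
    return report


reply = (
    "Here is the report:\n"
    '{"objects_entities": ["A young woman", "Red sweater"], "sentiment_mood": "Joyful", '
    '"image_quality": {"clarity": "Clear"}, "additional_insights": "None"}'
)
report = parse_report(reply)
print(report["objects_entities"])
```

In the full example, this could be called as `report = parse_report(response_body["content"][0]["text"])`.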

Conclusion

This demonstration highlights the power of multimodal AI models to automatically generate rich descriptions of image content. Leveraging Amazon Bedrock, the Claude 3 Sonnet model, and a carefully designed prompt template, we obtained a structured report that accurately describes the objects, mood, and quality of the image.

About TrackIt

TrackIt is an international AWS cloud consulting, systems integration, and software development firm headquartered in Marina del Rey, CA.

We have built our reputation on helping media companies architect and implement cost-effective, reliable, and scalable Media & Entertainment workflows in the cloud. These include streaming and on-demand video solutions, media asset management, and archiving, incorporating the latest AI technology to build bespoke media solutions tailored to customer requirements.

Cloud-native software development is at the foundation of what we do. We specialize in Application Modernization, Containerization, Infrastructure as Code and event-driven serverless architectures by leveraging the latest AWS services. Along with our Managed Services offerings which provide 24/7 cloud infrastructure maintenance and support, we are able to provide complete solutions for the media industry.

About Maram Ayari

DevOps Engineer at TrackIt with almost a year of experience, Maram holds a master’s degree in software engineering and has deep expertise in a diverse range of key AWS services such as Amazon EKS, OpenSearch, and SageMaker.

Maram also has a background in both front-end and back-end development. Through her articles on medium.com, Maram shares insights, experiences, and advice about AWS services. She is also a beginner violinist.

About Clarisse Eynard

Currently in her 4th year at Epitech, Clarisse is an intern at TrackIt specializing in both frontend and backend development. Clarisse holds an AWS Cloud Practitioner certification.

Her interests lie in making user experiences easier through innovative frontend solutions and developing robust backend systems that support them.