Written by Maram Ayari, Software Engineer at TrackIt

Processing audio and visual media at scale requires advanced AI-driven solutions to extract meaningful insights efficiently. AWS provides a suite of services that enable automated transcription, speaker diarization, and on-screen text identification, streamlining media workflows. By automating these tasks, organizations can enhance accessibility, content indexing, and real-time analysis without manual intervention.

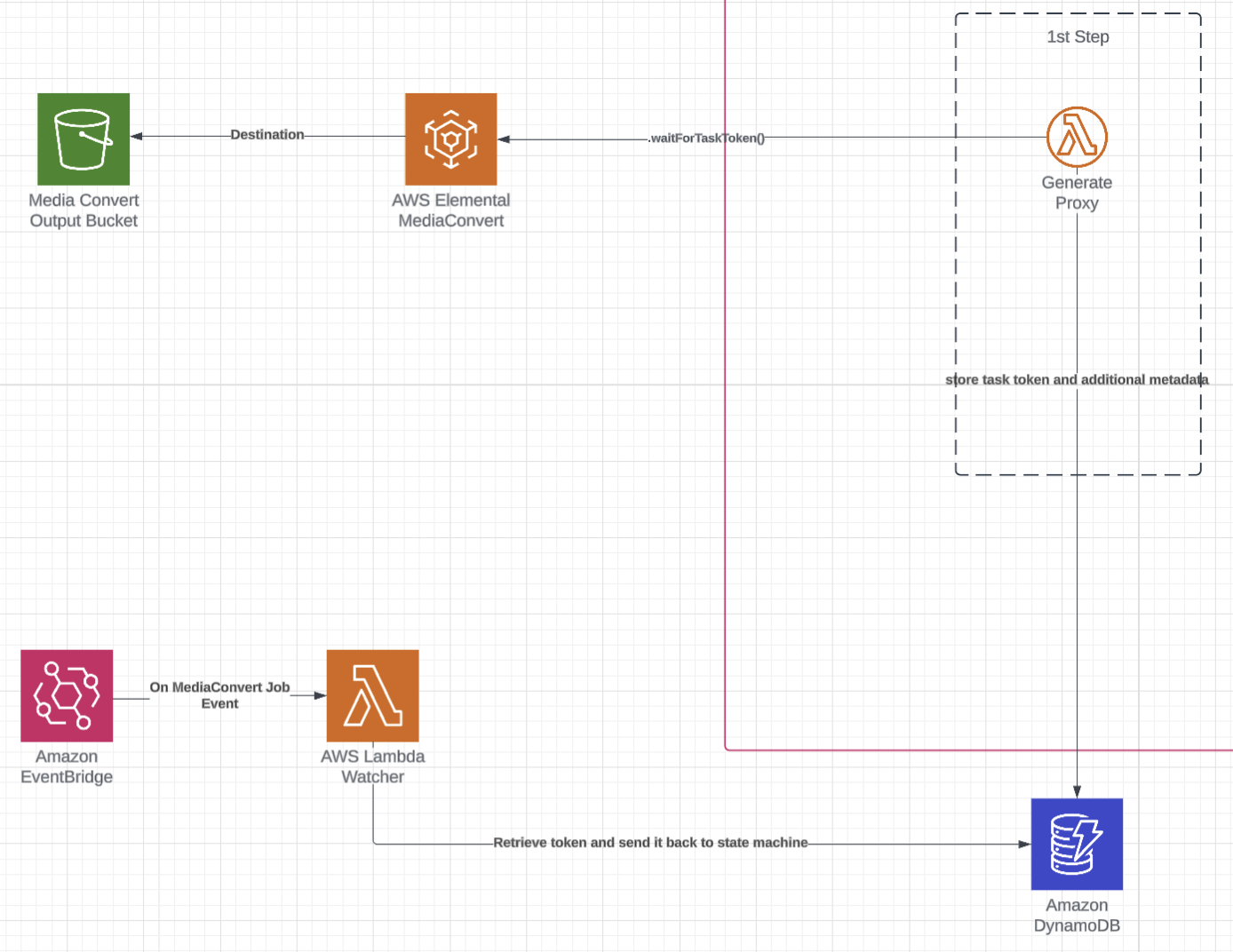

Input Preparation

The input preparation stage is a media pipeline that efficiently produces the inputs required by AI-powered features such as transcription and speaker diarization. It includes an AWS Lambda function for MediaConvert job creation that processes MP4 files: the function starts an AWS Elemental MediaConvert job that converts the ingested MP4 file into the required output formats and extracts the audio track as WAV for further analysis and processing.
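The job-creation step described above could look roughly like the following Python sketch of a Lambda handler. It assumes the function is triggered by an S3 `ObjectCreated` event. The role ARN, output prefix, environment variable names, and 16 kHz mono WAV settings are illustrative placeholders, not the pipeline's actual configuration. The MediaConvert client is injected so the logic can be exercised without AWS access.

```python
import os
from urllib.parse import unquote_plus

# Hypothetical placeholders; set real values through environment variables.
ROLE_ARN = os.environ.get("MEDIACONVERT_ROLE_ARN", "arn:aws:iam::123456789012:role/MediaConvertRole")
OUTPUT_PREFIX = os.environ.get("AUDIO_OUTPUT_PREFIX", "s3://example-output-bucket/audio/")


def build_wav_job_settings(input_uri, output_prefix):
    """Build MediaConvert job Settings with a single audio-only WAV output."""
    return {
        "Inputs": [{
            "FileInput": input_uri,
            # Select the default audio track of the MP4.
            "AudioSelectors": {"Audio Selector 1": {"DefaultSelection": "DEFAULT"}},
        }],
        "OutputGroups": [{
            "Name": "File Group",
            "OutputGroupSettings": {
                "Type": "FILE_GROUP_SETTINGS",
                "FileGroupSettings": {"Destination": output_prefix},
            },
            "Outputs": [{
                # Audio-only WAV output uses no container ("RAW").
                "ContainerSettings": {"Container": "RAW"},
                "Extension": "wav",
                "AudioDescriptions": [{
                    "AudioSourceName": "Audio Selector 1",
                    "CodecSettings": {
                        "Codec": "WAV",
                        # 16 kHz, 16-bit PCM matches the sample rate Whisper works at.
                        # Assumption: depending on the source channel layout,
                        # RemixSettings may be needed to downmix to mono.
                        "WavSettings": {"BitDepth": 16, "Channels": 1, "SampleRate": 16000},
                    },
                }],
            }],
        }],
    }


def handler(event, context, mediaconvert_client=None):
    """Start one MediaConvert job per uploaded .mp4 object in an S3 event."""
    if mediaconvert_client is None:
        import boto3  # imported lazily so tests can inject a stub client

        mediaconvert_client = boto3.client("mediaconvert")
    job_ids = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys in S3 event notifications are URL-encoded.
        key = unquote_plus(record["s3"]["object"]["key"])
        if not key.lower().endswith(".mp4"):
            continue  # ignore anything that is not an MP4 upload
        settings = build_wav_job_settings(f"s3://{bucket}/{key}", OUTPUT_PREFIX)
        response = mediaconvert_client.create_job(Role=ROLE_ARN, Settings=settings)
        job_ids.append(response["Job"]["Id"])
    return {"jobIds": job_ids}
```

Keeping the settings builder separate from the handler makes the job definition easy to inspect and test on its own.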

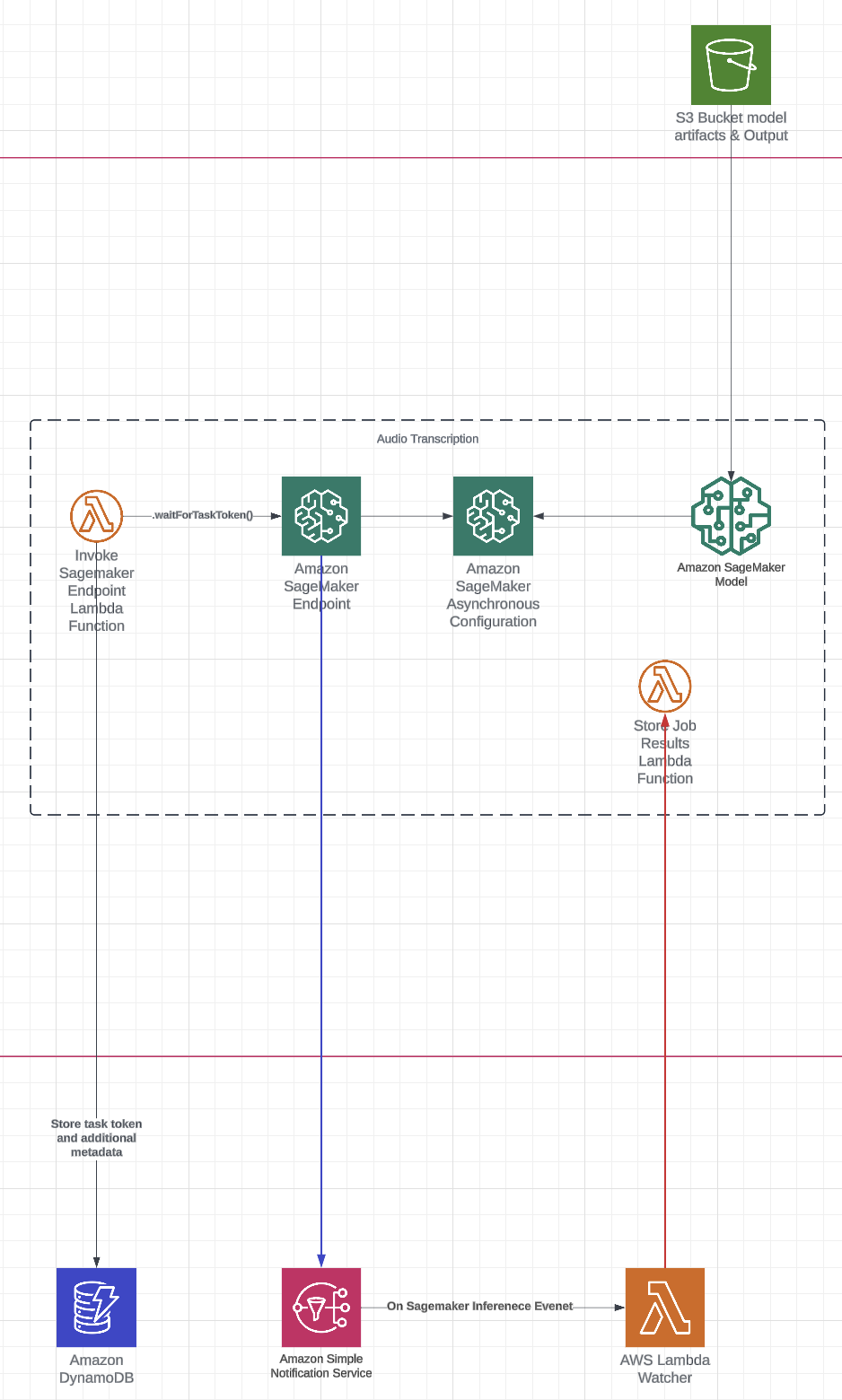

Audio Transcription

AWS services work together to provide an efficient and scalable audio transcription solution. At its core is Whisper, a speech recognition model designed for high-accuracy transcription across a variety of audio formats. The model is deployed and managed with Amazon SageMaker, a fully managed machine learning service, together with the following AWS components:

- SageMaker Model: The Whisper model, along with its inference code, is stored as artifacts in an Amazon S3 bucket. SageMaker references these artifacts during deployment to process transcription requests.

- SageMaker Endpoint Configuration: This configuration defines the parameters needed to deploy the model as a scalable endpoint. It includes settings for instance type, scaling options, and asynchronous processing, ensuring that large or long-duration audio files can be transcribed without interrupting other operations.

- SageMaker Endpoint: Acting as the interface for transcription requests, the SageMaker Endpoint processes audio input asynchronously. This setup handles high-volume or lengthy audio files efficiently, supporting payloads of up to 1 GB and processing times of up to one hour per request without blocking other tasks.

- Lambda Function: AWS Lambda coordinates the transcription workflow by triggering asynchronous inference, monitoring progress, and handling post-processing. For instance, it stores transcribed text in an Amazon DynamoDB table, making results easily accessible and seamlessly integrated with other services.
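The endpoint configuration and asynchronous invocation steps above might be sketched as follows, using the request shapes of the SageMaker `CreateEndpointConfig`, `CreateEndpoint`, and `InvokeEndpointAsync` APIs. The model name, endpoint name, S3 paths, `ml.g5.xlarge` instance type, and `audio/wav` content type are hypothetical; the content type in particular depends on how the inference code deserializes its input. Clients are passed in so the functions can be tested with stubs.

```python
def async_endpoint_config(config_name, model_name, output_s3_path, failure_s3_path,
                          instance_type="ml.g5.xlarge", max_concurrent_per_instance=1):
    """Request body for CreateEndpointConfig with asynchronous inference enabled."""
    return {
        "EndpointConfigName": config_name,
        "ProductionVariants": [{
            "VariantName": "AllTraffic",
            "ModelName": model_name,  # SageMaker Model that points at the S3 artifacts
            "InstanceType": instance_type,
            "InitialInstanceCount": 1,
        }],
        # AsyncInferenceConfig makes the endpoint asynchronous: results are
        # written to S3 instead of being returned in the HTTP response.
        "AsyncInferenceConfig": {
            "OutputConfig": {
                "S3OutputPath": output_s3_path,
                "S3FailurePath": failure_s3_path,
            },
            "ClientConfig": {
                "MaxConcurrentInvocationsPerInstance": max_concurrent_per_instance,
            },
        },
    }


def deploy_async_endpoint(sagemaker_client, endpoint_name, config):
    """Create the endpoint configuration, then the endpoint that uses it."""
    sagemaker_client.create_endpoint_config(**config)
    sagemaker_client.create_endpoint(
        EndpointName=endpoint_name,
        EndpointConfigName=config["EndpointConfigName"],
    )


def queue_transcription(runtime_client, endpoint_name, audio_s3_uri,
                        content_type="audio/wav"):
    """Queue one WAV file already in S3; returns (inference id, result S3 URI)."""
    response = runtime_client.invoke_endpoint_async(
        EndpointName=endpoint_name,
        InputLocation=audio_s3_uri,  # the payload is read from S3, not sent inline
        ContentType=content_type,    # assumption: what the inference code expects
        InvocationTimeoutSeconds=3600,
    )
    return response["InferenceId"], response["OutputLocation"]
```

In a Lambda function, `sagemaker_client` and `runtime_client` would be `boto3.client("sagemaker")` and `boto3.client("sagemaker-runtime")`. Rather than polling `OutputLocation`, the function can be notified of completion by adding a `NotificationConfig` with `SuccessTopic` and `ErrorTopic` SNS ARNs to `OutputConfig`.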

Example Output Transcription:

```json
[
  "[{\"text\": \" Hi Sanjay, I'm very much interested in learning about generative AI. We have been hearing about generative AI a lot. Can you help me understand what is generative AI? Yeah, sure. Generative AI refers to artificial intelligence model that can generate new content such as text, images, audio, or video based on the training data they have been learned from.\", \"start\": 0.042, \"end\": 25.338}, {\"text\": \" Okay. And can you give me an example of a generative AI model? Sure. One popular example is GPT-3, generative pre-trend transformer model, a language model developed by OpenAI that can generate human-like text on a wide range of topics. Okay. Okay. And I've heard about fine-tuning of models. What is it and why is it important?\", \"start\": 26.35, \"end\": 53.688}]",
  "application/json"
]
```
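The example output is doubly encoded: the segment list is serialized as a JSON string and wrapped in an array alongside its content type. A small Python sketch, assuming exactly that `[payload, content_type]` shape, shows how a post-processing step could decode it and, as one possible accessibility use, render SRT captions from the segment timestamps. The function names are illustrative.

```python
import json


def parse_transcription_output(raw):
    """Return [{'text', 'start', 'end'}, ...] from the doubly encoded result."""
    payload, content_type = json.loads(raw)
    if content_type != "application/json":
        raise ValueError(f"unexpected content type: {content_type!r}")
    # The segment list is itself a JSON string inside the outer array.
    segments = json.loads(payload) if isinstance(payload, str) else payload
    return [
        {"text": seg["text"].strip(), "start": float(seg["start"]), "end": float(seg["end"])}
        for seg in segments
    ]


def full_transcript(segments):
    """Join segment texts into a single transcript string."""
    return " ".join(seg["text"] for seg in segments)


def _srt_time(seconds):
    """Format seconds as an SRT timestamp, HH:MM:SS,mmm."""
    total_ms = int(round(seconds * 1000))
    hours, rest = divmod(total_ms, 3_600_000)
    minutes, rest = divmod(rest, 60_000)
    secs, ms = divmod(rest, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(segments):
    """Render segments as SRT cues separated by blank lines."""
    cues = [
        f"{i}\n{_srt_time(seg['start'])} --> {_srt_time(seg['end'])}\n{seg['text']}\n"
        for i, seg in enumerate(segments, start=1)
    ]
    return "\n".join(cues)
```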

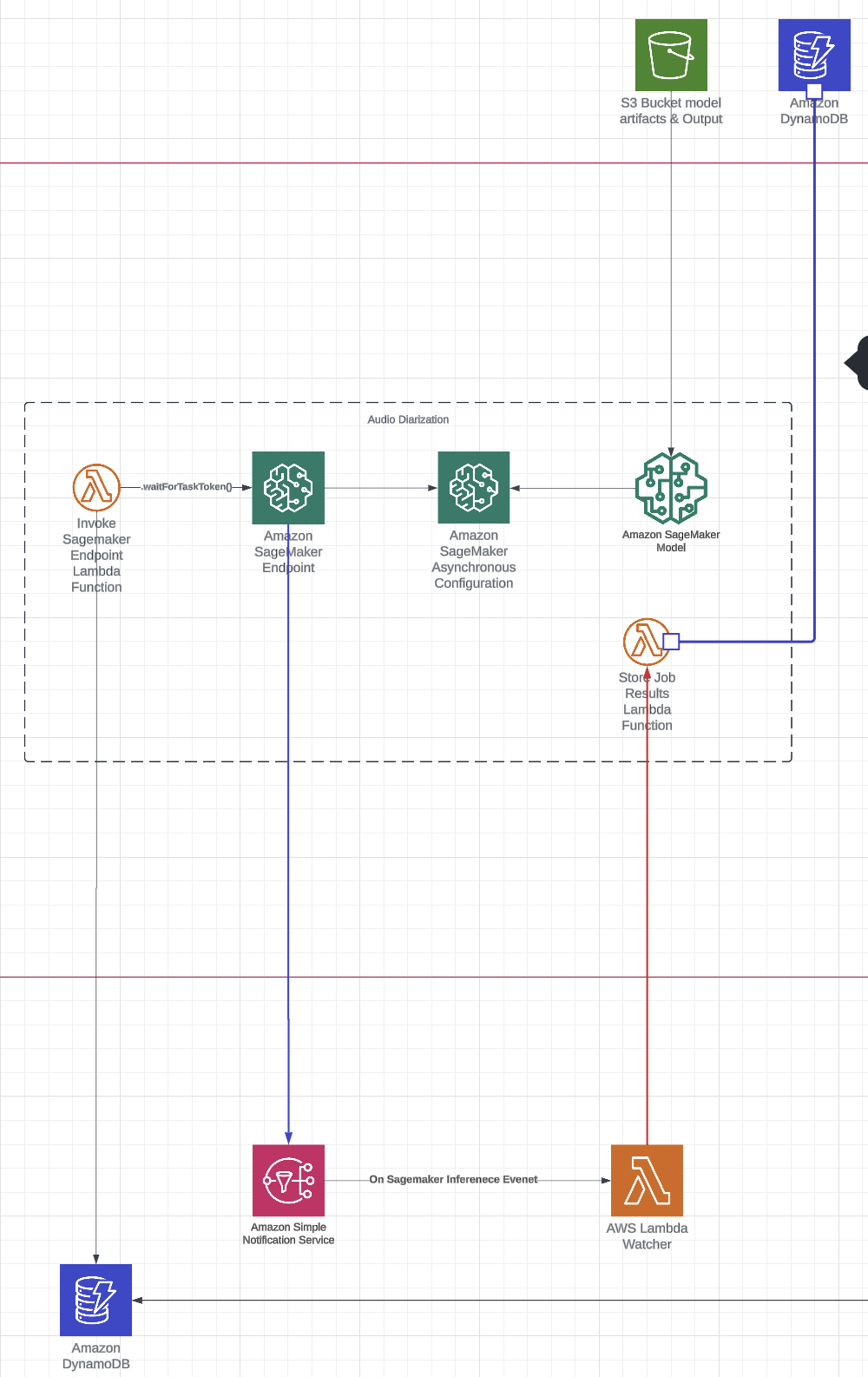

Audio Diarization

AWS services work together to enable accurate audio diarization: the audio is segmented by speaker, and each transcribed passage is attributed to a speaker label (e.g., SPEAKER_00, SPEAKER_01). At the core of this process is WhisperX, an extension of Whisper that integrates Pyannote, a speaker diarization model. Several AWS components handle deployment and processing:

- SageMaker Model: Like in the transcription workflow, the WhisperX model and its inference code are stored as artifacts in an S3 bucket. SageMaker references these artifacts during deployment to process transcription and diarization requests.

- SageMaker Endpoint Configuration: This configuration, similar to the transcription setup, defines the parameters for deploying the WhisperX model as a scalable endpoint. It includes settings for instance type, scaling options, and asynchronous processing to handle large or complex audio files without blocking other operations.

- SageMaker Endpoint: As in the transcription workflow, the SageMaker Endpoint processes audio asynchronously, handling large files (up to 1 GB) and long processing times (up to one hour per request) while supporting multiple concurrent requests.

- Lambda Function: Lambda coordinates the diarization workflow, triggering asynchronous inference, monitoring progress, and handling post-processing. In this case, it stores speaker-labeled transcriptions and segmented audio in a DynamoDB table, ensuring the diarization results are easily accessible for further analysis or downstream applications.
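One detail worth noting for the DynamoDB step: the boto3 DynamoDB resource does not accept Python `float` values, so segment timestamps have to be stored as `Decimal`. The sketch below assumes a diarization result shaped like the `segments` structure described in this section, a table whose partition key is a hypothetical `media_id` attribute, and one item per media file; the `table` argument stands in for a boto3 `Table` resource.

```python
import json
from decimal import Decimal


def to_dynamodb_item(media_id, diarization_result):
    """Convert a {'segments': [...]} diarization result into a DynamoDB item."""
    # The boto3 DynamoDB resource rejects Python floats, so round-trip the
    # segments through JSON and parse every float as a Decimal.
    segments = json.loads(json.dumps(diarization_result["segments"]), parse_float=Decimal)
    # Collect the distinct speaker labels; skip segments that carry none.
    speakers = sorted({seg["speaker"] for seg in segments if "speaker" in seg})
    return {"media_id": media_id, "segments": segments, "speakers": speakers}


def store_diarization(table, media_id, diarization_result):
    """Write the item with put_item and return it."""
    item = to_dynamodb_item(media_id, diarization_result)
    table.put_item(Item=item)
    return item
```

DynamoDB items are limited to 400 KB, so a long recording may need one item per segment, or the full result kept in S3 with only a pointer stored in the table.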

Example Output Diarized Transcription:

The output is a structured JSON format where each speech segment includes:

- Timestamps (start, end): Indicate when the speaker starts and stops talking.

- Text: The transcribed spoken content.

- Speaker Label: A generic label such as SPEAKER_00, SPEAKER_01, etc., assigned by the diarization model to differentiate voices.

```json
{
  "segments": [
    {"start": 0.202, "end": 5.225, "text": " Hi Sanjay, I'm very much interested in learning about generative AI.", "speaker": "SPEAKER_00"},
    {"start": 5.225, "end": 8.948, "text": "We have been hearing about generative AI a lot.", "speaker": "SPEAKER_00"},
    {"start": 8.948, "end": 12.35, "text": "Can you help me understand what is generative AI?", "speaker": "SPEAKER_00"},
    {"start": 12.35, "end": 13.591, "text": "Yeah, sure.", "speaker": "SPEAKER_01"},
    {"start": 13.591, "end": 25.258, "text": "Generative AI refers to artificial intelligence model that can generate new content such as text, images, audio, or video based on the training data they have been learned from.", "speaker": "SPEAKER_01"},
    {"start": 27.01, "end": 27.671, "text": " Okay.", "speaker": "SPEAKER_00"}
  ]
}
```
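As the example shows, several consecutive segments often come from the same speaker. The following hypothetical post-processing helper merges them into conversational turns and totals each speaker's talk time. The one-second `max_gap` threshold and the `UNKNOWN` fallback label are assumptions, not part of the original pipeline.

```python
def merge_turns(segments, max_gap=1.0):
    """Merge consecutive same-speaker segments separated by at most max_gap seconds."""
    turns = []
    for seg in segments:
        speaker = seg.get("speaker", "UNKNOWN")  # hypothetical fallback label
        text = seg["text"].strip()
        last = turns[-1] if turns else None
        if last and last["speaker"] == speaker and seg["start"] - last["end"] <= max_gap:
            # Same speaker continuing: extend the current turn.
            last["end"] = seg["end"]
            last["text"] += " " + text
        else:
            turns.append({"speaker": speaker, "start": seg["start"],
                          "end": seg["end"], "text": text})
    return turns


def talk_time(turns):
    """Total speaking time in seconds per speaker label."""
    totals = {}
    for turn in turns:
        totals[turn["speaker"]] = totals.get(turn["speaker"], 0.0) + turn["end"] - turn["start"]
    return totals
```

Applied to the example above, this yields three turns: SPEAKER_00 from 0.202 s to 12.35 s, SPEAKER_01 from 12.35 s to 25.258 s, and SPEAKER_00 again from 27.01 s to 27.671 s.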

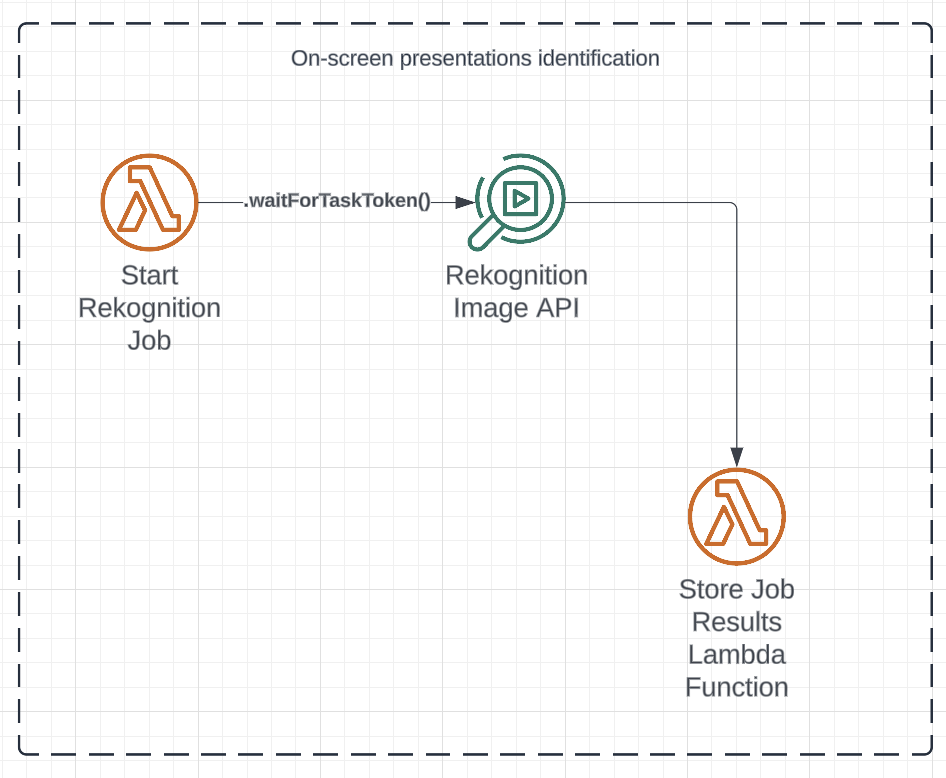

On-Screen Text Identification

AWS serverless services automate the identification and extraction of presentation content from visual media. The process unfolds as follows:

- Lambda Trigger for Rekognition Job: An AWS Lambda function initiates an Amazon Rekognition job, configuring it to analyze visual content and detect on-screen presentation elements such as slides.

- Rekognition Analysis: The Amazon Rekognition API processes the media using computer vision techniques to identify and extract relevant presentation details. This can include optical character recognition (OCR) to detect and read text within slides.

- Storing Results in DynamoDB: Once Rekognition completes the analysis, another Lambda function processes the extracted data and stores it in a DynamoDB table, ensuring structured storage and easy retrieval.
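Assuming the Rekognition job is a Rekognition Video text-detection job (`StartTextDetection`), the two Lambda functions above might be sketched as follows. The SNS topic, IAM role, 80% confidence threshold, and the slide-grouping logic, which collapses consecutive frames showing identical text, are illustrative assumptions rather than the original implementation. The client is injected so the code can run against a stub.

```python
def start_slide_text_detection(rekognition_client, bucket, key, sns_topic_arn, role_arn):
    """Start an asynchronous Rekognition Video text-detection job; returns its JobId."""
    response = rekognition_client.start_text_detection(
        Video={"S3Object": {"Bucket": bucket, "Name": key}},
        # Rekognition publishes the completion status to this SNS topic,
        # which can trigger the second Lambda function.
        NotificationChannel={"SNSTopicArn": sns_topic_arn, "RoleArn": role_arn},
    )
    return response["JobId"]


def collect_lines(rekognition_client, job_id, min_confidence=80.0):
    """Page through GetTextDetection and group LINE detections by timestamp (ms)."""
    frames = {}
    request = {"JobId": job_id}
    while True:
        page = rekognition_client.get_text_detection(**request)
        if page["JobStatus"] != "SUCCEEDED":
            raise RuntimeError(f"text detection job is {page['JobStatus']}")
        for hit in page.get("TextDetections", []):
            detection = hit["TextDetection"]
            # Keep whole lines (not individual WORDs) above the threshold.
            if detection["Type"] == "LINE" and detection["Confidence"] >= min_confidence:
                frames.setdefault(hit["Timestamp"], []).append(detection["DetectedText"])
        if not page.get("NextToken"):
            return frames
        request["NextToken"] = page["NextToken"]


def frames_to_slides(frames):
    """Collapse consecutive frames showing identical text into one slide record."""
    slides = []
    for timestamp in sorted(frames):
        text = "\n".join(frames[timestamp])
        if slides and slides[-1]["text"] == text:
            slides[-1]["end_ms"] = timestamp
        else:
            slides.append({"start_ms": timestamp, "end_ms": timestamp, "text": text})
    return slides
```

Each slide record could then be written to DynamoDB, with timestamps kept as integers (milliseconds) so no float conversion is needed.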

Conclusion

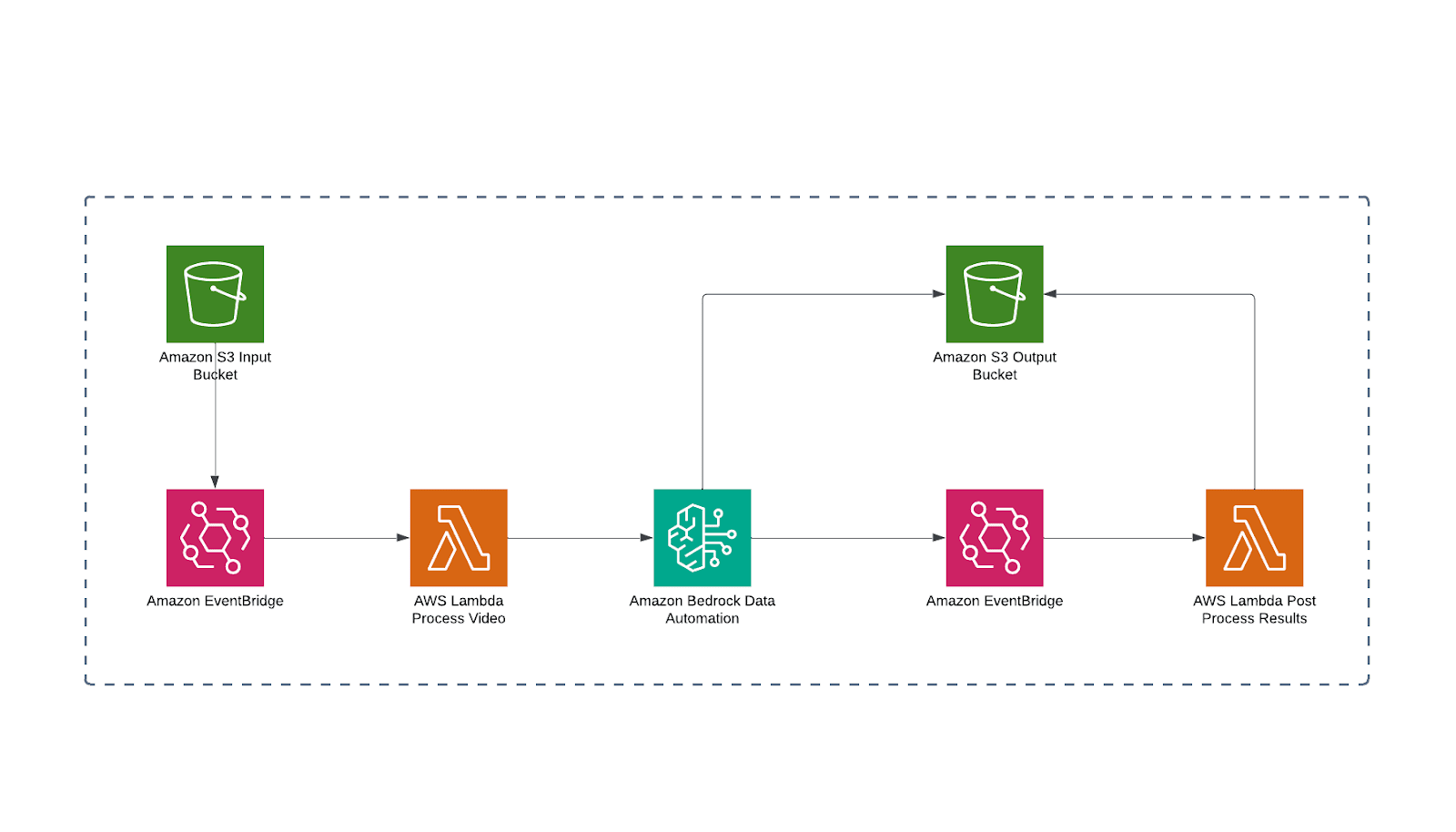

Using AWS for transcription, speaker diarization, and on-screen text identification makes media workflows more efficient, and these capabilities can be streamlined further. Amazon Bedrock Data Automation (BDA) offers an opportunity to unify these features into a single, more cohesive workflow, reducing complexity and increasing automation. As a feature of Amazon Bedrock, BDA enables developers to extract valuable insights from unstructured multimodal content, including documents, images, video, and audio, to build GenAI-based applications. Consolidating these processes into one pipeline simplifies implementation while improving scalability and adaptability for AI-driven media analysis.

About TrackIt

TrackIt is an international AWS cloud consulting, systems integration, and software development firm headquartered in Marina del Rey, CA.

We have built our reputation on helping media companies architect and implement cost-effective, reliable, and scalable Media & Entertainment workflows in the cloud. These include streaming and on-demand video solutions, media asset management, and archiving, incorporating the latest AI technology to build bespoke media solutions tailored to customer requirements.

Cloud-native software development is at the foundation of what we do. We specialize in Application Modernization, Containerization, Infrastructure as Code, and event-driven serverless architectures built on the latest AWS services. Along with our Managed Services offerings, which provide 24/7 cloud infrastructure maintenance and support, we are able to provide complete solutions for the media industry.