Written by Lucas Braga, DevOps Engineer

This tutorial demonstrates how to configure the Deadline Spot Plugin to burst rendering jobs to the AWS cloud.

Contents

- Prerequisites & Limitation – Bursting Render Farms

- AWS Permission Setup

- Configuration

- Resource Tracker Role

- Amazon Machine Images (AMI)

- User Data

- Creating the Launch Template

- Creating the Render Fleet

- Configuring the Deadline Spot Plugin

- Configuration

- Hybrid Rendering

- How to Ensure that Cloud Nodes Are Requested Only When Required

- Disable Jobs in the “none” Group

- Using the Spot Plugin

- Troubleshooting

- About TrackIt

- About Lucas Braga

Prerequisites & Limitation – Bursting Render Farms

There are three prerequisites and one limitation in the environment to consider before setting up the Spot Plugin.

Prerequisite #1: At least one machine must be running Deadline Pulse (or, at minimum, the Remote Connection Server, RCS) for nodes to be requested correctly. It is also preferable to prevent Workers from performing House Cleaning during this process.

Prerequisite #2: To avoid unexpected expenses, the submission of jobs without a group (i.e. jobs in the ‘none’ group) must be disabled.

Prerequisite #3: A reliable connection is required between the Workers’ VPC and the storage hosting the projects and the Deadline repository. This can be achieved using either AWS Direct Connect or a Site-to-Site VPN.

Limitation: Only one Region can be configured per repository in the Spot Plugin. Using multiple Regions requires a separate repository for each Region.

AWS Permission Setup

This section describes the configuration of Roles and Profiles needed for the Spot Event Plugin.

Configuration

Spot Event Plugin Credentials (User)

Spot credentials are used by the Spot Event Plugin and contain the permissions necessary to create, maintain, and modify the Spot Fleets and optionally those permissions necessary to deploy the Resource Tracker. The required permissions can be granted by attaching the following AWS-managed IAM policies to the IAM user:

- AWSThinkboxDeadlineSpotEventPluginAdminPolicy

- AWSThinkboxDeadlineResourceTrackerAdminPolicy

These credentials will be used when configuring Deadline. Readers can consult the step-by-step tutorial on how to create IAM Users here. In the example presented in this tutorial, the user created is called DeadlineSpotEventPluginAdmin.

Spot Fleet IAM Instance Profile (Role)

The Spot Fleet IAM Instance Profile is an IAM role used by the Workers that are started by the Spot Fleet Requests. The role is used to give the Workers permission to terminate themselves, determine what Group they are part of, and report their status to the Resource Tracker (if in use).

The required permissions can be assigned by attaching the following AWS-managed IAM policy to an IAM role.

- AWSThinkboxDeadlineSpotEventPluginWorkerPolicy

Readers can consult the step-by-step tutorial on how to create IAM Roles here. In the example presented in this tutorial, the role created is called DeadlineSpotWorker. Note that the name of this role must begin with “DeadlineSpot”. Additional permissions can be added to the role for Systems Manager access and to join domains seamlessly.

IAM Fleet Role

The IAM Fleet Role is used directly by the Spot Fleet. It gives the Spot Fleet the permissions required to start, stop, and tag instances. By default, a role called aws-ec2-spot-fleet-tagging-role, containing all the required permissions, is created automatically for the account. If this role does not exist, users can assign the following policies to a role:

- AmazonEC2SpotFleetTaggingRole

- AWSThinkboxDeadlineSpotEventPluginWorkerPolicy

- AWSThinkboxDeadlineSpotEventPluginAdminPolicy
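The identities described so far can be kept as a small checklist. The Python sketch below records, for each identity named in this tutorial, the AWS-managed policies the text says it needs, reports any missing from a given set of attached policy names, and checks the “DeadlineSpot” naming rule for the Worker role. The helper names (`REQUIRED_POLICIES`, `missing_policies`, `worker_role_name_ok`) are hypothetical; the attached policy names would come from the IAM console or API.

```python
"""Checklist of the IAM identities and AWS-managed policies used in this tutorial."""

# Required AWS-managed policy names per identity, as listed in this section.
REQUIRED_POLICIES: dict[str, set[str]] = {
    # IAM user whose credentials are entered in the Spot Event Plugin
    "DeadlineSpotEventPluginAdmin": {
        "AWSThinkboxDeadlineSpotEventPluginAdminPolicy",
        "AWSThinkboxDeadlineResourceTrackerAdminPolicy",
    },
    # Instance profile role used by the Workers started by the Spot Fleet
    "DeadlineSpotWorker": {
        "AWSThinkboxDeadlineSpotEventPluginWorkerPolicy",
    },
    # Fleet role used by the Spot Fleet itself (only if the default role is missing)
    "aws-ec2-spot-fleet-tagging-role": {
        "AmazonEC2SpotFleetTaggingRole",
        "AWSThinkboxDeadlineSpotEventPluginWorkerPolicy",
        "AWSThinkboxDeadlineSpotEventPluginAdminPolicy",
    },
}


def missing_policies(identity: str, attached: set[str]) -> set[str]:
    """Return the required policy names that are not in `attached`."""
    return REQUIRED_POLICIES[identity] - attached


def worker_role_name_ok(role_name: str) -> bool:
    """The Worker role name has to begin with "DeadlineSpot"."""
    return role_name.startswith("DeadlineSpot")


if __name__ == "__main__":
    print(worker_role_name_ok("DeadlineSpotWorker"))  # True
```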

Resource Tracker Role

The Resource Tracker Role is an IAM role used by Deadline Resource Tracker to access the AWS resources that it creates in the account. The IAM role must have the following settings:

- Trusted Entity: AWS Service: Lambda

- Permissions policies: AWSThinkboxDeadlineResourceTrackerAccessPolicy (AWS managed IAM policy)

- Role name: DeadlineResourceTrackerAccessRole
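Choosing Lambda as the trusted entity in the IAM console produces a trust policy that allows the Lambda service to assume the role. As a sketch, the equivalent trust policy for DeadlineResourceTrackerAccessRole can be built in Python; the helper name is hypothetical, and the JSON string could be passed as `AssumeRolePolicyDocument` when creating the role through the IAM API (for example with boto3's `iam.create_role`). The managed policy is attached separately.

```python
import json

# Role settings from this section
ROLE_NAME = "DeadlineResourceTrackerAccessRole"
MANAGED_POLICY = "AWSThinkboxDeadlineResourceTrackerAccessPolicy"


def lambda_trust_policy() -> dict:
    """Trust policy that lets AWS Lambda assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "lambda.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


if __name__ == "__main__":
    # JSON text suitable for the AssumeRolePolicyDocument parameter
    print(json.dumps(lambda_trust_policy(), indent=2))
```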

Amazon Machine Images (AMI)

When creating Spot Fleet Requests, an Amazon Machine Image (AMI) is required for each Spot Fleet. The AMIs represent the base states for each of the instances. Readers can consult a tutorial on how to create a custom AMI here.

User Data

To ensure the proper functioning of the fleet, certain procedures need to run when each instance starts. PowerShell scripts are written for this purpose and later added to the launch template as user data. For domain-bound instances, each instance has to join the domain at launch and leave it at termination. For more information, readers can consult the tutorial on how to manage domain membership of a dynamic fleet of EC2 instances.
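On Windows AMIs, EC2Launch runs user data enclosed in `<powershell>` tags, and when a launch template is created through the EC2 API rather than the console, the `UserData` field must be base64-encoded. The Python sketch below wraps a PowerShell script and encodes it. The script body in the usage example is a hypothetical placeholder, not the domain join/unjoin scripts referenced above.

```python
import base64


def encode_windows_user_data(powershell_script: str) -> str:
    """Wrap a PowerShell script in <powershell> tags and base64-encode it."""
    body = f"<powershell>\n{powershell_script}\n</powershell>"
    # The EC2 API expects base64 text for LaunchTemplateData.UserData
    return base64.b64encode(body.encode("utf-8")).decode("ascii")


if __name__ == "__main__":
    # Hypothetical placeholder script
    print(encode_windows_user_data('Write-Output "join the domain here"'))
```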

Creating the Launch Template

A Spot Fleet Request defines a collection of Spot instances and their launch parameters. The Spot Event Plugin uses a separate Spot Fleet Request for each Deadline Group. With the images, roles, and users already created, this section covers the creation of the launch template and of the JSON (JavaScript Object Notation) configuration that will be used to request the fleet.

1) Go to Services → EC2 and click AMIs

2) Find the AMI to be used as a base for the launch template, right-click it and choose Images and templates → Create template from instance

3) Create a name for the launch template

4) Create a description for the launch template

5) Make sure the AMI ID is the one originally selected

6) Under Storage (volumes), select the volume size. The size can only be increased relative to the image, so create the image with a volume just above the minimum required size rather than an oversized one

7) Under Resource tags, click Add tag (this step is required for the plugin to work)

- a) Set Key to DeadlineTrackedAWSResource

- b) Set Value to SpotEventPlugin

8) Under Network interfaces, make sure that Auto-assign public IP is set. Set the subnet to Production Private Subnet 1 and the Security group ID to the one corresponding to deadline-spot-SG

9) Open Advanced Details

- a) Set IAM instance profile as DeadlineSpotWorker

- b) Set Shutdown behavior to Don’t include in launch template

- c) Set EBS-optimized instance to Don’t include in launch template

10) Click Create launch template
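For readers who prefer the API to the console, the settings from the steps above map onto the `LaunchTemplateData` structure of the EC2 `CreateLaunchTemplate` call. This is a sketch under assumptions: the AMI ID, subnet ID, security group ID, volume size, and root device name are hypothetical placeholders, and the tag is assumed to apply to instances. The resulting dictionary could be passed to boto3 as `ec2.create_launch_template(LaunchTemplateName=..., LaunchTemplateData=...)`.

```python
def build_launch_template_data(
    ami_id: str,
    subnet_id: str,
    security_group_id: str,
    volume_size_gib: int,
    associate_public_ip: bool,
    root_device: str = "/dev/sda1",  # hypothetical; must match the AMI's root device
) -> dict:
    """Assemble LaunchTemplateData mirroring the console steps (placeholder values)."""
    return {
        "ImageId": ami_id,  # step 5: the AMI originally selected
        "BlockDeviceMappings": [  # step 6: root volume size in GiB
            {"DeviceName": root_device, "Ebs": {"VolumeSize": volume_size_gib}}
        ],
        "TagSpecifications": [  # step 7: tag required by the plugin
            {
                "ResourceType": "instance",
                "Tags": [{"Key": "DeadlineTrackedAWSResource", "Value": "SpotEventPlugin"}],
            }
        ],
        "NetworkInterfaces": [  # step 8: subnet, security group, public IP setting
            {
                "DeviceIndex": 0,
                "SubnetId": subnet_id,
                "Groups": [security_group_id],
                "AssociatePublicIpAddress": associate_public_ip,
            }
        ],
        "IamInstanceProfile": {"Name": "DeadlineSpotWorker"},  # step 9a
        # Steps 9b and 9c ("Don't include in launch template"): the
        # InstanceInitiatedShutdownBehavior and EbsOptimized keys are omitted.
    }
```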

Creating the Render Fleet

A Spot Request needs to be created to enable the automatic launching of Workers by Deadline:

1) In the EC2 Console, click on Spot Requests on the left

2) Click Request Spot Instances

3) Under Launch parameters, select Use a launch template and choose the template created in the previous section

4) Most of the settings can be left as default

5) Scroll down and set the Total target capacity

6) Select the checkbox next to Maintain Target Capacity

7) Once ready, DO NOT CLICK Launch

8) Scroll down to the bottom and click JSON config in the bottom-right corner. This downloads a file called config.json, which will be modified and used as the base for the requests

Below is the edited version of the config.json file, keyed by the Deadline Group name (“awsspot”). An “Overrides” field was added under “LaunchTemplateConfigs” to further filter instance types and assign weights and priorities. Entries for other group names can be added to create additional fleets. The “Version” field has been changed to $Latest.

```json
{
  "awsspot": {
    "IamFleetRole": "arn:aws:iam:::role/aws-ec2-spot-fleet-tagging-role",
    "AllocationStrategy": "capacityOptimizedPrioritized",
    "TargetCapacity": 4,
    "ValidFrom": "2022-07-25T02:52:51.000Z",
    "ValidUntil": "2023-07-25T02:52:51.000Z",
    "TerminateInstancesWithExpiration": true,
    "Type": "maintain",
    "OnDemandAllocationStrategy": "lowestPrice",
    "LaunchSpecifications": [],
    "LaunchTemplateConfigs": [
      {
        "LaunchTemplateSpecification": {
          "LaunchTemplateId": "-",
          "Version": "$Latest"
        },
        "Overrides": [
          { "InstanceType": "c6i.16xlarge", "WeightedCapacity": 1, "SubnetId": "-", "Priority": 1 },
          { "InstanceType": "c6i.16xlarge", "WeightedCapacity": 1, "SubnetId": "-", "Priority": 1 },
          { "InstanceType": "m6i.12xlarge", "WeightedCapacity": 1, "SubnetId": "-", "Priority": 5 },
          { "InstanceType": "m6i.12xlarge", "WeightedCapacity": 1, "SubnetId": "-", "Priority": 5 },
          { "InstanceType": "m6i.8xlarge", "WeightedCapacity": 1, "SubnetId": "-", "Priority": 3 },
          { "InstanceType": "m6i.8xlarge", "WeightedCapacity": 1, "SubnetId": "-", "Priority": 3 },
          { "InstanceType": "r6i.12xlarge", "WeightedCapacity": 1, "SubnetId": "-", "Priority": 6 },
          { "InstanceType": "r6i.12xlarge", "WeightedCapacity": 1, "SubnetId": "-", "Priority": 6 },
          { "InstanceType": "r6i.8xlarge", "WeightedCapacity": 1, "SubnetId": "-", "Priority": 4 },
          { "InstanceType": "r6i.8xlarge", "WeightedCapacity": 1, "SubnetId": "-", "Priority": 4 },
          { "InstanceType": "r6i.4xlarge", "WeightedCapacity": 1, "SubnetId": "-", "Priority": 2 },
          { "InstanceType": "r6i.4xlarge", "WeightedCapacity": 1, "SubnetId": "-", "Priority": 2 }
        ]
      }
    ]
  }
}
```
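Because the override list repeats each instance type with the same weight and priority, it can be easier to generate than to edit by hand. The Python sketch below rebuilds a group's Spot Fleet Request configuration in the same shape as the file above, emitting one override per instance type per subnet. It assumes the duplicated entries correspond to two different subnets (the IDs are redacted as "-" above); the account ID, template ID, and subnet IDs in the usage example are hypothetical. With `capacityOptimizedPrioritized`, Spot Fleet honors priorities on a best-effort basis, and a lower number means a higher priority.

```python
import json

# Instance types and priorities from the example configuration (lower = preferred).
PRIORITIES = {
    "c6i.16xlarge": 1,
    "r6i.4xlarge": 2,
    "m6i.8xlarge": 3,
    "r6i.8xlarge": 4,
    "m6i.12xlarge": 5,
    "r6i.12xlarge": 6,
}


def build_overrides(priorities: dict[str, int], subnet_ids: list[str], weight: int = 1) -> list[dict]:
    """One override per (instance type, subnet) pair, ordered by priority."""
    return [
        {"InstanceType": itype, "WeightedCapacity": weight, "SubnetId": subnet, "Priority": prio}
        for itype, prio in sorted(priorities.items(), key=lambda kv: kv[1])
        for subnet in subnet_ids
    ]


def build_sfr_config(group: str, fleet_role_arn: str, template_id: str,
                     subnet_ids: list[str], priorities: dict[str, int],
                     target_capacity: int, valid_from: str, valid_until: str) -> dict:
    """Group -> Spot Fleet Request mapping, as pasted into the plugin settings."""
    return {
        group: {
            "IamFleetRole": fleet_role_arn,
            "AllocationStrategy": "capacityOptimizedPrioritized",
            "TargetCapacity": target_capacity,
            "ValidFrom": valid_from,
            "ValidUntil": valid_until,
            "TerminateInstancesWithExpiration": True,
            "Type": "maintain",
            "OnDemandAllocationStrategy": "lowestPrice",
            "LaunchSpecifications": [],
            "LaunchTemplateConfigs": [
                {
                    "LaunchTemplateSpecification": {"LaunchTemplateId": template_id, "Version": "$Latest"},
                    "Overrides": build_overrides(priorities, subnet_ids),
                }
            ],
        }
    }


if __name__ == "__main__":
    config = build_sfr_config(
        group="awsspot",
        fleet_role_arn="arn:aws:iam::123456789012:role/aws-ec2-spot-fleet-tagging-role",  # hypothetical account
        template_id="lt-0123456789abcdef0",  # hypothetical
        subnet_ids=["subnet-aaaa", "subnet-bbbb"],  # hypothetical
        priorities=PRIORITIES,
        target_capacity=4,
        valid_from="2022-07-25T02:52:51.000Z",
        valid_until="2023-07-25T02:52:51.000Z",
    )
    print(json.dumps(config, indent=2))
```

Generating the file this way keeps weights and priorities consistent across subnets when an instance type is added or reprioritized.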

Configuring the Deadline Spot Plugin

This section covers the configuration of the Deadline Spot Plugin. To access the plugin, users need to switch to Super User mode in the Deadline Monitor.

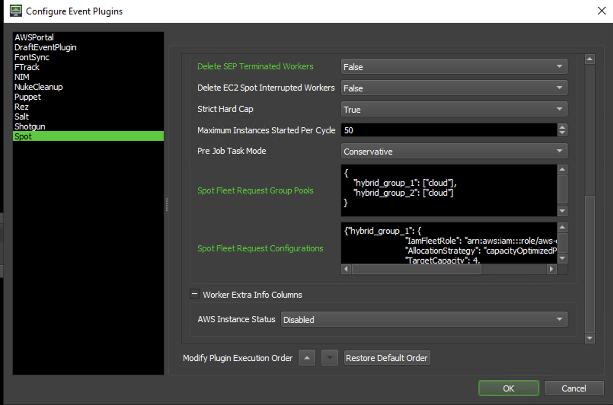

Go to Tools → Configure Events → Spot. The following fields need to be configured:

- State: Specifies how the Event Plugin responds to Events. If set to Global Enabled, all Jobs and Workers trigger the Events for this Plugin. If set to Disabled, no Events are triggered for this Plugin. Set to Global Enabled.

- Enable Resource Tracker: Deadline Resource Tracker will help optimize resources by terminating instances and Spot Fleet Requests that don’t appear to be behaving as expected. Set to True.

- Use Local Credentials: Specifies whether to use local AWS credentials (found in ~/.aws/credentials on Linux and macOS, or %USERPROFILE%\.aws\credentials on Windows). When local credentials are used, the Access Key ID and Secret Access Key fields can be left blank. Using local credentials is recommended.

- AWS Named Profile: The AWS Named Profile that contains the Spot Fleet credentials. Only required if Use Local Credentials is set to True. For more information on AWS Named Profiles and how to create them, readers can consult the Named profiles for the AWS CLI guide.

- Access Key ID: The AWS Access Key ID for the Spot credentials (either an administrator account or the DeadlineSpotEventPluginAdmin user).

- Secret Access Key: The AWS Secret Access Key for the chosen user.
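When Use Local Credentials is enabled, the named profile has to exist in the shared credentials file on the machine where the plugin runs (presumably the Pulse or RCS machine from Prerequisite #1). The Python sketch below uses the standard library's `configparser` to check that a profile defines both keys. Naming the profile DeadlineSpotEventPluginAdmin is an assumption for illustration; any name works as long as it matches the AWS Named Profile field.

```python
import configparser
from pathlib import Path

REQUIRED_KEYS = ("aws_access_key_id", "aws_secret_access_key")


def default_credentials_path() -> Path:
    """~/.aws/credentials, which resolves to %USERPROFILE%\\.aws\\credentials on Windows."""
    return Path.home() / ".aws" / "credentials"


def profile_is_complete(credentials_text: str, profile: str) -> bool:
    """True if `profile` exists and defines a non-empty value for both keys."""
    parser = configparser.ConfigParser()
    parser.read_string(credentials_text)
    if not parser.has_section(profile):
        return False
    return all(parser.get(profile, key, fallback="").strip() for key in REQUIRED_KEYS)


if __name__ == "__main__":
    path = default_credentials_path()
    text = path.read_text() if path.exists() else ""
    # Hypothetical profile name; use the value entered in AWS Named Profile
    print(profile_is_complete(text, "DeadlineSpotEventPluginAdmin"))
```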

Configuration

- Logging Level: Controls how much detail the plugin logs. Select Verbose for detailed logging about the inner workings of the Spot Event Plugin. Select Debug for all Verbose logs plus additional information on the AWS API calls that are made. Default: Standard.

- Region: The AWS Region in which to start the Spot Fleet Requests (us-west-2 in this example).

- Idle Shutdown: Number of minutes that an AWS Worker waits in a non-rendering state before it is shut down. Default: 10.

- Delete SEP Terminated Workers: If enabled, AWS Workers terminated by the Deadline Spot Event Plugin are deleted from the Workers Panel on the next House Cleaning cycle. Warning: each terminated Worker’s reports are also deleted, which may be a problem when the reports are needed later to debug a render Job issue. To keep the reports, set this field to False.

- Delete EC2 Spot Interrupted Workers: If enabled, AWS Workers interrupted by EC2 Spot are deleted from the Workers Panel on the next House Cleaning cycle. Default: False.

- Pre Job Task Mode: How the Spot Event Plugin handles Pre Job Tasks. Default: Conservative.

- Conservative: Starts only one Spot instance for the Pre Job Task and ignores any other Tasks for that Job until the Pre Job Task is completed.

- Ignore: Does not take the Pre Job Task into account when calculating target capacity.

- Normal: Treats the Pre Job Task like a regular queued Task.

- Spot Fleet Request Group Pools: A mapping between the Groups and Pools. Example:

```json
{ "group_name": ["pool1", "pool2"], "2nd_group_name": ["pool3"] }
```

- Spot Fleet Request Configurations: A mapping between the Groups and Spot Fleet Requests (SFRs). Paste the contents of the edited config.json file from the previous section here.

- AWS Instance Status: If enabled, the Worker Extra Info X column will be used to display the AWS Instance Status if the instance has been marked to stop or terminated by EC2 or Spot Event Plugin. All timestamps are displayed in UTC format. Default: Disabled
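Both mapping fields above are keyed by Deadline Group, so a group listed in Spot Fleet Request Group Pools without a matching Spot Fleet Request Configuration is likely a typo. The Python sketch below parses both fields as JSON and lists such mismatches. Treating a mismatch as an error is an extra sanity check of our own, not documented plugin behavior. As a side benefit, `json.loads` rejects the typographic quotes that often slip in when examples are copied from web pages.

```python
import json


def check_group_mappings(group_pools_json: str, sfr_configs_json: str) -> list[str]:
    """Return problems found when comparing the two plugin fields (raises on invalid JSON)."""
    group_pools = json.loads(group_pools_json)
    sfr_configs = json.loads(sfr_configs_json)
    problems = []
    for group, pools in group_pools.items():
        if group not in sfr_configs:
            problems.append(f"group '{group}' has pools but no Spot Fleet Request configuration")
        if not isinstance(pools, list) or not all(isinstance(p, str) for p in pools):
            problems.append(f"group '{group}' must map to a list of pool names")
    return problems


if __name__ == "__main__":
    print(check_group_mappings('{"awsspot": ["cloud"]}', '{"awsspot": {}}'))  # []
```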

Hybrid Rendering

For users who want bursting render farm capabilities, the following steps are required:

- Separate the nodes into Pools: configure all the on-premise nodes to be part of one “on-premise” pool, then configure the Spot Plugin so that the nodes it creates are attached to a “cloud” pool. Below is an example of the Spot Fleet Request Group Pools setting in the Spot Plugin configuration:

```json
{ "hybrid_group_1": ["cloud"], "hybrid_group_2": ["cloud"] }
```

- Add all instances that have rendering capability to the hybrid group

- Configure the Spot Plugin by editing the request to add this hybrid group

- Alternatively, instead of using a dedicated hybrid group, every existing group can be configured to burst through the Spot Plugin. In that case, configure a request for each group in the Spot Plugin and set the pools accordingly. Users can either build a separate AMI for every group or install everything in the same AMI and use the same request for all groups.

- With this configuration, on-premise nodes have priority and cloud nodes are only requested when on-premise resources are insufficient. For example, if a job with 4 frames is submitted and only 2 on-premise nodes are available, those 2 nodes pick up 2 frames and the remaining 2 frames trigger 2 AWS Spot Instances.

- Users can have a full cloud group by not attaching any on-premise nodes to the group. In that case, pools do not need to be set.
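The worked example in the list above (4 frames, 2 available on-premise nodes, 2 Spot instances) can be expressed as a simplified model. The sketch assumes one task per node and a hypothetical `max_cloud_nodes` cap standing in for the fleet size; the plugin's actual capacity calculation also depends on factors such as Pre Job Task Mode and Workers already running, so this only illustrates the hybrid behavior.

```python
def cloud_nodes_needed(queued_tasks: int, idle_onprem_nodes: int, max_cloud_nodes: int) -> int:
    """Tasks left after idle on-premise nodes take their share, capped by the fleet size."""
    if min(queued_tasks, idle_onprem_nodes, max_cloud_nodes) < 0:
        raise ValueError("counts must be non-negative")
    # On-premise nodes have priority; only the overflow is sent to the cloud.
    overflow = max(0, queued_tasks - idle_onprem_nodes)
    return min(overflow, max_cloud_nodes)


if __name__ == "__main__":
    # Example from the text: 4 frames, 2 idle on-premise nodes
    print(cloud_nodes_needed(4, 2, max_cloud_nodes=4))  # 2
```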

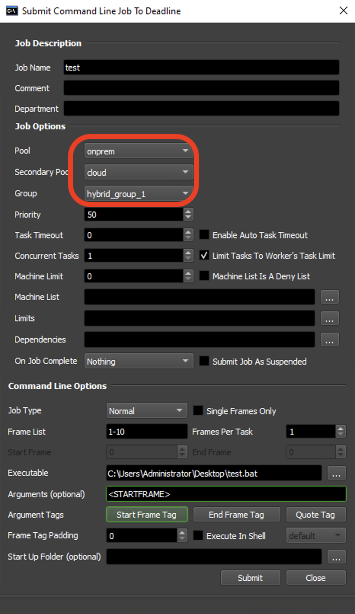

How to Ensure that Cloud Nodes Are Requested Only When Required

When submitting jobs, it is important to choose the desired hybrid group (for example “hybrid_group”). In the Pool options, set the primary Pool to “onprem” and the Secondary Pool to “cloud”. Otherwise, cloud nodes may be requested even when on-premise nodes are sufficient.

Pool Submission Options

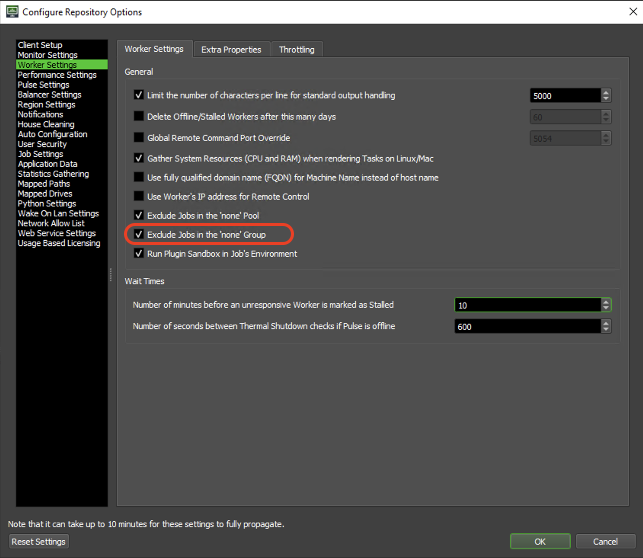

Disable Jobs in the “none” Group

The Spot Plugin keeps instances running as long as they have work, and cloud nodes cannot be selectively prevented from taking jobs in the “none” group. If jobs in the “none” group are allowed, cloud nodes can pick them up and keep running when they are not required, leading to unpredictable costs.

The only way to prevent cloud nodes from picking up jobs in the “none” group is to prevent all nodes from taking them. This setting is available under Configure Repository Options → Worker Settings → Exclude Jobs in the ‘none’ Group, and configuring it requires Super User access. It can also be useful to adopt the practice of not accepting jobs in the “none” pool going forward, which avoids unpredictable behavior.

Repository Options: Exclude Jobs in the ‘none’ Group

Using the Spot Plugin

There are no changes from the end user’s perspective: the user selects the group configured in the plugin, and the request is automatically sent to AWS. Starting and configuring instances takes an estimated 10 to 12 minutes. If the new instances take longer than 15 minutes to appear on the Dashboard, check the logs as described in the Troubleshooting section.

Troubleshooting

This section covers some key troubleshooting areas.

- When a job is submitted and Workers take too long to show up, the first place to check in the AWS console is EC2 → Spot Requests, to see whether there are any active requests.

- If there’s an active request, check the status

- If the status is active but unfulfilled, check the request history by clicking the corresponding ID. In most cases, this is due to unavailable capacity, and the user has to wait until resources become available. A detailed explanation of the lifecycle of Spot Requests can be found here.

- If the status is fulfilled, investigate why the Workers are not showing up. The most common causes are as follows:


- The maximum number of computer accounts that the user is allowed to create in the domain has been exceeded. If this is the case, removing one “Zombie Instance” from the domain before adding another should correct the issue.

- Failed to run User Data: connect to the instance using Session Manager, then check C:\ProgramData\Amazon\EC2Launch\Logs\ for anything abnormal.

- For further help, CloudWatch log groups have been created to help troubleshoot User Data and Site-to-Site VPN communication.

- If there is no request at all, check CloudTrail → Event History for the corresponding request.

- Another common cause is that the JSON used in the plugin is not fully compatible with the Spot Fleet request format. In this case, the requests run in --dry-run mode only, for troubleshooting purposes, and the event shows the reason so that it can be corrected. Missing user permissions may also be the cause; in that case, check the Access Keys set in the plugin.

- If there’s no request at all, another frequent cause is that the Resource Tracker has flagged the fleet as unhealthy. As a precaution against unnecessary costs, the unhealthy flag is added to the fleet and blocks new requests. When logged in as a Super User, the flag can be seen in the bottom-right corner of the Deadline Monitor. After investigating the cause, double-click it and enter admin credentials to unlock the requests.

- Check that the plugin State is set to Global Enabled.
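The first checks in this list can also be scripted. The Python sketch below takes a response in the shape returned by the EC2 `DescribeSpotFleetRequests` API (for example from boto3's `ec2.describe_spot_fleet_requests()`) and suggests the next step from this checklist. The mapping from request state and activity status to advice is our own summary of this section, and the sample responses in the usage example are hypothetical.

```python
def next_step(response: dict) -> str:
    """Suggest a troubleshooting step from a DescribeSpotFleetRequests-style response."""
    configs = response.get("SpotFleetRequestConfigs", [])
    active = [c for c in configs if c.get("SpotFleetRequestState") == "active"]
    if not active:
        # No request at all: look for the failed call, then for an unhealthy flag
        return "No active request: check CloudTrail Event History and the Resource Tracker flag"
    for config in active:
        status = config.get("ActivityStatus")
        request_id = config.get("SpotFleetRequestId", "unknown")
        if status == "fulfilled":
            return f"{request_id} is fulfilled: check domain account limits and User Data logs"
        if status in ("pending_fulfillment", "error"):
            return f"{request_id} is unfulfilled ({status}): review its request history"
    return "Active request found: review its status in EC2 -> Spot Requests"


if __name__ == "__main__":
    sample = {"SpotFleetRequestConfigs": [
        {"SpotFleetRequestId": "sfr-example", "SpotFleetRequestState": "active",
         "ActivityStatus": "pending_fulfillment"},
    ]}
    print(next_step(sample))
```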

About TrackIt

TrackIt is an international AWS cloud consulting, systems integration, and software development firm headquartered in Marina del Rey, CA.

We have built our reputation on helping media companies architect and implement cost-effective, reliable, and scalable Media & Entertainment workflows in the cloud. These include streaming and on-demand video solutions, media asset management, and archiving, incorporating the latest AI technology to build bespoke media solutions tailored to customer requirements.

Cloud-native software development is at the foundation of what we do. We specialize in Application Modernization, Containerization, Infrastructure as Code and event-driven serverless architectures by leveraging the latest AWS services. Along with our Managed Services offerings which provide 24/7 cloud infrastructure maintenance and support, we are able to provide complete solutions for the media industry.

About Lucas Braga

A Data Scientist by training and DevOps engineer by experience, Lucas has been a DevOps Engineer at TrackIt since 2021. With 8 years of experience spanning Media & Entertainment, TV, and Design, he brings a unique perspective to projects.

An out-of-the-box thinker and serial problem solver, Lucas excels at finding innovative solutions.