Written by Maxime Roth Fessler, DevOps & Backend Developer at TrackIt

As generative AI gains traction across industries, deploying models that rely on GPU acceleration has become a key concern for both developers and infrastructure teams. Tools like Fooocus, an AI image generator that enables local inference using advanced models, require environments that can dynamically allocate compute resources while minimizing operational overhead. Amazon EKS, with Auto Mode, provides a flexible platform to meet these requirements.

This article outlines strategies for deploying GPU-backed infrastructure and presents a case study on deploying Fooocus using Amazon EKS (Elastic Kubernetes Service). Key challenges and benefits from the deployment process are also discussed.


Why Deploy Fooocus on EKS?

Amazon EKS offers several advantages for deploying GPU-intensive applications like Fooocus. It scales elastically to accommodate fluctuating workloads and helps control cost by allocating resources dynamically, so GPU nodes run only while workloads need them. Monitoring and alerting capabilities enable faster issue detection and resolution. EKS also integrates with infrastructure-as-code tools such as Terraform, supporting automated, consistent deployments.

For teams that already run Kubernetes, deploying Fooocus on EKS builds on their existing familiarity with container orchestration.

What is Fooocus?

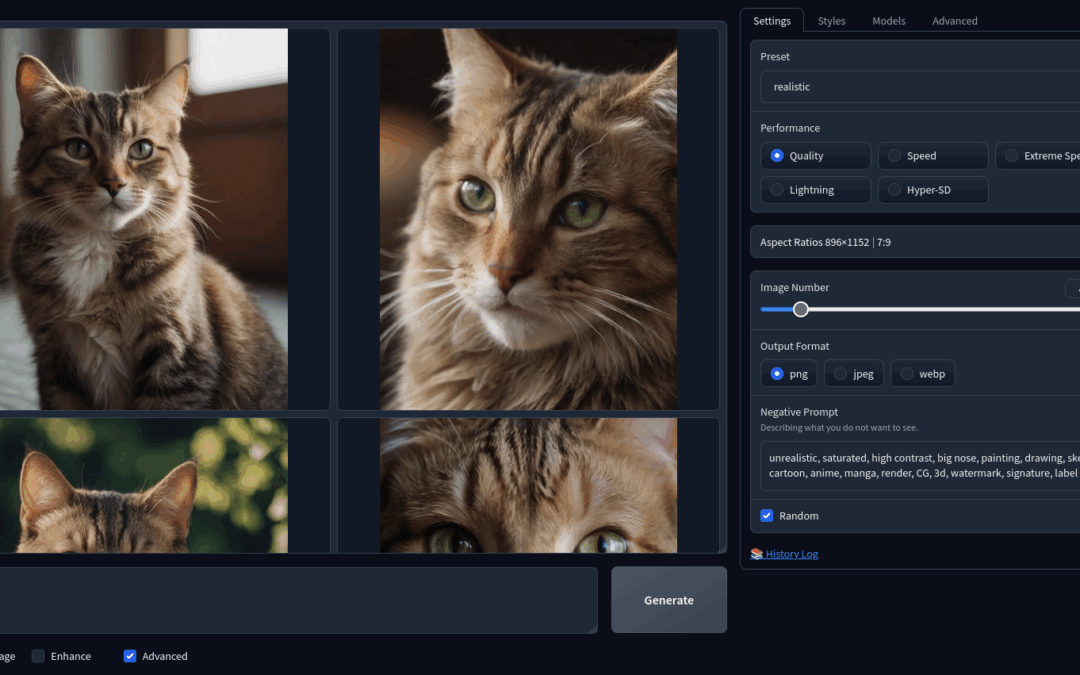

Fooocus is an open-source AI-powered image generation tool that enables the creation of high-quality visuals using user-friendly prompts. It streamlines the image generation process through automation and supports features like GPT-2-based prompt expansion. The software runs in both local and cloud-based environments and is compatible with a wide range of models, offering flexibility for a variety of creative and professional use cases.

Practical Use Cases for Fooocus

Fooocus supports a wide range of applications, including:

- Generating high-quality visuals for blogs and social media

- Rapid prototyping for design and creative projects

- Producing illustrations for educational or artistic content

- Enhancing marketing collateral with AI-generated imagery

- Creating personalized product visuals tailored to end-user preferences

Its versatility makes it a valuable tool for content creators, educators, marketers, and developers alike.

Deploying Fooocus with EKS Auto Mode

Fooocus is typically installed via a setup script that configures the environment and downloads the required models. It also supports Docker and Docker Compose for containerized deployments. In the example presented in this article, the deployment leverages the Docker image as the foundation.

This implementation is based on a fork of the AWS sample project DeepSeek using vLLM on EKS, which has been generalized to support both image generators like Fooocus and language models such as DeepSeek. The approach extends the original functionality to meet the specific demands of AI image generation.

Infrastructure provisioning is handled using Terraform, which sets up the EKS cluster and supporting services, such as Amazon ECR for storing container images. Kubernetes manifests are managed via Helm so that settings such as the replica count or image URL can be configured per deployment. Docker is used to build the container image and push it to the ECR registry.
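To illustrate what "dynamic configuration" means here, the following is a minimal Python sketch that mimics what a Helm template does: it fills a Deployment manifest from a small set of values. It is not the chart from the repository; the function name `render_deployment`, the value names, and the ECR image URL (with a placeholder account ID and region) are all assumptions made for illustration.

```python
import json


def render_deployment(name: str, image_url: str, replica_count: int = 1) -> dict:
    """Build a Kubernetes Deployment manifest from a few input values.

    This mirrors the role of a Helm template: the structure is fixed,
    while the replica count and image URL come from configuration.
    """
    if replica_count < 0:
        raise ValueError("replica_count must be non-negative")
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "replicas": replica_count,
            "selector": {"matchLabels": {"app": name}},
            "template": {
                "metadata": {"labels": {"app": name}},
                "spec": {"containers": [{"name": name, "image": image_url}]},
            },
        },
    }


# Hypothetical values; the account ID, region, and tag are placeholders.
values = {
    "name": "fooocus",
    "image_url": "123456789012.dkr.ecr.us-west-2.amazonaws.com/fooocus:latest",
    "replica_count": 1,
}

manifest = render_deployment(**values)
print(json.dumps(manifest, indent=2))
```

Changing `replica_count` or `image_url` in `values` produces a different manifest without touching the template logic, which is the same separation Helm provides between a chart and its values file.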

The repository used to deploy Fooocus: https://github.com/trackit/eks-auto-mode-gpu

How are GPUs handled with EKS Auto Mode?

By default, EKS Auto Mode provisions a general-purpose node pool, which is optimized for workloads not requiring GPUs. For GPU-intensive tasks, a dedicated GPU-enabled node pool must be configured.

Key configuration steps include:

- Taints: Apply a GPU-specific taint (e.g., nvidia.com/gpu=Exists) to the GPU node pool so that only pods tolerating it are scheduled there.

- Tolerations: Add matching tolerations in pod specifications.

- Instance Types: Restrict the node pool to EC2 instance families that include GPUs (e.g., G4, G5, P4, P5).

- Labels: Label nodes with GPU metadata for scheduling awareness.

- Resource Requests: Define GPU resource requests and limits in Kubernetes manifests.
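The node pool side of these steps can be sketched as follows. This is a hedged example, not the manifest from the repository: it assumes that EKS Auto Mode node pools are declared as Karpenter-style `NodePool` objects referencing the built-in `default` node class, and that `eks.amazonaws.com/instance-family` is the label used to restrict instance families. The pool name, the `workload-type` label, and the chosen families are hypothetical and should be checked against the EKS Auto Mode documentation before use.

```python
import json


def gpu_node_pool(name: str, instance_families: list[str]) -> dict:
    """Build a NodePool manifest for GPU nodes (field names are assumptions)."""
    return {
        "apiVersion": "karpenter.sh/v1",
        "kind": "NodePool",
        "metadata": {"name": name},
        "spec": {
            "template": {
                # Label: lets pods select GPU nodes explicitly (hypothetical key).
                "metadata": {"labels": {"workload-type": "gpu"}},
                "spec": {
                    "nodeClassRef": {
                        "group": "eks.amazonaws.com",
                        "kind": "NodeClass",
                        "name": "default",
                    },
                    # Taint: keeps pods without a matching toleration off GPU nodes.
                    "taints": [
                        {"key": "nvidia.com/gpu", "value": "Exists", "effect": "NoSchedule"}
                    ],
                    # Instance types: only GPU-equipped families are allowed.
                    "requirements": [
                        {
                            "key": "eks.amazonaws.com/instance-family",
                            "operator": "In",
                            "values": instance_families,
                        }
                    ],
                },
            }
        },
    }


# Example families only; pick the ones that match the model's memory needs.
node_pool = gpu_node_pool("gpu-nodepool", ["g4dn", "g5"])
print(json.dumps(node_pool, indent=2))
```

Because Kubernetes accepts JSON as well as YAML, the printed output could be applied with `kubectl apply -f -` once the field names have been verified.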

No additional setup, such as installing the NVIDIA device plugin, is required. When a pod requests a GPU, EKS Auto Mode automatically provisions a suitable node with the necessary GPU hardware.
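On the workload side, a pod triggers GPU provisioning by requesting the `nvidia.com/gpu` resource and tolerating the GPU taint. The sketch below builds such a pod manifest in Python. It is an illustration under assumptions: the pod name, the `workload-type: gpu` node label, and the image URL are hypothetical, and the toleration assumes a `NoSchedule` taint with the key `nvidia.com/gpu` on the GPU nodes.

```python
import json


def gpu_pod(name: str, image_url: str, gpu_count: int = 1) -> dict:
    """Build a Pod manifest that requests GPUs and tolerates the GPU taint."""
    if gpu_count < 1:
        raise ValueError("gpu_count must be at least 1")
    # For extended resources such as GPUs, requests and limits must be equal.
    gpu_resources = {"nvidia.com/gpu": str(gpu_count)}
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # Hypothetical label; it must match a label set on the GPU nodes.
            "nodeSelector": {"workload-type": "gpu"},
            # operator "Exists" tolerates the taint key regardless of its value.
            "tolerations": [
                {"key": "nvidia.com/gpu", "operator": "Exists", "effect": "NoSchedule"}
            ],
            "containers": [
                {
                    "name": name,
                    "image": image_url,
                    "resources": {
                        "requests": dict(gpu_resources),
                        "limits": dict(gpu_resources),
                    },
                }
            ],
        },
    }


# Placeholder image URL; replace with the image pushed to your own registry.
pod = gpu_pod("fooocus", "123456789012.dkr.ecr.us-west-2.amazonaws.com/fooocus:latest")
print(json.dumps(pod, indent=2))
```

Without the toleration the pod would stay pending, since the taint keeps it off GPU nodes; without the GPU resource request the scheduler would have no reason to place it on GPU hardware at all.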

Conclusion

Deploying Fooocus on Amazon EKS Auto Mode showcases the power of Kubernetes in orchestrating GPU-intensive AI workloads. The integration of Terraform, Helm, and Docker enables automated, scalable, and production-ready deployments. This setup not only simplifies the deployment of tools like Fooocus but also provides a reusable foundation for a wide variety of GPU-accelerated applications, including large language models and other machine learning systems.

By abstracting away the complexities of GPU configuration and infrastructure scaling, this deployment strategy allows teams to focus on innovation and content generation, driving value in creative, educational, and commercial domains.

About TrackIt

TrackIt is an international AWS cloud consulting, systems integration, and software development firm headquartered in Marina del Rey, CA.

We have built our reputation on helping media companies architect and implement cost-effective, reliable, and scalable Media & Entertainment workflows in the cloud. These include streaming and on-demand video solutions, media asset management, and archiving, incorporating the latest AI technology to build bespoke media solutions tailored to customer requirements.

Cloud-native software development is at the foundation of what we do. We specialize in Application Modernization, Containerization, Infrastructure as Code, and event-driven serverless architectures by leveraging the latest AWS services. Along with our Managed Services offerings, which provide 24/7 cloud infrastructure maintenance and support, we are able to provide complete solutions for the media industry.