If you landed on this page from Google, look no further. You are looking for the command “show storagearray longrunningoperations;” 🙂

Yesterday one of our clients had an issue during the upgrade from SNFS4 to SNFS5. While troubleshooting the issue we had to fail and replace one of the metadata drives in the NetApp E-Series disk array.

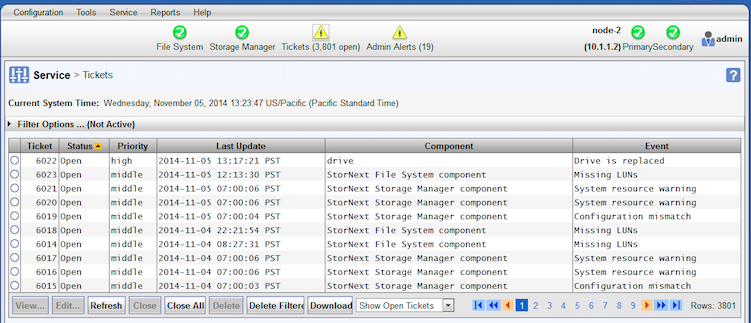

Usually we just replace the failed drive with a new one, check that everything looks good in the Quantum StorNext web interface, and don’t think much more about it.

This time was a bit different, since the first issue had brought a lot of visibility to the SAN architecture. We wanted to keep everything stable and avoid impacting the production system with needless latency, degraded performance, or downtime visible to the users.

So when we decided to replace the metadata drive, we wanted to follow the progress of the replacement to make sure everything went smoothly and to be able to communicate when the SAN would be completely healthy and back in an optimal state.

Unfortunately the only management options we have are:

- Quantum StorNext web interface

- NetApp E-Series CLI command (SMcli)

Neither tool provides an intuitive way to check how far along the drive rebuild is. The StorNext web UI doesn’t expose this level of detail, and it took us more than an hour, four calls to support, and a few Google queries to figure out how to get this information from the CLI (SMcli).

So if you want to know how your rebuild is progressing, you need to type the command “show storagearray longrunningoperations;”:

    [root@node-1 ~]# SMcli Qarray1a -S -c "show storagearray longrunningoperations;"
    Long Lived Operations:
    LOGICAL DEVICES  OPERATION  STATUS         TIME REMAINING
    1                Copyback   86% Completed  17 min
    TRAY_85_VOL_4    Copyback   85% Completed  17 min
    [root@node-1 ~]#
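If you would rather not retype the command every few minutes, the check can be wrapped in a small polling loop. What follows is only a sketch: `parse_lro` and `watch_lro` are hypothetical helper names, the parsing assumes the output keeps the column layout shown above (device, operation, `NN% Completed`, time remaining), and the 60-second default interval is arbitrary.

```shell
# Hypothetical helper: reads SMcli output on stdin and prints one
# "device|operation|percent|time remaining" line per running operation.
# Assumes each progress row contains a field such as "86%".
parse_lro() {
    awk '{
        for (i = 3; i <= NF; i++) {
            if ($i ~ /^[0-9]+%$/) {
                # Everything between the device name and the percentage
                # is treated as the operation name.
                op = $2
                for (j = 3; j < i; j++) op = op " " $j
                # Skip the word after the percentage ("Completed");
                # the rest of the row is the time remaining.
                rem = ""
                for (j = i + 2; j <= NF; j++) rem = (rem == "" ? $j : rem " " $j)
                print $1 "|" op "|" $i "|" rem
                break
            }
        }
    }'
}

# Hypothetical helper: polls the array until no operation reports progress.
# $1 = array name as known to SMcli, $2 = seconds between polls (default 60).
watch_lro() {
    local array="$1" interval="${2:-60}" out progress
    while :; do
        out=$(SMcli "$array" -S -c "show storagearray longrunningoperations;") || return 1
        progress=$(printf '%s\n' "$out" | parse_lro)
        if [ -z "$progress" ]; then
            echo "No operation is currently in progress."
            return 0
        fi
        # Prefix each progress line with the current time.
        printf '%s\n' "$progress" | while IFS='|' read -r dev op pct rem; do
            echo "$(date '+%H:%M:%S') $dev: $op $pct, $rem remaining"
        done
        sleep "$interval"
    done
}
```

After sourcing these functions, something like `watch_lro Qarray1a 30` would print one line per device every 30 seconds and return once the array no longer reports any progress rows.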

Before getting there, we poked around with the obvious commands to find this information, with no luck.

“show storagearray healthstatus” tells us the array is fixing itself, but gives no information about when it will be done:

    [root@node-1 stornext]# SMcli localhost -c "show storagearray healthstatus;"
    Performing syntax check...
    Syntax check complete.
    Executing script...
    Storage array health status = fixing.
    The following failures have been found:
    Volume - Hot Spare In Use
    Storage array: Qarray1
    Volume group: 1
    Status: Optimal
    RAID level: 1
    Failed drive at: tray 85, slot 6
    Service action (removal) allowed: No
    Service action LED on component: Yes
    Replaced by drive at: tray 85, slot 1
    Volumes: TRAY_85_VOL_3, TRAY_85_VOL_4
    Script execution complete.
    SMcli completed successfully.
    [root@node-1 stornext]#

“show volumegroup[$number]” tells us which drives are members of the volume group, but nothing about what is going on:

    [root@node-1 stornext]# SMcli localhost -c "show volumegroup[1];"
    Performing syntax check...
    Syntax check complete.
    Executing script...
    DETAILS
    Name: 1
    Status: Optimal
    Capacity: 558.410 GB
    Current owner: Controller in slot B
    Quality of Service (QoS) Attributes
    RAID level: 1
    Drive media type: Hard Disk Drive
    Drive interface type: Serial Attached SCSI (SAS)
    Tray loss protection: No
    Data Assurance (DA) capable: Yes
    DA enabled volume present: No
    Total Volumes: 2
    Standard volumes: 2
    Repository volumes: 0
    Free Capacity: 0.000 MB
    Associated drives - present (in piece order)
    Total drives present: 3
    Tray  Slot
    85    5     [mirrored pair with drive at tray 85, slot 1]
    85    6
    85    1     [hot spare drive is sparing for drive at 85, 6]
    Script execution complete.
    SMcli completed successfully.
    [root@node-1 stornext]#

And if we check the drive itself, nothing interesting shows up:

    [root@node-1 stornext]# SMcli localhost -c "show drive[85,6];"
    Performing syntax check...
    Syntax check complete.
    Executing script...
    Drive at Tray 85, Slot 6
    Status: Replaced
    Mode: Assigned
    Raw capacity: 558.912 GB
    Usable capacity: 558.412 GB
    World-wide identifier: 50:00:cc:a0:43:32:f6:90:00:00:00:00:00:00:00:00
    Associated volume group: 1
    Port  Channel
    0     2
    1     1
    Media type: Hard Disk Drive
    Interface type: Serial Attached SCSI (SAS)
    Drive path redundancy: OK
    Drive capabilities: Data Assurance (DA), Full Disk Encryption (FDE)
    Security capable: Yes, Full Disk Encryption (FDE)
    Secure: No
    Read/write accessible: Yes
    Drive security key identifier: None
    Data Assurance (DA) capable: Yes
    Speed: 10,020 RPM
    Current data rate: 6 Gbps
    Logical sector size: 512 bytes
    Physical sector size: 512 bytes
    Product ID: HUC109060CSS601
    Drive firmware version: MS04
    Serial number: KSGX0VVR
    Manufacturer: HITACHI
    Date of manufacture: Not Available
    Script execution complete.
    SMcli completed successfully.
    [root@node-1 stornext]#

But I am happy we discovered the command “show storagearray longrunningoperations;”, which let us monitor the copyback from the hot spare to the new drive and confirm that everything completed correctly, with the array back in a healthy, optimal state.

    [root@node-1 ~]# SMcli Qarray1a -S -c "show storagearray longrunningoperations;"
    Long Lived Operations:
    No operation is currently in progress.
    [root@node-1 ~]# SMcli Qarray1a -S -c "show volumegroup[1];"
    DETAILS
    Name: 1
    Status: Optimal
    Capacity: 558.410 GB
    Current owner: Controller in slot B
    Quality of Service (QoS) Attributes
    RAID level: 1
    Drive media type: Hard Disk Drive
    Drive interface type: Serial Attached SCSI (SAS)
    Tray loss protection: No
    Data Assurance (DA) capable: Yes
    DA enabled volume present: No
    Total Volumes: 2
    Standard volumes: 2
    Repository volumes: 0
    Free Capacity: 0.000 MB
    Associated drives - present (in piece order)
    Total drives present: 2
    Tray  Slot
    85    5     [mirrored pair with drive at tray 85, slot 6]
    85    6     [mirrored pair with drive at tray 85, slot 5]
    [root@node-1 ~]# SMcli localhost -c "show storagearray healthstatus;"
    Performing syntax check...
    Syntax check complete.
    Executing script...
    Storage array health status = optimal.
    Script execution complete.
    SMcli completed successfully.
    [root@node-1 ~]#
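The final health check can also be scripted, which is handy if you want a cron job or monitoring probe to tell you when the array is back to optimal. This is a minimal sketch: `health_status` and `array_is_optimal` are hypothetical names, and the parsing assumes the status line keeps the exact wording `Storage array health status = <status>.` seen in the output above.

```shell
# Hypothetical helper: reads "show storagearray healthstatus;" output on
# stdin and prints only the status word (for example "optimal" or "fixing").
health_status() {
    sed -n 's/.*Storage array health status = \([^.]*\)\..*/\1/p'
}

# Hypothetical helper: succeeds (exit code 0) only when the array named
# in $1 reports "optimal"; any other status, or no status at all, fails.
array_is_optimal() {
    local array="$1" status
    status=$(SMcli "$array" -c "show storagearray healthstatus;" | health_status)
    [ "$status" = "optimal" ]
}
```

For example, `array_is_optimal localhost && echo "SAN is healthy"` prints the message only once the array reports an optimal status.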

I guess the best approach would have been to have:

- connected the NetApp E-Series E2700 to the network

- installed SANtricity software GUI on a Windows host

- checked the progress from SANtricity GUI

A word about disk storage array CLIs: I got spoiled early on by the DDN S2A command line (2004), and later the SFA CLI (2008) disappointed me. Nexsan didn’t provide any CLI at all (2012). But today I realized that NetApp / LSI / Engenio (2014) is definitely no better. There is still a lot of progress to be made in the world of block storage appliances (if it still makes sense for the hardware vendors to work on it).